Today we have security news straight from the US, a continuation (and probably already a summary) of the 1BRC theme, and an interesting case study from Netflix.

1. White House recommends using memory-safe languages – including Java

Today we will start with a very interesting announcement, straight from the White House.

US President Joe Biden’s administration is urging developers to use memory-safe programming languages and move away from vulnerable ones such as C and C++. In a newly released report, the Office of the National Cyber Director (ONCD) urges developers to reduce the risk of cyber attacks by using programming languages that protect against memory-related security issues such as buffer overflows, out-of-bounds reads and memory leaks. The report highlights that around 70% of all security vulnerabilities are caused by memory safety issues, and that a shift to memory-safe programming languages can prevent entire classes of vulnerabilities from entering the digital ecosystem.

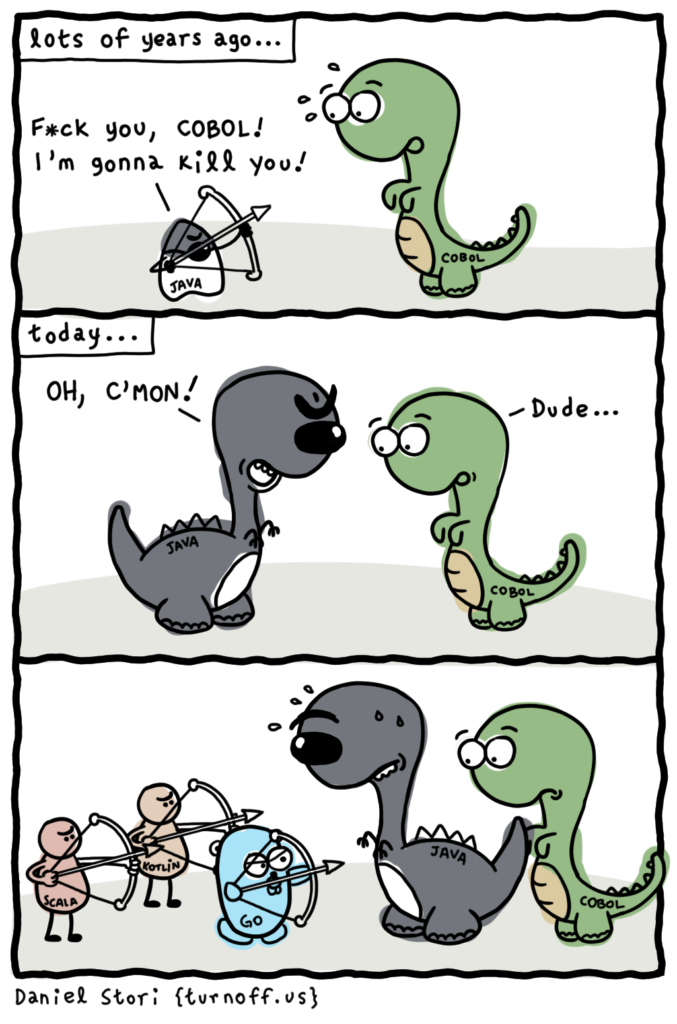

The new ONCD report lists Java as an example of a memory-safe programming language, while C and C++ are given as examples of languages prone to memory safety vulnerabilities. The NSA’s cybersecurity briefing also lists C#, Go, Rust, Ruby and Swift as languages considered memory-safe. The report aims to shift responsibility for cyber security from individuals and small businesses to larger organisations and the US government, which are better equipped to manage ever-evolving threats. Experts emphasise that the transition from C and C++ to other languages will be a long and difficult process, but existing alternatives, already gaining popularity, could accelerate the move towards more secure coding. The recently released Foreign Function & Memory (FFM) API, although not mentioned by name, fits this trend very well, as it gives Java a safer way to work with native code and off-heap memory.
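To make "memory-safe" a bit more concrete, here is a minimal sketch (the class and method names are made up for illustration) of what happens on an out-of-bounds read in Java. Where an unchecked read in C might silently return whatever sits next to the array in memory, the JVM checks the index and throws an exception instead.

```java
public class BoundsCheckDemo {

    // Hypothetical helper: tries to read data[index] and reports what happened.
    static String read(int[] data, int index) {
        try {
            return "value: " + data[index];
        } catch (ArrayIndexOutOfBoundsException e) {
            // The JVM refuses the access; no neighbouring memory is ever exposed.
            return "rejected";
        }
    }

    public static void main(String[] args) {
        int[] data = {10, 20, 30, 40};
        System.out.println(read(data, 2)); // valid index
        System.out.println(read(data, 4)); // one past the end
    }
}
```

The bounds check has a runtime cost, but it is exactly what removes buffer over-reads as a class of vulnerability.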

And since the subject of Rust has come up, I can’t resist pointing you to the article Java is becoming more like Rust, and I am here for it! Rust has become the gold standard for some (which is probably a rather controversial statement for others), but Java is also evolving, as I don’t think I need to convince any of the readers of this newsletter. Over the years, the two languages have been getting closer in many ways. Examples cited by the article are immutable Records and Sealed Interfaces, which make it easier to model complex data types. As a result, our language has more and more constructs from the family of so-called algebraic data types, enabling precise representation of states and behaviours, and with them so-called Data-Oriented Programming. A little food for thought.
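A minimal sketch of what such an algebraic data type looks like in practice, assuming Java 21 or newer. The `Shape` hierarchy below is a made-up example, not code from the article: a sealed interface lists all of its variants, records carry the immutable data, and a `switch` with pattern matching must cover every variant.

```java
public class ShapeDemo {

    // Sealed interface: the compiler knows Circle and Rectangle are the only variants.
    sealed interface Shape permits Circle, Rectangle {}

    // Records are immutable data carriers with generated accessors.
    record Circle(double radius) implements Shape {}
    record Rectangle(double width, double height) implements Shape {}

    static double area(Shape shape) {
        // No default branch needed: the switch is exhaustive over the sealed hierarchy.
        return switch (shape) {
            case Circle c -> Math.PI * c.radius() * c.radius();
            case Rectangle r -> r.width() * r.height();
        };
    }

    public static void main(String[] args) {
        System.out.println(area(new Rectangle(2, 3)));
    }
}
```

If a third variant is added to `Shape` later, `area` stops compiling until the new case is handled, which is much the same guarantee Rust gives with `enum` and `match`.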

And if you want to get a better understanding of what all this DOP is about, I recommend Adam Bien’s podcast and the episode From Hexagonal Architectures to Data Oriented Programming, in which he hosts José Paumard and they work through the topic together.

2. This is probably the last time… but we’re back on the subject of 1BRC

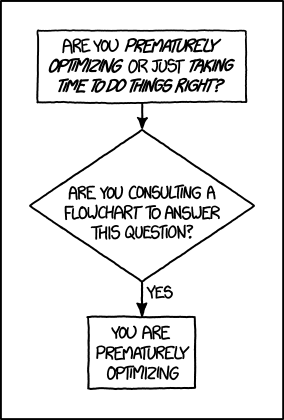

The One Billion Row Challenge (1BRC), which was about optimising Java code to process 1 billion rows of weather station data as quickly as possible and calculate the minimum, average and maximum temperature for each station, ended a while ago. However, it continues to fire the imagination of the community, which has recently produced a mass of articles summarising the techniques used in the challenge. I have therefore decided to take another look at the topic and summarise the most interesting ones.
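For orientation, the task itself fits in a few lines of Java. The sketch below is an illustrative baseline written for this summary, not any participant’s code: it assumes the 1BRC input format of one `station;temperature` entry per line, and the class and method names are made up.

```java
import java.util.DoubleSummaryStatistics;
import java.util.Map;
import java.util.TreeMap;
import java.util.stream.Collectors;
import java.util.stream.Stream;

public class NaiveAggregation {

    // Groups lines by station name and collects min/average/max per station.
    static Map<String, DoubleSummaryStatistics> aggregate(Stream<String> lines) {
        return lines
                .map(line -> line.split(";"))
                .collect(Collectors.groupingBy(
                        parts -> parts[0],
                        TreeMap::new, // sorted by station name
                        Collectors.summarizingDouble(parts -> Double.parseDouble(parts[1]))));
    }

    public static void main(String[] args) {
        // In the real challenge the lines would come from a file with a billion rows.
        var stats = aggregate(Stream.of("Hamburg;12.0", "Hamburg;8.0", "Oslo;-3.5"));
        stats.forEach((station, s) ->
                System.out.println(station + " min=" + s.getMin()
                        + " avg=" + s.getAverage() + " max=" + s.getMax()));
    }
}
```

Every `split`, every `String` and every `Double.parseDouble` call here allocates or does more work than strictly necessary, which is exactly where the optimisations described in the articles below come in.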

The first piece is an article by Marko Topolnik of QuestDB, who details his adventure through the challenge. Suffice it to say that his ‘naive’ Java Streams-based solution initially took 71 seconds to process the data. The optimisations he then describes range from parallel I/O processing and parsing temperatures directly to integers, through custom data structures (which also ‘make a difference’), to low-level tricks such as using sun.misc.Unsafe for memory access and SWAR (SIMD Within A Register) techniques for fast data processing. The final optimisations include, on the one hand, reducing the start-up time of the application itself and, on the other, attempts to cooperate with the CPU’s ‘branch prediction’ mechanisms.

It is worth mentioning that Marko Topolnik is not describing only his own ideas here: the whole challenge played out in PRs on GitHub, so the improvements made by individual participants were quickly adopted by the rest of the community. It was Marko, however, who described the whole thing in a very accessible, easy-to-understand way. And he is not a mere chronicler, either: he finished the challenge in the top ten, in a very high seventh position.
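Two of the tricks mentioned above can be sketched in a few lines. This is an illustration written for this summary, not Marko’s actual code, and all names are hypothetical. `parseTenths` turns a temperature such as `-12.3` into the integer `-123`, assuming exactly one fractional digit as in the 1BRC data. `firstSemicolon` is a classic SWAR zero-byte trick that looks for the `;` separator in eight bytes at once, assuming the bytes were loaded into the `long` in little-endian order.

```java
import java.nio.ByteBuffer;
import java.nio.ByteOrder;
import java.nio.charset.StandardCharsets;

public class SwarDemo {

    // Parses e.g. "-12.3" from buf[start..end) into tenths of a degree (-123), no doubles involved.
    static int parseTenths(byte[] buf, int start, int end) {
        int i = start;
        boolean negative = buf[i] == '-';
        if (negative) i++;
        int value = 0;
        for (; i < end; i++) {
            byte b = buf[i];
            if (b != '.') value = value * 10 + (b - '0'); // skip the decimal point
        }
        return negative ? -value : value;
    }

    // Returns the index (0..7) of the first ';' byte in the word, or -1 if there is none.
    static int firstSemicolon(long word) {
        long x = word ^ 0x3B3B3B3B3B3B3B3BL; // bytes equal to ';' (0x3B) become 0x00
        // Sets the high bit of zero bytes; the lowest set bit always marks the first match.
        long match = (x - 0x0101010101010101L) & ~x & 0x8080808080808080L;
        return match == 0 ? -1 : Long.numberOfTrailingZeros(match) >>> 3;
    }

    // Helper for the demo: packs an 8-character ASCII string into a little-endian long.
    static long toWord(String eightChars) {
        return ByteBuffer.wrap(eightChars.getBytes(StandardCharsets.US_ASCII))
                .order(ByteOrder.LITTLE_ENDIAN)
                .getLong();
    }

    public static void main(String[] args) {
        byte[] temp = "-12.3".getBytes(StandardCharsets.US_ASCII);
        System.out.println(parseTenths(temp, 0, temp.length));
        System.out.println(firstSemicolon(toWord("ab;cdefg")));
    }
}
```

The point of both is the same: replace per-character branching and floating-point parsing with a handful of integer operations, so that the CPU spends its time on arithmetic rather than on mispredicted branches.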

During 1BRC, the question came up: do LLMs have any edge over experienced engineers here (well, after all, it’s 2024, what are we talking about)? Antonio Goncalves put it to the test by attempting 1BRC with GitHub Copilot Chat, to explore how it could help in such a specific case of optimising application code performance. Mostly asking questions and receiving answers directly in the IDE, Antonio focused on successive fixes to the original algorithm (which took 4 minutes and 50 seconds to complete). Over the iterations, which he, like Marko, describes step by step, Antonio got his code to run in under a minute on his M1 Mac, which, as you can easily calculate, is a significant improvement over the baseline. Although the author had an appetite for more, he rated GitHub Copilot Chat as quite valuable: he says the tool not only sped up the development of a working algorithm, but also made it easier to optimise and adapt it to the JVM.

But 1BRC also caught the interest of those not very advanced in Java (or at least not enough to race for the top spots), so we got some other interesting publications. For example, Gerald Venzl and Connor McDonald looked at how to efficiently store datasets similar to the one used in 1BRC and run the analytics on the database side. They analysed how a database server can be scaled to process the data quickly. Gerald first went below 5 seconds in his 1 billion row challenge in SQL and Oracle Database (also describing the whole thing step by step), and then Connor, using In-Memory technology to speed up analytical queries together with appropriate degree-of-parallelism settings, managed to process a billion rows in less than a second… on a standard laptop with 16GB of RAM (although I couldn’t dig up what model it was). Madness.

And finally – Go. Canonical’s Ben Hoyt decided to describe his solution in Go and compare it to Java, to shed light on the differences between the ecosystems of the two languages, especially in terms of optimisation and performance. The text also has the advantage that the author (like his predecessors) takes the reader through the iterations of his approach. Ben limited himself to the Go standard library, without resorting to low-level operations or techniques such as memory-mapped files. Through iterative optimisations, he managed to reduce the processing time from 1 minute 45 seconds (naive solution) to just 3.4 seconds. The author concludes that Java, with advanced techniques and JVM-specific optimisations, can offer higher performance (the fastest Java solution achieved a time of less than a second). Go, with its emphasis on simplicity and readability, nevertheless delivered a very fast solution that is super easy to understand, even for someone who does not program in the language on a daily basis.

3. Netflix shares experience of using ZGC

More than once I have been asked who is actually using all these new Java features and whether there are any cases showing how well they work in a production environment. Only a week ago I wrote that the JDK developers were slowly thinking about getting rid of the non-generational variant of ZGC, leaving only the generational variant introduced in JDK 21, and it’s hardly surprising. Looking at Netflix’s experience, the new version has worked out better than well for them.

Netflix has indeed shared the results of its move from G1 to Generational ZGC. The article Bending pause times to your will with Generational ZGC shows that the change has significantly improved the performance of key video streaming services, with more than half of them now running on JDK 21 and benefiting from a reduction in P99+ request latencies (at Netflix’s scale, even the far percentiles still represent a huge number of users) and the elimination of pauses caused by garbage collection. By minimising these pauses, Netflix has not only reduced the number of timeouts, but also gained clearer insight into the actual sources of latency, allowing them to further optimise the performance of the service.

Interestingly, Danny Thomas, the author of the article, points out that this involves virtually no trade-offs, which is something of a rarity in software engineering. The adoption of Generational ZGC has also brought operational efficiencies, with Netflix’s JVM Ecosystem Team now seeing the same or even better CPU utilisation for the same workload compared to G1. Nor were the expected compromises in application throughput observed, and this is true even on ZGC’s default configuration, which requires no explicit tuning and handles a variety of workloads with consistently good performance. The article does acknowledge that ZGC may not be suitable for all workloads, especially throughput-oriented workloads with spiky allocation rates, but that is rather nitpicking. The Netflix case shows that the developers of Generational ZGC have indeed done a good job with the recent changes. Every time you have a binge-watching session, you can keep in the back of your mind that your experience is slightly better just because of ZGC.
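If you want to try this at home: on JDK 21, Generational ZGC is switched on with the JVM flags `-XX:+UseZGC -XX:+ZGenerational`. The sketch below (the class name is made up) uses the standard `java.lang.management` API to print which collectors the running JVM actually uses, which is a quick sanity check that the flags took effect. The exact collector names vary between collectors and JDK versions, so none are hard-coded here.

```java
import java.lang.management.GarbageCollectorMXBean;
import java.lang.management.ManagementFactory;
import java.util.List;

public class GcReport {

    // Names of the garbage collector beans registered in this JVM.
    static List<String> collectorNames() {
        return ManagementFactory.getGarbageCollectorMXBeans().stream()
                .map(GarbageCollectorMXBean::getName)
                .toList();
    }

    public static void main(String[] args) {
        for (GarbageCollectorMXBean gc : ManagementFactory.getGarbageCollectorMXBeans()) {
            // Count and accumulated time as reported by the JVM since start-up.
            System.out.println(gc.getName() + ": "
                    + gc.getCollectionCount() + " collections, "
                    + gc.getCollectionTime() + " ms");
        }
    }
}
```

Run it once with the default settings and once with `java -XX:+UseZGC -XX:+ZGenerational GcReport.java` to compare the reported collectors.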

And finally, something personal from me: together with Frank Delporte, I had the opportunity to be a technical reviewer of Otavio Santana’s new book Mastering the Java Virtual Machine – and it’s a very good book, so I’m sharing it with my network.

In his work, Otavio tackles the challenging task of bringing readers closer to the technological and business landscape around the Java Virtual Machine. This is a rather niche subject, which in my opinion it should not be, because the JVM is perhaps the most interesting aspect of the entire Java ecosystem and its family of languages. You may have noticed by reading this newsletter that this is my cup of tea.

PS: This is not a paid promotion (a shame that this always has to be added on the internet). The topic is simply really interesting, and since I had the opportunity to get acquainted with the whole thing at a very early stage and was able to add at least a tiny bit to the overall experience, I’m passing the good news on!