Welcome to 2024 🥂! But before we move forward, let’s recall with historical diligence what happened in 2023!

The year we got JDK 21

2023 will be remembered mostly for JDK 21. This was the year Java reclaimed its place in the wider programming conversation, largely thanks to a long list of eagerly anticipated features, with virtual threads leading the pack. The buzz was widespread, with frameworks racing to adopt them (a topic we’ll revisit later), but the other practical features were just as noteworthy. There were so many that I won’t detail them all here (we have a lot of ground to cover today), but I encourage you to read the article I wrote at launch time, which summarizes everything: A one-sentence summary of each new JEP from JDK 21. Besides the one-sentence summary promised in the title, each JEP also comes with a set of additional links that give a sense of the conversation surrounding the release.

Well, now that we’ve got the obvious behind us, it’s time to move on to the things you REALLY had a chance to miss 😇.

The year GraalVM EE died and GraalOS was born

2023 also brought a significant transformation of the GraalVM ecosystem. The first major shift concerned licensing, a process that started in late 2022. Historically, GraalVM came in two editions: Community (CE) and Enterprise (EE). At JavaOne 2022, it was announced that GraalVM CE would be contributed to OpenJDK as part of Project Galahad. In mid-2023, an equally substantial decision was made about the Enterprise Edition: it was replaced by Oracle GraalVM, a new distribution released under a license called the GraalVM Free Terms and Conditions (GFTC). This change makes the latest version of Oracle GraalVM free to use in both development and commercial production settings, a considerable improvement over the earlier licensing restrictions.

Another significant addition is the launch of Graal Cloud Native (GCN), a curated set of Micronaut framework modules (more on Micronaut later) designed for building microservices that are fully compatible with GraalVM Native Image compilation. Essentially, it can be regarded as the first comprehensive GraalVM-first framework.

Also in 2023, GraalOS, a new runtime environment for cloud applications, was introduced. It focuses on improving serverless workloads by running them directly on bare-metal servers, thanks to GraalVM. GraalOS uses ahead-of-time (AOT) compilation to turn applications into standalone native executables, which eliminates cold starts and improves the performance of serverless applications. In theory, GraalOS is meant to be compatible with any environment; in practice, it is designed specifically to integrate with Oracle Cloud Infrastructure Functions and to give that platform a competitive edge. Whether it will become a compelling argument for choosing Oracle remains to be seen.

It’s worth pointing out that AWS Lambda already supports GraalVM through a so-called custom runtime. However, this basically requires running a container with a GraalVM application. GraalOS removes this layer, eliminating the need to run a container and start a JVM.

Beyond these changes, Truffle has evolved as well. This metaframework for building programming language interpreters that run on GraalVM is now decoupled from the main platform. The Python and JavaScript interpreters, for example, are now available as separate packages that can be added as ordinary project dependencies, without the custom tooling that was previously required.

Details:

- GraalVM EE is Dead, Long Live Oracle GraalVM – JVM Weekly vol. 47

- What does GraalVM for JDK 21 have in common with the Rabbit of Caerbannog? Both surprise with their power – JVM Weekly vol. 58

The year MicroProfile joined forces with Core Profile

Now let’s rewind to the very start of the year: MicroProfile 6.0 was the first significant release of 2023. Besides introducing a fresh batch of standardized APIs, it also clarified the project’s relationship with Jakarta EE, something many had been eagerly waiting for.

Jakarta EE 10 introduced a new profile called the Core Profile: a set of APIs that its authors consider the bare minimum for building microservices in Java. This goal closely mirrors that of MicroProfile, which initially led to speculation about how the Core Profile would affect it. Some form of synergy seemed logical, but it was made clear from the beginning that the two initiatives were not going to merge. Even so, their creators have stressed that they stay in regular contact and maintain friendly relations.

Before MicroProfile 6.0, the standard’s approach was to cherry-pick from Jakarta EE the APIs that the project’s developers believed would be most useful to MicroProfile users. MicroProfile 5.0, for example, included:

- Jakarta Contexts and Dependency Injection (CDI)

- Jakarta Annotations

- Jakarta RESTful Web Services (JAX-RS)

- Jakarta JSON Binding (JSON-B)

- Jakarta JSON Processing (JSON-P)

Since MicroProfile 6.0, the project has been aligned with the Jakarta EE Core Profile. Instead of depending on individual APIs, it now depends on the whole profile, so the following APIs are also pulled in transitively (in addition to those already part of MicroProfile):

- Jakarta RESTful Web Services

- Jakarta Interceptors

Naturally, MicroProfile now requires at least Jakarta EE 10.

The year Testcontainers became a part of Docker

While funding announcements for JavaScript infrastructure projects (Vercel, Deno) are fairly common (a trend that was especially visible in 2021 and 2022), it’s much rarer to hear about a Java library building its own cloud offering (unless, of course, we count the data space, with companies like Confluent or DataStax). That’s why I found it particularly delightful to follow this year’s announcements from AtomicJar.

To put this in context, let’s recall what AtomicJar does. Have you heard of Testcontainers? It is a support library for JUnit that provides lightweight, disposable instances of popular databases, web browsers for Selenium, and essentially anything that can easily run in a Docker container. Some may grumble about running containers in unit tests, but the widespread adoption of Testcontainers shows how common this scenario is.

In February, AtomicJar raised a $25 million funding round to advance Testcontainers Cloud, a Software-as-a-Service (SaaS) offering that runs the containers in the cloud instead of locally. The goal was to make life easier for developers, especially with heavier test setups and hardware-specific versions of software.

Next came Testcontainers Desktop, an application designed to speed up test startup with features such as fixed ports and the ability to freeze containers for debugging. Testcontainers Desktop was a convenience for those who still prefer a local testing environment, while also offering a demo version of Testcontainers Cloud.

The highlight of the year was Docker’s acquisition of AtomicJar in December. The deal was strategically significant both for Docker (which made a bold entrance into the cloud development environments market) and for Testcontainers, as it could significantly broaden the solution’s user base. Together with Docker, Testcontainers is set to move beyond the Java world and establish itself as a market standard.

Let’s hope for more stories like this in 2024.

Details:

- What does the “State of Developer Ecosystem 2022” tell us about Java and the JVM ? – JVM Weekly #32

- Java, Kotlin, Scala: Insights from State of Developer Ecosystem 2023 – JVM Weekly vol. 62

- Docker acquires AtomicJar, company behind Testcontainers – JVM Weekly vol. 64

The year Spring indirectly became a part of Broadcom

While I welcome the above acquisition very optimistically, not every announcement of this kind is cause for celebration. It will come as no surprise to anyone that large open-source projects these days usually have corporate sponsors, and many of the people developing them do so as part of their corporate jobs. Therefore, the changes in these companies can have a huge impact on their development.

Pivotal Software (associated with Spring) and VMware share a long historical and business relationship, which culminated in 2019 when VMware acquired Pivotal. In 2023, VMware was itself acquired: Broadcom, best known for its semiconductors, chose to expand into the software market, and VMware, a leader in virtualisation and cloud infrastructure, was its choice of a promising area for expansion. The acquisition, one of the largest of its kind at $69 billion, was announced in May 2022 and finally closed on 22 November 2023.

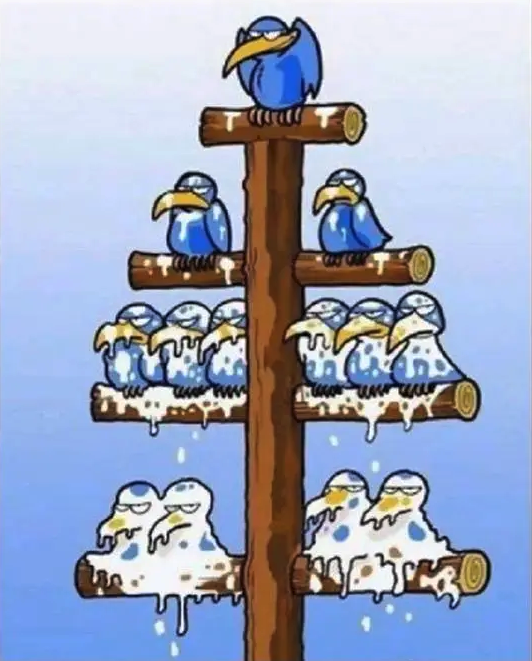

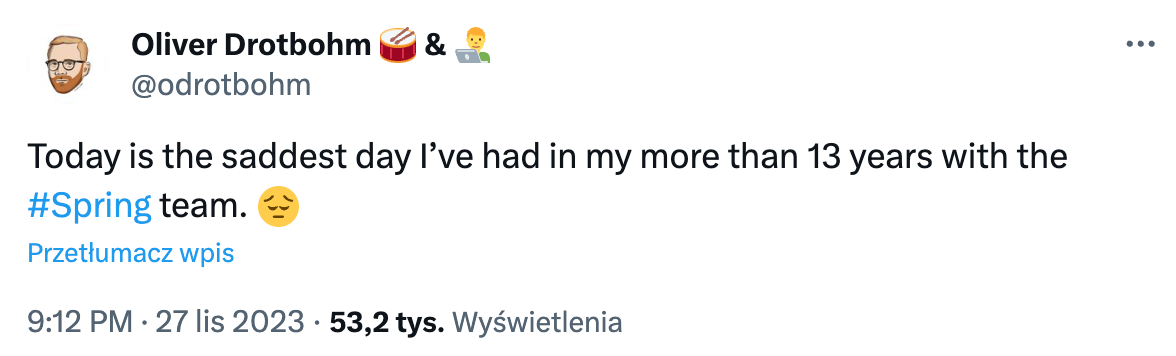

Shortly after the deal closed, the company went through a series of layoffs. Their full scope is unknown, but they clearly affected the team behind the most widely used Java framework. Oliver Drotbohm, a Spring architect who has led the Spring Data project for over ten years, summed up the situation perfectly.

The year Oracle revolutionized the pricing of its JDK

As I mentioned at the very beginning, apart from exceptional occasions like JDK 21, Java rarely makes it into the broader conversation of the developer community. While we (you, the readers of this newsletter, and I) may be interested in VM changes and other ecosystem news, someone not invested in the ecosystem mostly hears about Java when there is internet ‘drama’. And there was one at the beginning of February, all because Oracle decided to change the way it charges for its JDK. So I can’t help but devote some space to it in my summary of the year.

On January 23, Oracle announced a switch of the Oracle JDK licensing model to the Java SE Universal Subscription. Instead of being based on desktops and processors, pricing is now determined by the company’s employee count: companies with fewer than 1,000 employees pay $15 per employee per month, and the per-employee rate decreases for larger companies, down to $5.25. The count includes not just developers but all employees, even those outside the IT department, as well as contractors.

The changes do not apply to existing paying customers, who can stay on their previous terms (although I have not been able to find out how, for example, license renewals will be handled). To end on a positive note, the new rules could theoretically benefit some companies, especially small, strictly tech startups with few employees. Large enterprises probably lose out in any configuration, while small, rapidly scaling companies may gain, although the relatively high $15 per-employee fee for companies with fewer than 1,000 employees may undercut this theory.
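To make the “every employee counts” rule concrete, here is a back-of-the-envelope sketch using the $15 per-employee monthly list price quoted above for the smallest companies. It assumes that tier covers companies with fewer than 1,000 employees and deliberately does not model the cheaper rates for larger companies; the class and method names are hypothetical.

```java
final class JavaSeSubscriptionCost {

    // Assumed list price for companies with fewer than 1,000 employees:
    // $15 per employee per month, kept in US cents to avoid floating point.
    static final long CENTS_PER_EMPLOYEE_PER_MONTH = 1_500;

    // Annual list cost in whole dollars. The input is the TOTAL headcount
    // (including non-IT staff and contractors), not the number of Java users.
    // Only the smallest tier is modelled; larger companies pay lower rates.
    static long annualCostDollars(int totalEmployees) {
        if (totalEmployees < 1 || totalEmployees >= 1_000) {
            throw new IllegalArgumentException("this sketch only models 1 to 999 employees");
        }
        long cents = totalEmployees * CENTS_PER_EMPLOYEE_PER_MONTH * 12;
        return cents / 100;
    }

    public static void main(String[] args) {
        // A 500-person company pays for all 500 people, even if only 50 write Java.
        System.out.println(annualCostDollars(500)); // prints 90000
    }
}
```

The bill is the same whether 5 or 500 of those people actually run Java, which is the crux of the controversy.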

It should be remembered that Oracle is not the only JDK provider, so anyone who wants to use Java for free can use, for example, Adoptium. That’s a bit sad: it’s well known to people familiar with the Java ecosystem, but to the general public it’s a deeply unintuitive state of affairs. After all, there are no alternative distributions of Go or Rust (or they are statistically negligible).

The year of the beginning of the end of the null problem in Java, thanks to Project Valhalla

2023 brought another cycle of iterations for Valhalla, and interestingly, it may finally deliver enhancements developers have been waiting for a long time. One of the anticipated language features Valhalla is set to introduce is value types. The primary difference between a ‘reference type’ (the kind currently in the language) and a ‘value type’ is that the latter cannot take a null value.

If you haven’t kept up with Valhalla’s progress, you might have missed that at one point the developers proposed introducing the .val and .ref suffixes. These were meant to indicate whether you wanted to use a given type as a value or as a reference. This was the most worrying of the proposed changes for me, as I feared it would complicate the syntax. Fortunately, it appears we can do without them for now: as the project has evolved, the differences between value types and regular objects have been narrowed down to two key ones, the presence of a default value (like 0 for int) and support for nullability.

Unlike in Kotlin, this property is not easy to express in Java itself. Hence, the creators of Valhalla are considering introducing a new ‘nullness’ marker for types. They propose two markers that can be added when declaring a type: ! means null is not permitted, and ? means the value may be null. To summarize:

- Foo? means this type contains null values in its set

- Foo! means this type does not contain null values in its set

- Foo means 🤷‍♂️, in other words, its nullness is undefined

Over time, the developers intend for the unannotated form to take on the traits of one of the annotated variants. At this stage, it appears that an unannotated Foo will mostly be treated as non-nullable.

Details:

- Will Valhalla bring better nulls to Java? – JVM Weekly #33

- Exploring the Newest Updates of Project Leyden, Valhalla & Hermes: JVM Weekly vol. 54

The year I watched the JVM Language Summit with blazing eyes

JVMLS is an annual event that brings together JVM development experts and engineers to discuss current enhancements and the future of the platform. This year, we’ve been able to delve into a wealth of content from the event, providing us with a peek into the inner workings of numerous projects that typically stay hidden.

At JVMLS, a lot of attention went to Project Leyden, which introduces a new approach to optimizing Java code based on the concept of ‘shifting and constraining’ computation. The project aims to enable so-called condensers, which transform an application by shifting work out of run time into earlier phases, so that Java applications can be optimized before they are even launched. This reduces startup and warm-up time and lets applications reach peak performance sooner. At JVMLS, we got our first look at the results: the ApplicationModel, the fundamental model interface, and the patterns for implementing it were showcased.

Another interesting project is Project Babylon, which aims to go beyond Java’s existing reflection mechanisms by introducing so-called ‘code reflection’. Whereas standard reflection only exposes the structure of classes at runtime, code reflection is intended to let developers analyse and transform the code itself (a model of it produced at compile time), allowing code to be generated for different runtime environments, similar to LINQ in C#.

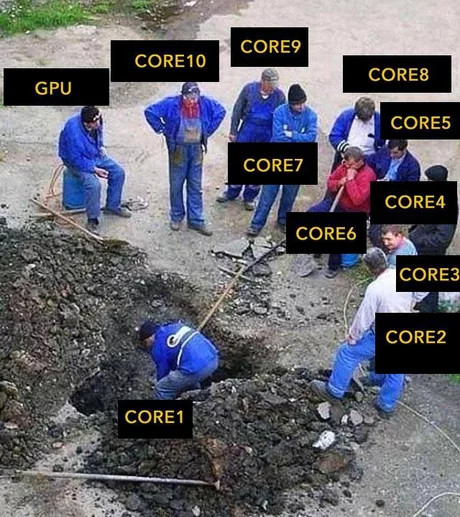

The Panama project is not slowing down either. Having tackled foreign memory access and interoperability with programs written in ‘native’ languages, it now aims to make it easier to integrate Java with non-traditional execution environments such as GPUs. With Panama, developers should gain better control over moving data between different types of memory and over the behaviour of the garbage collector, making it easier to work with machine learning and AI frameworks. Its developers are collaborating closely and sharing experience with TornadoVM, a platform that lets Java applications run on a variety of hardware, including GPUs, FPGAs and multi-core systems.

TornadoVM uses the GraalVM JIT compiler to translate Java code into native machine code, which often results in a significant performance boost. It also provides useful abstractions that simplify writing portable code. In December, TornadoVM reached version 1.0, paving the way for broader adoption.

I am definitely looking forward to JVMLS 2024.

Details:

- Panama, OpenCL and TornadoVM: Java’s entry into the GPU world – JVM Weekly vol. 55

- Exploring the Newest Updates of Project Leyden, Valhalla & Hermes: JVM Weekly vol. 54

- Project Babylon: Chance for LINQ (and more) in Java – JVM Weekly vol. 56

The year Kotlin 2.0 was announced

There are big changes coming to the world of Kotlin – at least in terms of numbering, although it won’t stop there.

The developers have announced that 1.9 will be the last release of the 1.x line. There will be no 1.10; instead, Kotlin will jump straight to 2.0, because this is the version planned to ship the long-awaited K2 compiler, “one to rule them all”, which provides a common infrastructure for all potential language targets. This means the developers won’t have to re-implement the same features for the JVM, WebAssembly or Android each time, which is expected to greatly accelerate Kotlin’s development. The change is big enough that it was deemed appropriate to mark it with a major version bump.

A major version bump can significantly affect a language’s ecosystem. However, JetBrains promises a stable migration for Kotlin, based on two factors. First, the changes behind the version bump happen under the hood, and the developers deliberately do not plan to introduce new syntax in this release; such features are reserved for the 2.x releases that will follow a successful transition to K2 (some of them have already been announced). Second, JetBrains both controls Kotlin and is the primary provider of its tooling, so most of the crucial tools can be developed alongside the language, which should make the whole operation smoother.

Details:

- Will Valhalla bring better nulls to Java? – JVM Weekly #33

- Play Framework is reborn like a phoenix from the ashes…. and gets rid of Akka – JVM Weekly vol. 60

- TLDW: Opinionated Wrap-up of KotlinConf 2023 Keynote – JVM Weekly vol. 40

The year Roman Elizarov left Kotlin Team

At the end of the year, the Kotlin world received a significant announcement: Roman Elizarov, the project lead, announced he was leaving JetBrains for personal reasons, ending his work on the language. He said goodbye in a series of tweets, thanking everyone for the opportunity to work on Kotlin and expressing his deep admiration for the Kotlin community.

We’ve also discovered who will be guiding the language’s future direction – Mikhail Zarechenskiy, who previously worked behind the scenes at JetBrains, is set to become Kotlin’s lead designer. Significant team changes also include Hadi Hariri, who you might recognize as the co-host of the Talking Kotlin podcast – he will now assume additional responsibilities beyond promotional activities and his participation in KotlinConf. Additionally, the other Talking Kotlin host, Sebastian Aigner, will take on a more significant role in the Kotlin Foundation, especially in backing initiatives of the broader Kotlin ecosystem. Egor Tolstoy will continue to lead the team from the Product Management perspective.

The year we saw a rash of interesting new releases

Frameworks

Spring Framework 6.1 and Spring Boot 3.2

Spring Framework 6.1 adds support for two significant features: virtual threads and CRaC. As a reminder, virtual threads are a new concurrency model introduced in JDK 21 as part of Project Loom. Unlike conventional threads, which are managed by the operating system, virtual threads are scheduled by the JVM, which makes it possible to create a huge number of them without the overhead of traditional threads.
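For readers who have not tried virtual threads yet, here is a minimal sketch using only the plain JDK 21 API (`Executors.newVirtualThreadPerTaskExecutor()`), not Spring’s own configuration; the class and method names are made up for illustration. It runs 10,000 tasks that each block for 10 ms, something that would be costly with one platform thread per task.

```java
import java.time.Duration;
import java.util.concurrent.Executors;
import java.util.concurrent.atomic.AtomicInteger;

final class VirtualThreadsDemo {

    // Runs `taskCount` blocking tasks, each on its own virtual thread,
    // and returns how many of them completed.
    static int runBlockingTasks(int taskCount) {
        AtomicInteger completed = new AtomicInteger();
        // Creates a new virtual thread for every submitted task (JDK 21+).
        try (var executor = Executors.newVirtualThreadPerTaskExecutor()) {
            for (int i = 0; i < taskCount; i++) {
                executor.submit(() -> {
                    // A blocking call: the virtual thread is unmounted from its
                    // carrier (platform) thread while it sleeps.
                    Thread.sleep(Duration.ofMillis(10));
                    completed.incrementAndGet();
                    return null;
                });
            }
        } // close() waits until all submitted tasks have finished
        return completed.get();
    }

    public static void main(String[] args) {
        System.out.println(runBlockingTasks(10_000)); // prints 10000
    }
}
```

The code inside each task is ordinary blocking code; the scalability comes from the executor, which is why frameworks can adopt virtual threads mostly by changing how they dispatch work.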

The Coordinated Restore at Checkpoint (CRaC) project, on the other hand, allows the state of a running JVM to be saved and restored, cutting application startup time. This addresses the ‘cold start’ problem of Java applications, which is particularly important for cloud and serverless applications. Spring integrates with CRaC, enabling controlled checkpoints and restoration of the JVM state.

Spring Boot 3.2.0, in turn, brings support for Apache Pulsar and for the RestClient interface from Spring Framework 6.1, a ready-to-use HTTP client for making REST requests. It also adds support for JdbcClient and automatic correlation ID logging when using Micrometer. Furthermore, Spring Boot 3.2.0 simplifies building Docker images with the Cloud Native Buildpacks standard.

Quarkus 3.0

Quarkus 3.0 brings an improved Dev UI that makes application management and configuration easier. The Dev Mode feature of Quarkus now supports Continuous Testing, thereby boosting developer productivity and comfort.

A particularly interesting addition is Mutiny 2, the reactive and asynchronous programming library developed by the Quarkus team. Mutiny aims to make reactive code easier to understand, offering a more approachable API than libraries like RxJava and Project Reactor. Mutiny 2 also marks the end of an era, as it moves from the Reactive Streams API it previously implemented to the standard java.util.concurrent.Flow.
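The `java.util.concurrent.Flow` interfaces that Mutiny 2 now builds on ship with the JDK itself. The sketch below uses only JDK types (`SubmissionPublisher` and a hand-written `Flow.Subscriber`), not Mutiny’s API, to show the request-based backpressure contract at the heart of `Flow`: the subscriber asks for one item at a time. The class names are illustrative.

```java
import java.util.List;
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.CopyOnWriteArrayList;
import java.util.concurrent.Flow;
import java.util.concurrent.SubmissionPublisher;

final class FlowDemo {

    // A subscriber that requests one item at a time (explicit backpressure)
    // and completes a future with everything it received.
    static final class CollectingSubscriber implements Flow.Subscriber<String> {
        private final List<String> received = new CopyOnWriteArrayList<>();
        final CompletableFuture<List<String>> done = new CompletableFuture<>();
        private Flow.Subscription subscription;

        @Override public void onSubscribe(Flow.Subscription subscription) {
            this.subscription = subscription;
            subscription.request(1); // ask for the first item only
        }

        @Override public void onNext(String item) {
            received.add(item);
            subscription.request(1); // ready for the next one
        }

        @Override public void onError(Throwable throwable) {
            done.completeExceptionally(throwable);
        }

        @Override public void onComplete() {
            done.complete(List.copyOf(received));
        }
    }

    // Publishes the items and waits until the subscriber has seen all of them.
    static List<String> publish(List<String> items) {
        var subscriber = new CollectingSubscriber();
        try (var publisher = new SubmissionPublisher<String>()) {
            publisher.subscribe(subscriber);
            items.forEach(publisher::submit); // blocks only if the subscriber's buffer is full
        } // close() signals onComplete after the buffered items are delivered
        return subscriber.done.join();
    }

    public static void main(String[] args) {
        System.out.println(publish(List.of("a", "b", "c"))); // prints [a, b, c]
    }
}
```

Libraries such as Mutiny hide this request/onNext choreography behind higher-level operators, but standardizing on `Flow` means their publishers can interoperate with any other `Flow`-based code.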

However, while Quarkus 3.0 brings many valuable changes, the promised full support for virtual threads is missing.

Micronaut 4.0

Micronaut Framework 4.0 drops support for Java versions older than JDK 17, which has allowed its APIs to embrace newer language features such as records, sealed classes, switch expressions, text blocks and pattern matching for instanceof. The Micronaut HTTP client now also comes in a variant based on the Java HTTP Client introduced in JDK 11, which can improve performance. Micronaut 4.0 has also been optimised for GraalVM, making it easier to compile Micronaut applications that depend on other libraries.

The new release also brings better cloud support, a modular architecture and initial support for virtual threads. In addition, Micronaut Serialization has become the default module, offering efficient and secure JSON serialisation/deserialisation APIs.
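Since Micronaut 4.0 now assumes JDK 17, framework and application code can rely on records, sealed types, switch expressions, text blocks and pattern matching for instanceof. The sketch below is plain JDK 17 code showing those features together; it is not Micronaut’s API, and the `Shape` hierarchy and method names are hypothetical.

```java
import java.util.Locale;

final class ModernJavaDemo {

    // Sealed hierarchy: only the permitted records may implement Shape.
    sealed interface Shape permits Circle, Rectangle {}

    // Records: immutable data carriers with generated accessors, equals, hashCode and toString.
    record Circle(double radius) implements Shape {}
    record Rectangle(double width, double height) implements Shape {}

    enum Size { SMALL, LARGE }

    // Pattern matching for instanceof tests the type and binds a variable in one step.
    static double area(Shape shape) {
        if (shape instanceof Circle c) {
            return Math.PI * c.radius() * c.radius();
        } else if (shape instanceof Rectangle r) {
            return r.width() * r.height();
        }
        throw new IllegalArgumentException("Unknown shape: " + shape);
    }

    // A switch expression yields a value; covering every enum constant makes it exhaustive.
    static String label(Size size) {
        return switch (size) {
            case SMALL -> "small";
            case LARGE -> "large";
        };
    }

    // A text block keeps the JSON template readable; there is no trailing newline
    // because the closing delimiter sits on the content line.
    static String toJson(Shape shape) {
        Size size = area(shape) < 10 ? Size.SMALL : Size.LARGE;
        String template = """
                {"type": "%s", "area": %.1f, "size": "%s"}""";
        return String.format(Locale.ROOT, template,
                shape.getClass().getSimpleName(), area(shape), label(size));
    }

    public static void main(String[] args) {
        // prints {"type": "Rectangle", "area": 6.0, "size": "small"}
        System.out.println(toJson(new Rectangle(2, 3)));
    }
}
```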

Helidon 4.0

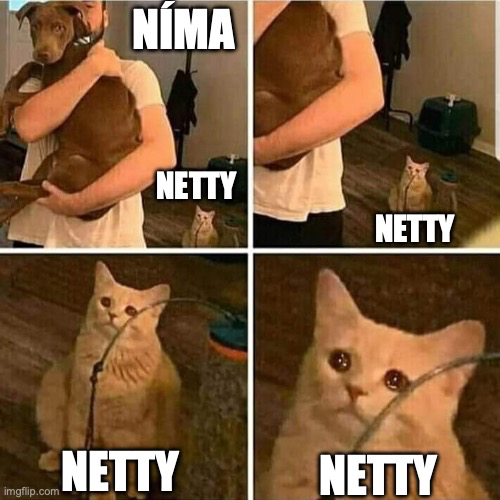

Helidon 4 became the world’s first microservices framework based on virtual threads. The main change in this release is the replacement of Netty with a new web server implementation called Níma. Níma is designed to take full advantage of Java 21 virtual threads: each request runs on its own dedicated virtual thread. This makes blocking operations straightforward while still providing a high level of concurrency, eliminating the need for complex asynchronous code, and, according to the developers themselves, it improves performance, especially in Helidon MP. It also means we have lived to see the first framework that requires Java 21 to run.

Helidon SE, the core set of Helidon APIs, has also undergone quite a transformation. Virtual threads made it possible to move from asynchronous APIs to blocking ones (I still surprise myself writing that sentence). This simplifies the code, making it easier to write, maintain and understand, and probably gives us a foretaste of what lies ahead for the whole ecosystem. Unfortunately, Helidon 3 SE users will have to adapt their code significantly to the updated APIs. While this requires some upfront effort, the gains in performance and code simplicity seem well worth it.
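This is not Níma’s API, but the thread-per-request model Helidon 4 adopts can be illustrated with JDK 21 classes alone. The sketch below uses the JDK’s built-in `com.sun.net.httpserver.HttpServer` with a virtual-thread-per-task executor and a handler written in plain blocking style, then calls it with the JDK HTTP client; the `/hello` path, the response text and the class name are hypothetical.

```java
import com.sun.net.httpserver.HttpServer;
import java.io.IOException;
import java.io.OutputStream;
import java.net.InetSocketAddress;
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.nio.charset.StandardCharsets;
import java.util.concurrent.Executors;

final class BlockingServerDemo {

    // Starts the JDK's built-in HTTP server on a free loopback port. Every exchange
    // is dispatched to its own virtual thread, so the handler can simply block.
    static HttpServer start() throws IOException {
        HttpServer server = HttpServer.create(new InetSocketAddress("127.0.0.1", 0), 0);
        server.createContext("/hello", exchange -> {
            try {
                Thread.sleep(50); // stand-in for a blocking call (JDBC, remote HTTP, file I/O)
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
            String kind = Thread.currentThread().isVirtual() ? "virtual" : "platform";
            byte[] body = ("handled on a " + kind + " thread").getBytes(StandardCharsets.UTF_8);
            exchange.sendResponseHeaders(200, body.length);
            try (OutputStream out = exchange.getResponseBody()) {
                out.write(body);
            }
        });
        server.setExecutor(Executors.newVirtualThreadPerTaskExecutor());
        server.start();
        return server;
    }

    // Starts the server, sends one blocking request with the JDK HTTP client, then stops it.
    static String roundTrip() {
        HttpServer server = null;
        try {
            server = start();
            URI uri = URI.create("http://127.0.0.1:" + server.getAddress().getPort() + "/hello");
            HttpResponse<String> response = HttpClient.newHttpClient().send(
                    HttpRequest.newBuilder(uri).GET().build(),
                    HttpResponse.BodyHandlers.ofString());
            return response.body();
        } catch (IOException e) {
            throw new IllegalStateException(e);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        } finally {
            if (server != null) {
                server.stop(0);
            }
        }
    }

    public static void main(String[] args) {
        System.out.println(roundTrip()); // prints "handled on a virtual thread"
    }
}
```

Note that neither side uses callbacks or futures: the handler and the client both just block, and the virtual threads make that affordable.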

Dropwizard 3.0 and 4.0

I remember using Dropwizard back when this promising, much-hyped framework was at the peak of its popularity. Despite the initial enthusiasm, Dropwizard gradually lost steam, and the releases of versions 3.0 and 4.0 went largely unnoticed in the tech world.

The reason for two versions at once is simple: Dropwizard 3.0 is based on Java EE and the javax.* namespace, so migrating from Dropwizard 2.x to 3.0 should require minimal changes for many projects. Dropwizard 4.0, on the other hand, relies on Jakarta EE dependencies and the jakarta.* namespace, which may mean more work when migrating from Dropwizard 2.x to 4.0.

The two versions also share several changes: the minimum Java version is raised to 11, the package structure is based on JPMS (I have a feeling Dropwizard was one of the first tools to take this standard seriously), Jetty is updated to 10.0.x (which also requires at least Java 11), Apache HttpClient is updated to 5.x, and support for JUnit 4.x is removed (moved to dropwizard-testing-junit4). Additionally, only Dropwizard 4.0 supports Hibernate 6.0, since that requires the move to jakarta.

Play Framework 2.9 and 3.0

Another double release: Play Framework has made a comeback with versions 2.9 and 3.0. Once seen as Spring’s main rival, Play has been revived after Lightbend handed it over to the community, following a period of stagnation and the controversy over the new licence of Akka, which Play uses internally.

Release 3.0 therefore migrates from Akka to Apache Pekko, a fork of Akka 2.6.x, and now uses Pekko and its HTTP components. For applications tightly integrated with Akka, this change may require some migration effort, which is why Play 2.9, still based on Akka and Akka HTTP, was released at the same time.

In addition, Play 2.9 and 3.0 focus on support for newer language versions, Scala 3 compatibility, and alignment with newer library versions such as Akka HTTP 10.2, Guice 6.0.0 and Jackson 2.14. Note that Play Framework 3.0 no longer supports older Java versions: the minimum required version is Java 11.

Grails 6

Grails 6.0, the latest release of the Groovy web application development framework, introduces several significant improvements. The most important new feature is the Grails Forge UI, which enables developers to more efficiently manage projects written in the framework. The new interface provides a simplified project initiation process, intuitive navigation, real-time validation, visual dependency management and responsive design.

Grails 6.0 also strengthens integration with Micronaut, making it easier to use Micronaut beans in Grails components and to use the Micronaut HTTP client for more seamless interaction with REST APIs. Note that Grails 6.0 also raises the minimum JDK requirement to version 11.

WildFly 30

Although the official recommendation is still JDK 17 or 11, a significant part of the WildFly 30 release was devoted to Java SE 21 integration: the new version passes both the Jakarta EE 10 Core Profile and MicroProfile certification tests on it. With the growing focus on JDK 21, WildFly 30 may well be the last release to support JDK 11.

WildFly 30 also changed its licence from the Lesser General Public License 2.1 (LGPL 2.1) to the Apache Software License 2.0 (ASL 2.0), completing a long-running process. The move marks the transition from a ‘weak’ copyleft licence to a more permissive one.

Vaadin Hilla 2.0

About a year ago, Vaadin introduced Hilla, a web framework that lets Java developers build full-stack applications with a Spring Boot-based backend and a frontend written in TypeScript. Formerly known as Vaadin Fusion, Hilla offers a unified configuration for Java and TypeScript and an extensive collection of UI components.

The latest version 2.0 adds an improved TypeScript generator, support for WebSockets, compatibility with GraalVM Native Image, simplified theme creation and an SSO Kit tool for rapid implementation of single sign-on (SSO). This version uses Spring Boot 3, Java 17 and Jakarta EE 10.

Tooling

AI Assistant in IntelliJ IDEA

IntelliJ IDEA 2023.3 brought AI Assistant out of the testing phase and made it generally available. It offers improved code generation directly in the editor, a context-aware AI chat for project-related questions, and project-aware AI actions that use extended context to produce more comprehensive results. Think of it as ChatGPT built into your IDE, enhanced by tight integration with the IDE itself through the so-called AI Actions.

Gradle 8.0

Work on the Gradle 8.x line has focused largely on the Kotlin DSL, an alternative to the traditional Groovy DSL that offers better code completion while editing and that became the default during this release line. Gradle 8.0 improved script compilation by introducing an interpreter for the declarative plugins {} blocks in .gradle.kts scripts, a gain of around 20% that brings Kotlin DSL processing times closer to those of the Groovy DSL.

But when it comes to Gradle, even more interesting things have been shown by JetBrains.

Amper by JetBrains

Build and CI processes have gone through changing trends. In recent years (perhaps even the last decade), CI/CD solutions like GitHub Actions or Travis CI, based on declarative YAML configuration, have become popular. In the Java ecosystem, despite the availability of Maven with its XML, many developers have moved to Gradle for its greater flexibility, although this sometimes leads to complex build scripts.

This trend reflects ongoing challenges in managing complex projects, which JetBrains felt acutely as Kotlin Multiplatform exposed Gradle’s pain points. In response, JetBrains started Project Amper, which aims to simplify Gradle configuration with YAML-based configuration files. Amper currently supports Kotlin and Kotlin Multiplatform, as well as Java and Swift. The goal is to make Gradle more accessible and less complex.

Official Java Extension for Visual Studio Code from Oracle

Oracle has released an official Java extension for Visual Studio Code, a significant step in recognising VS Code as an alternative to traditional IDEs. The extension provides features such as code completion, error highlighting, debugging support and integration with Gradle and Maven projects. Notably, it is powered by a Language Server Protocol (LSP) language server from NetBeans (you can read a bit about LSP itself down below).

Azul Zulu JDK 17 with CRaC support

Azul has released builds of Zulu, its OpenJDK 17 distribution, with integrated support for the CRaC API. As discussed earlier in the context of Spring, CRaC lets you create ‘checkpoints’ while your application is running, preserving the state of the entire runtime environment. It is akin to the save-state feature in emulators: the whole memory state is written to disk and can be restored later. Azul is the first vendor to offer commercial support for this technology, which may interest early adopters of the new Spring Framework 6.1 features.
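
To make the checkpoint/restore lifecycle a bit more concrete, here is a minimal sketch of the pattern CRaC expects from applications: anything that cannot survive being frozen to disk (open sockets, files, connection pools) is released just before the checkpoint and re-created after the restore. The real API revolves around the org.crac.Resource interface, whose beforeCheckpoint and afterRestore callbacks are registered via Core.getGlobalContext(). To keep the example runnable on any JDK, CheckpointResource, CheckpointCoordinator and ConnectionPool below are hypothetical stand-ins, and the coordinator only simulates what a CRaC-enabled JVM would do.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical stand-in for org.crac.Resource; the real callbacks receive a Context
// argument and may throw checked exceptions.
interface CheckpointResource {
    void beforeCheckpoint();
    void afterRestore();
}

// Hypothetical coordinator that only simulates the JVM's side of checkpoint/restore.
class CheckpointCoordinator {
    private final List<CheckpointResource> resources = new ArrayList<>();

    void register(CheckpointResource resource) {
        resources.add(resource);
    }

    void simulateCheckpointAndRestore() {
        // Give every resource a chance to release state that cannot be persisted.
        for (CheckpointResource r : resources) {
            r.beforeCheckpoint();
        }
        // A CRaC-enabled JVM would write its memory image to disk here and,
        // possibly much later in a new process, restore from it.
        for (CheckpointResource r : resources) {
            r.afterRestore();
        }
    }
}

// Example resource: external connections must not be captured while open.
class ConnectionPool implements CheckpointResource {
    private boolean open = true;
    private int reconnects = 0;

    boolean isOpen() { return open; }
    int reconnects() { return reconnects; }

    @Override
    public void beforeCheckpoint() {
        open = false; // close sockets before the snapshot is taken
    }

    @Override
    public void afterRestore() {
        open = true; // re-establish connections in the restored process
        reconnects++;
    }
}

class CracSketch {
    public static void main(String[] args) {
        CheckpointCoordinator coordinator = new CheckpointCoordinator();
        ConnectionPool pool = new ConnectionPool();
        coordinator.register(pool);
        coordinator.simulateCheckpointAndRestore();
        System.out.println("open=" + pool.isOpen() + ", reconnects=" + pool.reconnects());
    }
}
```

With the real library, a class like ConnectionPool would implement org.crac.Resource and register itself with the global context instead of a hand-rolled coordinator.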

Liberica JDK Performance Edition

BellSoft has launched Liberica JDK Performance Edition, a modified JDK 11 that incorporates performance improvements from JDK 17. According to BellSoft, it lets companies running JDK 11 improve application performance by 10-15% without code changes. Liberica JDK Performance Edition has been available to Liberica JDK subscribers since 1 August at no additional charge and comes with other BellSoft tools.

New interesting solutions

Fury

Fury, developed by Ant Group, is a new serialisation library. It combines the performance of static serialisation with the flexibility of dynamic serialisation, which makes it a good fit for high-throughput bulk data transfer. It offers full compatibility with existing Java serialisation mechanisms and, besides advanced serialisation techniques, uses SIMD operations from the Vector API and a zero-copy approach to minimise latency during data transfer. Fury also uses a JIT compiler that generates optimised serialisation code at runtime.
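
One of the ideas behind Fury’s JIT is generating type-specific serialisation code instead of inspecting objects reflectively. The sketch below is not Fury’s API: it hand-writes the kind of ‘static’ serialiser such code generation could produce for a hypothetical Point record, and compares its output with the ‘dynamic’ JDK ObjectOutputStream, which also has to write class metadata so it can handle any Serializable type.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.DataInputStream;
import java.io.DataOutputStream;
import java.io.IOException;
import java.io.ObjectOutputStream;
import java.io.Serializable;
import java.io.UncheckedIOException;

// Hypothetical example type; Serializable only so the JDK comparison works.
record Point(int x, int y) implements Serializable {}

class PointCodec {
    // "Static" serialiser: the schema (two ints) is baked into the code,
    // so only the field values are written, with no type metadata.
    static byte[] write(Point p) {
        ByteArrayOutputStream bytes = new ByteArrayOutputStream();
        try (DataOutputStream out = new DataOutputStream(bytes)) {
            out.writeInt(p.x());
            out.writeInt(p.y());
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return bytes.toByteArray();
    }

    static Point read(byte[] data) {
        try (DataInputStream in = new DataInputStream(new ByteArrayInputStream(data))) {
            return new Point(in.readInt(), in.readInt()); // arguments evaluate left to right
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    // "Dynamic" serialiser: JDK serialisation writes a class descriptor
    // alongside the data so it can handle any Serializable object.
    static byte[] writeJdk(Object o) {
        ByteArrayOutputStream bytes = new ByteArrayOutputStream();
        try (ObjectOutputStream out = new ObjectOutputStream(bytes)) {
            out.writeObject(o);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return bytes.toByteArray();
    }

    public static void main(String[] args) {
        Point p = new Point(3, 4);
        System.out.println("static: " + write(p).length + " bytes, JDK: " + writeJdk(p).length + " bytes");
    }
}
```

The trade-off is flexibility: a hand-written codec breaks as soon as Point changes, which is why generating such code automatically at runtime is attractive.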

EclipseStore 1.0

MicroStream is a solution that provides data persistence in a ‘databaseless’ way native to Java, tailored for microservices and serverless environments. It allows Java object graphs of any size and complexity to be stored in memory while ensuring full transactional consistency. In 2023, MicroStream became an official Eclipse Foundation project, EclipseStore, which has reached version 1.0, and all new features will now be released under it. This does not mean the end of development, however; in fact, the MicroStream team will continue to actively work on the project. Importantly, EclipseStore is expected to remain a key part of MicroStream’s commercial strategy, forming the basis for the MicroStream Cluster and MicroStream Enterprise offerings.

JoularJX 2.0

JoularJX 2.0 is a tool that enables developers to accurately monitor and analyse energy consumption on a variety of devices and operating systems. It uses models to estimate the energy consumption of key hardware components, such as the processor and memory, and provides detailed reports and visualisations of that consumption. The tool answers the growing demand for sustainable software practices and energy efficiency in the IT industry.

A key new feature in version 2.0 is the ability to track power and energy consumption at the level of individual methods, giving developers detailed insight into which parts of their application are the most energy-intensive.
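
Method-level attribution builds on a simple idea: energy is power multiplied by time (E = P · t), and the energy measured over an interval can be split among the methods that were running during it. The sketch below is a deliberately simplified model, not JoularJX’s actual implementation: it takes one power reading for an interval plus a list of sampled top-of-stack method names (all hypothetical inputs) and divides the energy in proportion to how often each method was sampled.

```java
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

class MethodEnergyEstimator {
    // Splits the energy of one measurement interval among methods, in proportion
    // to how often each method was observed at the top of the sampled stacks.
    static Map<String, Double> attribute(double powerWatts, double intervalSeconds,
                                         List<String> sampledMethods) {
        double energyJoules = powerWatts * intervalSeconds; // E = P * t
        Map<String, Integer> counts = new TreeMap<>();
        for (String method : sampledMethods) {
            counts.merge(method, 1, Integer::sum);
        }
        Map<String, Double> energy = new TreeMap<>();
        int total = sampledMethods.size();
        for (Map.Entry<String, Integer> entry : counts.entrySet()) {
            energy.put(entry.getKey(), energyJoules * entry.getValue() / total);
        }
        return energy; // empty when there were no samples
    }

    public static void main(String[] args) {
        // Hypothetical inputs: 10 W measured over 2 s, four stack samples.
        List<String> samples = List.of("Parser.parse", "Parser.parse", "Parser.parse", "Cache.lookup");
        System.out.println(attribute(10.0, 2.0, samples)); // prints {Cache.lookup=5.0, Parser.parse=15.0}
    }
}
```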

New in the world of Kotlin and Scala

Well, let’s take another look at Java’s relatives, who are always vying to be the superior one 😉

Kotlin Multiplatform Stable

Kotlin Multiplatform (KMP) has reached stability and production readiness, a significant step for mobile developers. The technology allows code to be shared between platforms, blurring the boundaries between cross-platform and native development. It spans environments such as Android, iOS and server-side applications, although the benefits are somewhat smaller in the latter case. The power of KMP is demonstrated by libraries such as Compose Multiplatform.

AWS SDK for Kotlin

At this year’s Amazon Web Services re:Invent conference in Las Vegas, the release of the AWS SDK for Kotlin was announced. The SDK has been designed around the language’s idioms, including dedicated DSLs and support for asynchronous AWS service calls using coroutines. The current version supports server-side applications and Android API level 24+, and support for other platforms, including Kotlin/Native, is planned.

Scala Metals 1.0

The Language Server Protocol (LSP) is an open, JSON-RPC-based protocol for communication between editors or IDEs and language servers, which provide language-specific features such as autocompletion or go-to-definition. With LSP, a language server is written once and can be reused across many tools, eliminating the need to implement the same features over and over again for each editor.
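
To make the protocol a little more tangible: under LSP’s base protocol, each JSON-RPC message is preceded by a Content-Length header giving the size of the body in bytes, followed by a blank line (\r\n\r\n). The sketch below frames a textDocument/definition (go-to-definition) request. The file URI and cursor position are hypothetical, and the JSON is assembled by hand only to avoid a dependency, so it performs no escaping.

```java
import java.nio.charset.StandardCharsets;

class LspFraming {
    // Prefixes a JSON-RPC payload with the LSP base-protocol header.
    // Content-Length counts UTF-8 bytes, not Java chars.
    static String frame(String json) {
        int length = json.getBytes(StandardCharsets.UTF_8).length;
        return "Content-Length: " + length + "\r\n\r\n" + json;
    }

    // Builds a go-to-definition request; line and character are zero-based in LSP.
    // No JSON escaping is done, so the URI must not contain quotes or backslashes.
    static String definitionRequest(int id, String uri, int line, int character) {
        return "{\"jsonrpc\":\"2.0\",\"id\":" + id
                + ",\"method\":\"textDocument/definition\",\"params\":{"
                + "\"textDocument\":{\"uri\":\"" + uri + "\"},"
                + "\"position\":{\"line\":" + line + ",\"character\":" + character + "}}}";
    }

    public static void main(String[] args) {
        String request = definitionRequest(1, "file:///project/src/Main.scala", 10, 4);
        System.out.print(frame(request));
    }
}
```

Counting bytes rather than characters matters as soon as a document contains non-ASCII text, since a single character can take several bytes in UTF-8.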

An important milestone in this area is the release of Metals 1.0, a mature language server for Scala developed through collaboration between many members of the Scala community. Beyond the standard LSP features, Metals 1.0, code-named Silver, adds support for multi-root projects, running Scalafix rules and better support for the Scala CLI. It supports the latest versions of Scala (Scala 3.3.0, 2.12 and 2.13).

And finally, the year the Java ecosystem jumped on the AI-related hype wagon

Frankly, I suspect no one expected this much news, and these are only the more interesting items.

langchain4j

langchain4j is a Java library inspired by LangChain, a framework created by Harrison Chase for working with Large Language Models (LLMs) such as OpenAI’s GPT-3, GPT-3.5 and GPT-4. LangChain was launched in late October 2022 and is designed for building production applications based on LLMs rather than just for experimentation. It provides building blocks such as templates for different types of prompts, integrations with various LLMs, and agents that use LLMs for decision-making. It also introduces the concepts of short-term and long-term memory.
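
‘Short-term memory’ here usually means keeping a bounded window of recent messages and sending it along with every request, since the model itself is stateless. The sketch below illustrates that idea with a hypothetical WindowChatMemory class; langchain4j ships its own chat-memory implementations (including a message-window one), but this is not its API.

```java
import java.util.ArrayDeque;
import java.util.Deque;
import java.util.List;

// Hypothetical message type: who said it and what was said.
record ChatMessage(String role, String text) {}

// Keeps only the most recent maxMessages messages (a sliding window).
class WindowChatMemory {
    private final int maxMessages;
    private final Deque<ChatMessage> messages = new ArrayDeque<>();

    WindowChatMemory(int maxMessages) {
        if (maxMessages < 1) {
            throw new IllegalArgumentException("maxMessages must be at least 1");
        }
        this.maxMessages = maxMessages;
    }

    void add(ChatMessage message) {
        messages.addLast(message);
        // Evict the oldest messages once the window is full.
        while (messages.size() > maxMessages) {
            messages.removeFirst();
        }
    }

    // Snapshot of the window, oldest first, ready to be sent with the next prompt.
    List<ChatMessage> messages() {
        return List.copyOf(messages);
    }

    public static void main(String[] args) {
        WindowChatMemory memory = new WindowChatMemory(2);
        memory.add(new ChatMessage("user", "Hi, I'm Ann."));
        memory.add(new ChatMessage("assistant", "Hello Ann!"));
        memory.add(new ChatMessage("user", "What's my name?"));
        memory.messages().forEach(m -> System.out.println(m.role() + ": " + m.text()));
    }
}
```

With a window of two, the user’s introduction is already gone by the third turn, which shows the basic trade-off between window size (and thus token cost) and how much the model ‘remembers’.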

Semantic Kernel for Java

Microsoft also has its own LangChain-like SDK, Semantic Kernel (SK), which makes it possible to integrate large language models (LLMs) into applications written in conventional programming languages, including Java. The SDK combines natural language, traditional code and embedding-based memory into an extensible programming model. In practice, Semantic Kernel for Java lets developers integrate AI services such as Azure OpenAI into their applications using a so-called skill-based model. Developers can also use Planners, which rely on generative AI to combine skills into plans for carrying out complex tasks.
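
Stripped to its bones, the skill-based model means registering named functions and then executing a plan, i.e. a sequence of those functions. In Semantic Kernel some skills are prompts sent to an LLM and the plan itself can be produced by a Planner; in the hypothetical sketch below (SkillRegistry is not SK’s API), all skills are plain Java functions, the plan is hard-coded, and each step’s output simply feeds the next.

```java
import java.util.HashMap;
import java.util.List;
import java.util.Locale;
import java.util.Map;
import java.util.function.UnaryOperator;

// Hypothetical registry of "skills": named text-to-text functions.
class SkillRegistry {
    private final Map<String, UnaryOperator<String>> skills = new HashMap<>();

    void register(String name, UnaryOperator<String> skill) {
        skills.put(name, skill);
    }

    // Executes a plan (an ordered list of skill names), piping each output into the next step.
    String execute(List<String> plan, String input) {
        String current = input;
        for (String step : plan) {
            UnaryOperator<String> skill = skills.get(step);
            if (skill == null) {
                throw new IllegalArgumentException("Unknown skill: " + step);
            }
            current = skill.apply(current);
        }
        return current;
    }

    public static void main(String[] args) {
        SkillRegistry registry = new SkillRegistry();
        registry.register("trim", String::strip);
        registry.register("shout", s -> s.toUpperCase(Locale.ROOT) + "!");
        // In Semantic Kernel, a Planner could ask an LLM to produce this list from a goal.
        List<String> plan = List.of("trim", "shout");
        System.out.println(registry.execute(plan, "  hello kernel  ")); // prints HELLO KERNEL!
    }
}
```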

Spring AI

Spring AI is a new project that aims to bridge advanced AI models (especially GPT variants) and the patterns typically used in Spring application development. It draws inspiration from LangChain, LlamaIndex and Semantic Kernel, aiming to give Spring developers a similar AI experience. It introduces a common API for interacting with models, abstractions for prompts (which are key to communicating with AI), and parsers that convert model responses into plain POJOs. In addition, recognising the risks of handling sensitive data with LLMs, it integrates with vector databases, which let applications supply their own data to models at query time without re-training them.
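
Output parsing boils down to two steps: tell the model, as part of the prompt, exactly which format to answer in, then map that text onto a Java type. The sketch below shows the idea with a hypothetical MovieRecommendation record and a simple line-based format; it is not Spring AI’s API, whose parsers target structured formats such as JSON.

```java
import java.util.HashMap;
import java.util.Locale;
import java.util.Map;

// Hypothetical target type for the model's answer.
record MovieRecommendation(String title, int year) {}

class MovieOutputParser {
    // Format instructions appended to the prompt so the reply is machine-readable.
    static String formatInstructions() {
        return "Reply with exactly two lines:\ntitle: <movie title>\nyear: <release year>";
    }

    // Maps "key: value" lines of the reply onto the record. Splits on the first colon
    // only, so titles containing colons survive; lines without a colon are ignored.
    static MovieRecommendation parse(String reply) {
        Map<String, String> fields = new HashMap<>();
        for (String line : reply.strip().split("\\R")) {
            int colon = line.indexOf(':');
            if (colon < 0) {
                continue; // skip chatter that does not follow the format
            }
            String key = line.substring(0, colon).strip().toLowerCase(Locale.ROOT);
            fields.put(key, line.substring(colon + 1).strip());
        }
        String title = fields.get("title");
        String year = fields.get("year");
        if (title == null || year == null) {
            throw new IllegalArgumentException("Reply does not match the expected format: " + reply);
        }
        return new MovieRecommendation(title, Integer.parseInt(year));
    }

    public static void main(String[] args) {
        System.out.println(formatInstructions());
        System.out.println(parse("title: Oppenheimer\nyear: 2023"));
    }
}
```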

quarkus-langchain4j

The quarkus-langchain4j 0.1 extension is a significant step towards a more intuitive and efficient use of artificial intelligence in software development. Built in collaboration with Dmytro Liubarskyi and the LangChain4j team, the extension focuses on integrating LLMs into Quarkus applications.

JVector

Vector databases play an important role in modern AI applications: they extend the knowledge available to models and help deliver accurate answers, reducing the risk of errors or AI ‘hallucinations’. JVector, written in pure Java, is an embeddable vector search engine that powers DataStax Astra and integrates with Apache Cassandra. It offers significant improvements over vector search in Apache Lucene: thanks to the DiskANN algorithm, it is reported to be more than 10 times faster than Lucene on large datasets. It is designed for simple integration while maintaining high performance, using Project Panama’s Vector API to take advantage of SIMD instructions.
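
At its core, vector search means finding the stored embeddings most similar to a query embedding, often measured by cosine similarity. The brute-force sketch below computes that exactly by comparing the query with every stored vector. It is a swapped-in illustration, not DiskANN, which is an approximate, graph-based index designed to avoid this linear scan on large datasets. The three-dimensional vectors in main are made up; real embeddings have hundreds or thousands of dimensions.

```java
import java.util.ArrayList;
import java.util.List;

class ExactVectorSearch {
    // Cosine similarity: dot(a, b) / (|a| * |b|). Undefined (NaN) for zero vectors.
    static double cosine(float[] a, float[] b) {
        if (a.length != b.length) {
            throw new IllegalArgumentException("Dimension mismatch");
        }
        double dot = 0, normA = 0, normB = 0;
        for (int i = 0; i < a.length; i++) {
            dot += a[i] * b[i];
            normA += a[i] * a[i];
            normB += b[i] * b[i];
        }
        return dot / (Math.sqrt(normA) * Math.sqrt(normB));
    }

    // Brute-force top-k: scores every stored vector, then sorts by similarity (best first).
    static List<Integer> topK(List<float[]> vectors, float[] query, int k) {
        double[] scores = new double[vectors.size()];
        List<Integer> ids = new ArrayList<>();
        for (int i = 0; i < vectors.size(); i++) {
            scores[i] = cosine(vectors.get(i), query);
            ids.add(i);
        }
        ids.sort((x, y) -> Double.compare(scores[y], scores[x]));
        return new ArrayList<>(ids.subList(0, Math.min(k, ids.size())));
    }

    public static void main(String[] args) {
        // Made-up 3-dimensional "embeddings".
        List<float[]> docs = List.of(
                new float[] {0.9f, 0.1f, 0.0f},
                new float[] {0.0f, 1.0f, 0.0f},
                new float[] {0.7f, 0.0f, 0.7f});
        float[] query = {1.0f, 0.0f, 0.0f};
        System.out.println(topK(docs, query, 2)); // prints [0, 2]
    }
}
```

Each query here costs one pass over all n vectors of dimension d, which is exactly the cost that approximate indexes trade a little accuracy to avoid.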

Jlama

Jlama is an LLM inference engine compatible with models such as Llama, Llama 2 and GPT-2, as well as the Hugging Face SafeTensors model format. It requires Java 20 to run, as it uses the aforementioned Vector API for the fast vector calculations needed during model inference.

llama2.java

llama2.java is a direct port of llama2.scala, which in turn is based on llama2.c created by Andrej Karpathy. The project aims to serve as a testbed for new Java features, and also to compare the performance of GraalVM against the C version; the relevant benchmarks are available in the repository. To run it, you need JDK 20+, which provides the MemorySegment and Vector APIs the project relies on.
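
Much of the work in llama2.c-style inference is matrix-vector multiplication, repeated for every layer and every generated token. The scalar version below follows the layout of llama2.c’s matmul (a d×n weight matrix stored row-major in a flat array); projects like llama2.java and Jlama speed up inner loops of this kind with the Vector API, processing several floats per instruction. It is deliberately kept scalar here so it runs on any JDK without --add-modules jdk.incubator.vector.

```java
import java.util.Arrays;

class MatVec {
    // xout = W * x, where W has d rows and n columns stored row-major in a flat array,
    // mirroring the layout of llama2.c's matmul(xout, x, w, n, d).
    static void matmul(float[] xout, float[] x, float[] w, int n, int d) {
        for (int i = 0; i < d; i++) {
            float sum = 0f;
            int rowStart = i * n;
            // This inner dot product is the hot loop that SIMD code vectorises.
            for (int j = 0; j < n; j++) {
                sum += w[rowStart + j] * x[j];
            }
            xout[i] = sum;
        }
    }

    public static void main(String[] args) {
        float[] w = {1, 2, 3, 4, 5, 6}; // 3x2 matrix: rows (1,2), (3,4), (5,6)
        float[] x = {1, 1};
        float[] out = new float[3];
        matmul(out, x, w, 2, 3);
        System.out.println(Arrays.toString(out)); // prints [3.0, 7.0, 11.0]
    }
}
```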

Amazon Q Code Transformation

Amazon Q, the AI assistant available for Visual Studio Code and IntelliJ to users with a CodeWhisperer Professional licence, introduces a new feature called Code Transformation. It enables intelligent, large-scale refactoring, automating repetitive programming tasks. For now, Amazon Q focuses on upgrading Java applications, transforming code from Java 8 or Java 11 to Java 17. The feature is currently in preview, which comes with some limitations – for example, it only supports Maven-based projects.

SD4J (Stable Diffusion in Java)

Oracle Open Source has released SD4J, a Java implementation of Stable Diffusion inference running on the ONNX Runtime. It is a modified version of a C# implementation, extended with a GUI for repeated image generation and support for negative prompts. The project aims to demonstrate how to use the ONNX Runtime from Java and to showcase performance best practices for it.

Hopefully, when it comes to the JVM ecosystem, 2024 will be just as fascinating as 2023.

PS: If you’ve made it all the way here, it means you probably enjoyed it 🥂 So if you know anyone who might find JVM Weekly useful – drop them this newsletter in early 2024!