Today’s a bit out of the ordinary. Instead of memes, I’ve got a collection of video clips for you. Interestingly, I came across some truly captivating ones to share.

1. Panama, OpenCL and TornadoVM: Java’s entry into the GPU world.

Today, we will begin with the box of chocolates we got, which I will be slowly unveiling in upcoming editions. This is a process I’ve already initiated by sharing details about the Leyden Project a week ago.

JVM Language Summit (JVMLS) is a yearly gathering that unites the best of the JVM universe: language creators, compiler and tooling developers, and engineers of the wider ‘runtime environment’. They come together to discuss current enhancements and future ideas for JVM development. Despite the guest list being relatively specific, the communication is quite transparent, with more and more lectures gradually becoming available. This provides us with a fascinating look into their work, including behind-the-scenes aspects. I don’t have the room to detail all of them here, but I plan to cover more topics in future editions, slowly reviewing the individual recordings myself. As previously mentioned, we’ve already discussed Project Leyden in a past edition. Today, we’re tackling a topic I’m particularly interested in, but rarely get the chance to talk about – adapting the JVM platform to work with the GPU.

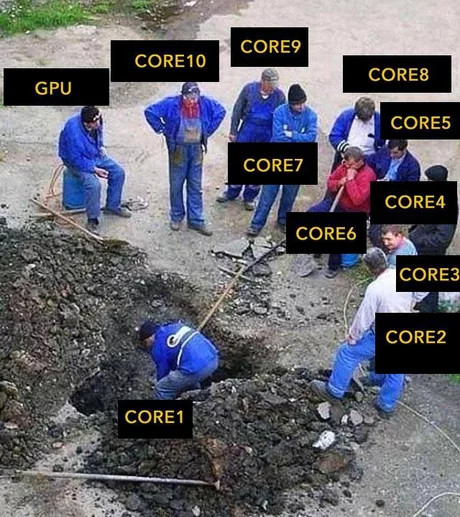

In his talk titled Java and GPU … are we nearly there yet?, Gary Frost, an Architect at Oracle, shared insights on bringing Java and GPUs together. He focused on the hurdles, and the new development opportunities, that come with supporting GPU workloads. First, the hardware basics: while a traditional CPU has at most dozens of cores, a single GPU can provide thousands of them (based on a core-per-pixel model). This makes the GPU naturally equipped to handle millions of simultaneous tasks. Initially, these cores were primarily used for pixel shading in graphics rendering. However, once developers recognized the immense computational power of the GPU, they started using it for various other tasks, including Machine Learning and Artificial Intelligence. The strategies used so far are explained in an easy-to-understand manner in the article Programming the GPU in Java. However, this article was published in January 2020, and the platform has since evolved.

Frost’s presentation highlights the significance of Project Panama. He acknowledges past difficulties, such as the constraints of the JVM itself and attempts to mask the intricacy of GPU programming with Java APIs (because it always works ekh, ekh… RPC). Frost suggests a more transparent method. He presents the idea of a ‘heterogeneous acceleration toolkit’, which is based on Project Panama and would employ an abstraction known as NDRange. The concept originates from OpenCL, a framework designed for developing programs that run on heterogeneous platforms composed of various hardware, providing abstractions over different kinds of processing. An NDRange is essentially a set of work units that can be arbitrarily allocated over the available hardware resources and processed, and that can be grouped into work-groups to improve the performance of the entire process. This abstraction is highly adaptable, enabling developers to utilize the power of multiple computing units for a range of tasks, hence the suggestion to incorporate it into the JVM.
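To make the NDRange idea a bit more tangible, here is a minimal CPU-only sketch of a one-dimensional range split into work-groups. It only emulates the model with parallel streams; the names `NdRangeSketch`, `Kernel`, `dispatch` and `vectorAdd` are my own illustrations and are not part of OpenCL, Panama, or anything Frost showed.

```java
import java.util.Arrays;
import java.util.stream.IntStream;

/** CPU-only emulation of a one-dimensional NDRange; all names are illustrative, not a real GPU API. */
class NdRangeSketch {

    /** A kernel body: invoked once per work unit with its global, group and local index. */
    interface Kernel {
        void run(int globalId, int groupId, int localId);
    }

    /** Splits globalSize work units into work-groups of localSize and runs the groups in parallel. */
    static void dispatch(int globalSize, int localSize, Kernel kernel) {
        if (localSize <= 0 || globalSize % localSize != 0) {
            throw new IllegalArgumentException("globalSize must be a multiple of localSize");
        }
        int groups = globalSize / localSize;
        IntStream.range(0, groups).parallel().forEach(groupId -> {
            // Units inside one group run sequentially here; on a GPU they would run side by side.
            for (int localId = 0; localId < localSize; localId++) {
                int globalId = groupId * localSize + localId;
                kernel.run(globalId, groupId, localId);
            }
        });
    }

    /** Element-wise vector addition expressed as a kernel over the range. */
    static float[] vectorAdd(float[] a, float[] b, int localSize) {
        float[] c = new float[a.length];
        // Every work unit writes to its own index, so no synchronization is needed.
        dispatch(a.length, localSize, (globalId, groupId, localId) -> c[globalId] = a[globalId] + b[globalId]);
        return c;
    }

    public static void main(String[] args) {
        float[] a = {1f, 2f, 3f, 4f, 5f, 6f, 7f, 8f};
        float[] b = {10f, 20f, 30f, 40f, 50f, 60f, 70f, 80f};
        System.out.println(Arrays.toString(vectorAdd(a, b, 4)));
    }
}
```

The point of the abstraction is that the kernel only knows its indices, so the runtime is free to decide where each group actually runs.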

In the second part of the presentation, Frost spent considerable time discussing TornadoVM, which had its own exclusive talk: From CPU to GPU and FPGAs: Supercharging Java Applications with TornadoVM, delivered by Juan Fumero, the creator of the project.

TornadoVM is a platform specifically designed to allow Java applications to run efficiently on various hardware platforms, such as GPUs, FPGAs, and multi-core systems. It achieves this by converting Java bytecode into OpenCL, ensuring that Java applications can utilize the computational power of specialized hardware without requiring hardware-specific modifications. This provides developers with a consistent and efficient environment for their applications. TornadoVM is integrated with GraalVM: it uses GraalVM’s JIT compiler, which is capable of transforming Java bytecode into native machine code, in its own translation process, and so benefits from the advanced optimizations GraalVM provides.

The presentation largely revolved around the future strategy and development path for TornadoVM. The team is considering incorporating ready-made libraries from industry leaders like NVIDIA, AMD, and Intel to improve TornadoVM’s user-friendliness and performance. In this regard, ‘library tasks’, designed for easier execution of native function calls, are a significant new feature. In line with the theme of Frost’s presentation, Panama integration is viewed as a potential answer to issues such as data transfer between heap and off-heap memory, and to better management of Garbage Collector activity to support the way AI frameworks use the platform. There have also been suggestions to investigate options for sharing the Java heap between CPU and GPU. Finally, a commitment to collaborate closely with the Graal Core Team has been declared.

This is just an appetizer, though: more presentations are still to come, and I think somewhere around JDK 21 it will be worth reviewing the material on virtual threads, which, as you may have guessed, was one of the important topics at the event.

2. Spring I/O brings the announcement of Spring AI

Staying on the topic of things I’ll take time to unpack, Spring I/O happened last week. Regrettably, we don’t have a lot of content available yet (other than the standard recap by Josh Long, Spring Dev Advocate), but some information has already seeped into public awareness, including Spring AI. Let’s delve into what’s behind the name.

Spring AI is a fresh initiative launched by Mark Pollack, aiming to act as a conduit between the sophisticated features of pre-trained AI models and the conventional application writing patterns of Spring. A key aspect of this integration is the effortless interaction with models, particularly GPT variants.

Java’s role in the artificial intelligence market has traditionally been small, largely due to the prevalence of C/C++ algorithms (which Panama is intended to address) and Python’s dominance in the ML/AI ecosystem. However, the creation of publicly accessible generative models that enable interaction with pre-trained models via HTTP (like GPT) has significantly lessened the dependency on specific languages. This shift is opening up opportunities for languages like Java to gain more prominence in the artificial intelligence sphere. Taking cues from libraries such as LangChain, LlamaIndex, and Semantic Kernel, the Spring AI project aims to provide a comparable AI experience for Spring developers. This also aligns well with the trend highlighted in the previous section, complementing the running of models on the JVM itself.

The Spring AI project introduces many features similar to those in LangChain. These key features include a common API for AI model interactions and the development of ‘prompts’, which are crucial for AI model communication. Parsers are available to transform raw AI responses into structured POJO-type formats. The project also highlights the significance of handling sensitive data in LLMs, providing methods to utilize it without the need to incorporate it in the model retraining process. This is achieved by enabling integration with vector databases, simplifying the process of working with AI models.
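To picture what ‘prompts’ and output parsers boil down to, here is a small plain-Java sketch. It does not use Spring AI at all: `PromptSketch`, `BookSummary`, `render` and `parse` are hypothetical names I made up, and the ‘model reply’ is a hard-coded string rather than a real call.

```java
import java.util.LinkedHashMap;
import java.util.Map;

/** Plain-Java illustration of a prompt template and an output parser; none of these are Spring AI types. */
class PromptSketch {

    /** Hypothetical POJO that a parsed model response is mapped onto. */
    static final class BookSummary {
        final String title;
        final int year;

        BookSummary(String title, int year) {
            this.title = title;
            this.year = year;
        }
    }

    /** Replaces {name} placeholders in the template with the supplied values. */
    static String render(String template, Map<String, String> values) {
        String result = template;
        for (Map.Entry<String, String> e : values.entrySet()) {
            result = result.replace("{" + e.getKey() + "}", e.getValue());
        }
        return result;
    }

    /** Parses a raw "key: value" response, one pair per line, into a BookSummary. */
    static BookSummary parse(String rawResponse) {
        Map<String, String> fields = new LinkedHashMap<>();
        for (String line : rawResponse.split("\\R")) {
            int colon = line.indexOf(':');
            if (colon > 0) {
                fields.put(line.substring(0, colon).trim().toLowerCase(), line.substring(colon + 1).trim());
            }
        }
        return new BookSummary(fields.get("title"), Integer.parseInt(fields.get("year")));
    }

    public static void main(String[] args) {
        String prompt = render("Describe the book {book} as 'Title' and 'Year' lines.", Map.of("book", "Example Book"));
        System.out.println(prompt);
        // Stand-in for a model reply; no real model is called here.
        BookSummary summary = parse("Title: Example Book\nYear: 2020");
        System.out.println(summary.title + " (" + summary.year + ")");
    }
}
```

A real library would add validation and richer formats such as JSON, but the shape is the same: a template goes in, text comes back, and a parser turns it into a typed object.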

The project emphasizes the idea of ‘Chains’ to create a series of AI interactions from a single input. Other crucial elements are ‘Memory’, which is used to remember past interactions, and ‘Agents’ that employ AI models to dynamically retrieve data and produce responses. This all appears extremely intriguing, and I am certainly going to explore it, especially since I recently experimented with Semantic Kernel.
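For a rough feeling of how ‘Chains’ and ‘Memory’ fit together, consider the sketch below. Again, this is only an illustration in plain Java under my own assumptions: `ChainSketch`, `Memory` and `runChain` are hypothetical names, and each step is an ordinary function standing in for a model call.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Function;

/** Illustrative chain of text-processing steps with a simple memory; not the Spring AI API. */
class ChainSketch {

    /** Memory: keeps the input and every intermediate output in order. */
    static final class Memory {
        private final List<String> history = new ArrayList<>();

        void remember(String entry) {
            history.add(entry);
        }

        /** Returns a copy so callers cannot modify the stored history. */
        List<String> history() {
            return new ArrayList<>(history);
        }
    }

    /** Runs the steps in order, feeding each output into the next step and recording it in memory. */
    static String runChain(String input, List<Function<String, String>> steps, Memory memory) {
        String current = input;
        memory.remember("input: " + current);
        for (Function<String, String> step : steps) {
            current = step.apply(current);
            memory.remember("output: " + current);
        }
        return current;
    }

    public static void main(String[] args) {
        List<Function<String, String>> steps = new ArrayList<>();
        steps.add(s -> s.trim());            // stand-in for a "clean up the question" step
        steps.add(s -> s.toUpperCase());     // stand-in for a model call
        steps.add(s -> "Summary: " + s);     // stand-in for a "format the answer" step

        Memory memory = new Memory();
        System.out.println(runChain("  spring ai  ", steps, memory));
        memory.history().forEach(System.out::println);
    }
}
```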

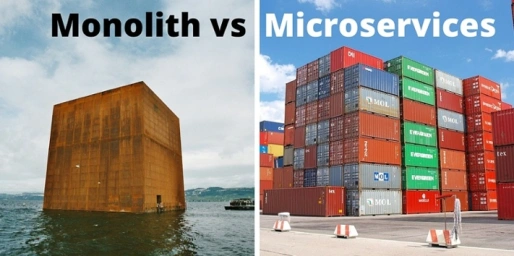

Around the time of Spring I/O (actually a bit earlier), a stable version of another unusual yet intriguing Spring project was unveiled. It responds to the growing demand in the developer community for a comeback of the monolith, albeit in a somewhat revamped form. Advocates of this approach argue that with current tools and effective modularisation, we can develop a codebase that has many of the benefits of microservices with surprisingly fewer drawbacks. For those interested, I recommend the concept of the Majestic Monolith by DHH, as well as the fascinating series Modular Monolith: A Primer.

Well, how does this relate to Spring? The team recently released Spring Modulith 1.0, which aims to usher Spring into the era of the modular monolith. Spring Modulith is an enhancement of the existing Moduliths project (which it is set to replace), but it’s even more closely integrated with Spring itself. What’s particularly intriguing is how it operates: rather than heavily interfering with the build process, it uses integration tests to verify the project. In this way, it functions similarly to ArchUnit, a library designed specifically to check dependencies between individual modules, whose assertions are, incidentally, compatible with it. The beauty of Modulith lies in its ability to pre-configure the relevant verification rules (including those of ArchUnit) because it knows the runtime environment it targets (Spring Boot 3.0 applications). This makes it easy for us to check whether any architectural spaghetti has slipped through Code Review while we are still at the application build stage.
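The idea of catching architectural violations in a test can be shown with a toy example. The sketch below is not the Spring Modulith or ArchUnit API; `ModuleRulesSketch` and `violations` are hypothetical names, and the module graph is hard-coded instead of being discovered from real packages.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.Map;
import java.util.Set;

/** Toy architecture check in the spirit of a module-verification test; not a real library API. */
class ModuleRulesSketch {

    /** Returns one sorted "from -> to" entry per dependency a module uses without being allowed to. */
    static List<String> violations(Map<String, Set<String>> allowed, Map<String, Set<String>> actual) {
        List<String> problems = new ArrayList<>();
        for (Map.Entry<String, Set<String>> e : actual.entrySet()) {
            Set<String> permitted = allowed.getOrDefault(e.getKey(), Set.<String>of());
            for (String target : e.getValue()) {
                if (!permitted.contains(target)) {
                    problems.add(e.getKey() + " -> " + target);
                }
            }
        }
        Collections.sort(problems); // deterministic order for readable test failures
        return problems;
    }

    public static void main(String[] args) {
        // Declared rules: "order" may use "inventory", but not the other way round.
        Map<String, Set<String>> allowed = Map.of("order", Set.of("inventory"));
        // Dependencies found in the (hypothetical) codebase.
        Map<String, Set<String>> actual = Map.of(
                "order", Set.of("inventory"),
                "inventory", Set.of("order"));

        List<String> problems = violations(allowed, actual);
        if (!problems.isEmpty()) {
            System.out.println("Module violations: " + problems);
        }
    }
}
```

In a real project such a check would live in an integration test and fail the build, which is exactly why the spaghetti gets caught before it reaches production.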

3. Release Radar

Grails 6

This time, the announcement is a bit delayed, as Grails 6.0 had its debut some time ago. However, as the saying goes – it’s better late than never. Grails is a web application development framework that uses Groovy and is based on the once immensely popular Rails. Although the enthusiasm for this framework and Ruby in general has somewhat diminished, resulting in fewer new projects being initiated in Grails, it’s still worthwhile to explore what the new version of the framework has to offer.

The highlight of this release is the introduction of the new Grails Forge UI. This feature allows developers to manage projects written in the framework more effectively. It provides a simplified process for starting projects, intuitive navigation, real-time validation, visual dependency management, and a responsive design. In addition, Grails has enhanced its relationship with Micronaut by making it easier to use Micronaut beans in Grails components and utilizing Micronaut’s HTTP client for simpler interactions with the REST API. Grails 6 has also set JDK 11 as the minimum version, enabling the framework to utilize the new features in its internals. However, these are not the last changes – plans are already in progress to ensure a seamless transition to Java 17 in the forthcoming major release.

Testcontainers Desktop Free Application

The Testcontainers project has grappled with the question of “how to ensure tests start quickly” since its beginning. Numerous concepts have been devised over time for different features, including persistent ports and reusable stable containers. While some of these features have seen limited implementation, Testcontainers Cloud has so far been the most effective solution to this problem. However, for most of us who still prefer running tests locally and are not willing to pay for the privilege, Testcontainers Desktop, a fully local iteration of Testcontainers Cloud Desktop, has now been developed.

The launched version provides functionalities like establishing fixed ports, the option to freeze containers for debugging purposes, and offers abstractions over different containerisation runtimes, including my darling Podman. It also serves as a form of freemium version, as those who opt to test it receive access to a 300-minute trial version of Testcontainers Cloud.

If you’re curious about its practical application, the subsequent post Joyful Quarkus Application Development using Testcontainers Desktop offers an excellent guide on using the new feature, taking Quarkus as an example.

Camel 4.0

For those who haven’t engaged with the project, Apache Camel is a robust piece of enterprise software, rooted in a group of patterns referred to as Enterprise Integration Patterns. You should anticipate a significant amount of XML, even though Camel has undergone substantial upgrades since I last used it. It simplifies the integration of diverse systems, enabling them to share data effectively without the need for dedicated, repetitive integration code. Additionally, it accommodates various transports and data formats, making it a fascinating tool if your business is transitioning into the Enterprise world.

Apache Camel 4.0 primarily involves the transition from javax to jakarta, which you may be familiar with. It adapts to the updates of Jakarta EE 10 and increases the minimum Java version to JDK 17. Those who can’t upgrade will have to remain on Camel 3.x. Additionally, it removes the compulsory dependencies on JAXB. The update is also designed to integrate smoothly with Spring Framework 6, Spring Boot 3, and Quarkus 3. This release combines Camel Core and Camel Spring Boot, indicating the direction of development, and incorporates Camel Karaf as a subcomponent of Apache Karaf. However, there are a few deprecations, such as in camel-cdi and support for JUnit 4.

Gradle 8.3

Gradle 8.3 has been released, bringing a bunch of improvements. A notable change is the optimization of Java compilation through the use of daemons that warm up after a few uses, which can cut compilation time by up to 30%. On the configuration side, the configuration cache, which stores the results of the configuration phase, has been improved, speeding up builds.

Another enhancement includes complete (at last) compatibility with Java 20. In the past, although Gradle 8.1 simplified the process of compiling and testing with Java 20, it didn’t facilitate running Gradle on this JDK version. This resulted in some projects needing an older JDK version solely for the build tool. The update also brings advancements to the CodeNarc plugin and better support for the latest Maven tools.

Fans of Kotlin will also find joy in this version. Kotlin DSL has been further enhanced, offering features like auto-completion and easy access to documentation. As of this release, Kotlin DSL is the default for new Gradle projects. Several other updates have been implemented, such as support for the K2 compiler and the use of the embeddedKotlin() function for plugins.

Compose Multiplatform 1.5.0

Finally, we have Kotlin Multiplatform in the UI edition! In other words, JetBrains’ popular Compose framework.

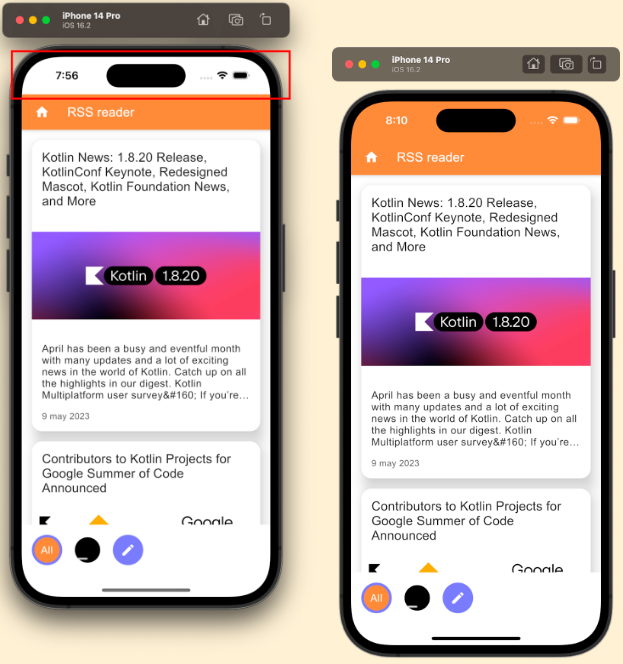

Compose Multiplatform 1.5.0 has brought stability to the Desktop version, making UI testing easier and improving interoperability with Swing. The iOS version has been released as an alpha, featuring significant enhancements like improved scrolling, Dynamic Type support, and better support for high refresh rate displays. However, those interested in the web version are advised to wait, as it is still experimental. The latest release is built on Jetpack Compose 1.5 and incorporates Material Design 3 version 1.1, introducing several new components, including DatePicker. New features also include code consistency for the Dialog, Popup and WindowInsets components.