This time, the edition will be entirely about video… well, almost entirely.

And I’ll start with my own personal find of recent weeks. Tech Talks Weekly is a new Substack newsletter that aggregates talks from technology conferences. I’m very fond of such sources: although my “To Watch” list on YouTube has grown a bit lately (so far I still feel like I’m winning this duel), my inner FOMO is really enjoying Tech Talks Weekly. At last, I can browse newly published talks in batches, without having to subscribe to every conference channel only to manually hide most of the videos later to reach a YouTube ‘inbox zero’.

The fact that it solves a set of my personal problems (mostly with myself) probably wouldn’t be reason enough to share Tech Talks Weekly with you. However, the newsletter has put together a very interesting ‘special’ edition: a list of all the (starred, more on that in a moment) Java talks of 2023 from notable conferences such as Devoxx, GOTO, Spring I/O and others, sorted by number of views. You probably won’t live long enough to watch it all (sorry for causing an existential crisis), but just scanning the headlines will give you an idea of what the community was discussing in 2023 (not that I encourage stopping at the headlines; I recommend putting on some Venkat Subramaniam over lunch sometime). So we have a lot of Spring, a lot of Virtual Threads, a lot of GraalVM, but also some less obvious topics, like observability issues or Johannes Bechberger showing how to write your own Java profiler in less than 300 lines (my personal favourite of the list). Many of the names at the top of the list repeat, so I recommend looking at the bottom too: you’ll probably find a lot of interesting, non-obvious and undiscovered gems there.

The list doesn’t encompass all presentations since the author focused on a selection of conferences, particularly the more ‘generic’ ones. However, the discussion in the comments indicates that the author also gained from publishing this list, as the community provided him with additional channels to explore.

Since we are talking about conferences, on to FOSDEM: a mere week ago I shared a video about the future of Virtual Threads, and now I have another:

The State of OpenJDK talk is now available on the conference channel. The whole thing is extremely informative and lets us learn many ‘behind the scenes’ details of working on the project, such as the impact of the COVID-19 pandemic on OpenJDK development during the creation of JDK 14. We can also learn how many decisions had to be made as the OpenJDK release model evolved, and how much changed internally during the transition from a ‘major’ release model to a more flexible ‘tip and tail’ model that prioritises feature readiness and stability. Despite the community’s initial scepticism about the move to a six-month cycle, it ultimately proved successful, bringing benefits in terms of workload management, release quality and update frequency. There is also a lot of coverage of the contributions of both the community and large companies, and of the effect that GitHub adoption (even in mirror form) has had on community engagement. Overall, this is a very upbeat talk, and an interesting one for anyone who would like to better understand what goes on in the background of successive JDK releases.

But that’s not the end of today’s video adventure, because I have another one for you, this time concerning the biggest craze of the last year, namely LLMs, or more precisely the support for Tools in LangChain4j.

Small reminder: LangChain itself is an open-source orchestration framework for building applications on top of large language models (LLMs). LangChain acts as a generic abstraction for linking external data sources and computation to LLMs, making it easy to build applications that use the capabilities of language models for a wide range of tasks, from document analysis to code analysis or chatbots of all kinds. LangChain4j, meanwhile, is its Java variant, created by Dmytro Liubarskyi. I’ve been playing with it myself for a while in conjunction with Gemini, and both are really enjoyable to use: LangChain4j as well as Gemini itself, which doesn’t perform as badly as some people have portrayed it recently.

But to the point: last week Ken Kousen published a video, “The Definitive Guide to Tool Support in LangChain4J”, which presents LangChain4J’s capabilities in the context of so-called Tools. Tools allow agents (for this is what applications are called in LangChain nomenclature) to interact with the outside world, for example by making HTTP requests.

The whole thing is worth watching. When I started out with LangChain myself, I had to do a lot of digging around to better understand what possibilities it offers, and here you get everything served on a platter. Ken takes you through the process of developing an app in which the LLM is enhanced with the ability to call a currency conversion API, in order to compare MacBook prices between different local versions of the Amazon shop.
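If you are wondering what a Tool boils down to mechanically, here is a minimal sketch in plain Java. To be clear about the assumptions: this is not LangChain4j code and not Ken's example. The `Tool` annotation, `CurrencyTools`, `ToolRegistry` and the exchange rates are all hypothetical, made up for illustration, and the "model" is replaced by a direct call. The idea it shows is only the general one: annotated methods are discovered by reflection, and a tool call requested by name is dispatched to the matching method.

```java
import java.lang.annotation.ElementType;
import java.lang.annotation.Retention;
import java.lang.annotation.RetentionPolicy;
import java.lang.annotation.Target;
import java.lang.reflect.Method;
import java.util.HashMap;
import java.util.Map;

final class ToolSketch {

    // Hypothetical marker annotation; a real framework ships its own.
    @Retention(RetentionPolicy.RUNTIME)
    @Target(ElementType.METHOD)
    public @interface Tool {
        String value(); // human-readable description that would be sent to the model
    }

    // Hypothetical tool holder. A real tool would call an HTTP API;
    // here the rates are made-up placeholders (US dollars per one unit).
    public static class CurrencyTools {
        private static final Map<String, Double> USD_PER_UNIT =
                Map.of("USD", 1.0, "EUR", 1.25, "PLN", 0.25);

        @Tool("Converts an amount from one currency to another")
        public double convert(double amount, String from, String to) {
            double inUsd = amount * USD_PER_UNIT.get(from);
            return inUsd / USD_PER_UNIT.get(to);
        }
    }

    // Finds @Tool methods by reflection and dispatches calls by method name.
    public static class ToolRegistry {
        private final Map<String, Method> methods = new HashMap<>();
        private final Map<String, Object> owners = new HashMap<>();

        public void register(Object toolHolder) {
            for (Method m : toolHolder.getClass().getDeclaredMethods()) {
                if (m.isAnnotationPresent(Tool.class)) {
                    methods.put(m.getName(), m);
                    owners.put(m.getName(), toolHolder);
                }
            }
        }

        public String describe(String name) {
            return methods.get(name).getAnnotation(Tool.class).value();
        }

        public Object call(String name, Object... args) {
            Method m = methods.get(name);
            if (m == null) {
                throw new IllegalArgumentException("Unknown tool: " + name);
            }
            try {
                return m.invoke(owners.get(name), args);
            } catch (ReflectiveOperationException e) {
                throw new IllegalStateException("Tool call failed: " + name, e);
            }
        }
    }

    public static void main(String[] args) {
        ToolRegistry registry = new ToolRegistry();
        registry.register(new CurrencyTools());
        // In a real agent the model would pick the tool name and arguments.
        Object result = registry.call("convert", 100.0, "EUR", "PLN");
        System.out.println(registry.describe("convert") + " -> " + result);
    }
}
```

In an actual agent, the description and parameter list of each tool are sent to the model, which then decides when to ask for a call; the registry above covers only the last step, executing that request.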

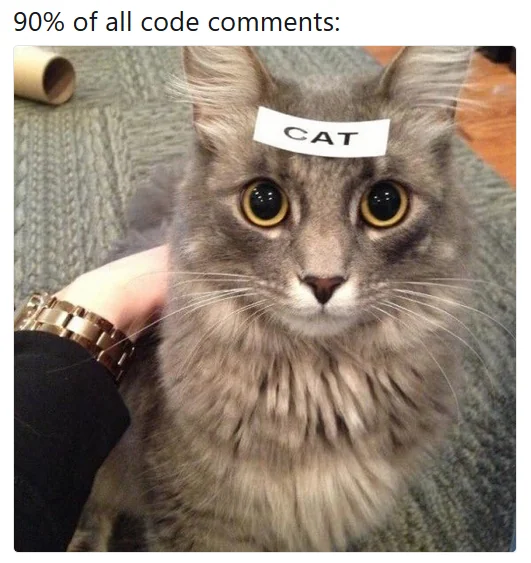

And since we have touched on the topic of LLMs, at the very end I will share a small experiment conducted by Jakub Narloch: automatic generation of comments for the Spring Framework code, using an AI tool called CodeMaker AI. The aim was to test the quality and efficiency of the AI’s documentation generation. The tool processed 5,001 files, adding 173,466 new comment lines, at a cost of less than $100. Processing the entire repository took 371 minutes.

The results of the experiment were surprising even to its creators. It turned out that CodeMaker AI was able to successfully generate comments. Although invalid @deprecated tags appeared in 18 files, and some comments were badly formatted due to project-specific formatting rules, these problems were considered solvable in a relatively short time. The quality of the added comments was reportedly high overall, although Jakub noted the need to further adapt the AI model to improve the accuracy and form of the comments. I’ve flicked through some of the generated docs myself, and as an IDE support tool it even looks interesting. That said, I think I’d prefer such comments to be kept in some external ‘cache’ or index rather than mixed in with the files. I know on-the-fly generation probably isn’t an option (given the time and cost of using LLMs), but it would be worthwhile for readability, because in the current version the code becomes terribly cluttered and verbose.

Either way, the experiment itself is interesting. We’ll see which way the tools evolve for us.

I hope you enjoyed this slightly different, video-based edition. And where did today’s title come from, anyway? Out of nostalgia for better, simpler times, when AI models were used responsibly and in the service of humanity.