Today: the Release Radar, Code Reflection and a summary of the 1BRC initiative. Plus a bit of philosophising on my part.

1. 1BRC, or why do we need Moonshots?

Ground Control to Major Tom

Ground Control to Major Tom

Take your protein pills. And put your helmet on…

We’re going to start with a book today. I’m currently reading the wonderful ‘Chip Wars’ on my e-reader, a behind-the-scenes look at the growth of the microprocessor industry. What makes the book intriguing is that the author doesn’t concentrate on the technology itself (he essentially glosses over it), but instead depicts the economic and social conditions that can turn a technical innovation into a genuine revolution. Sometimes all it takes is a bit of favourable circumstance, particularly real-world challenges, to push intelligent engineers beyond the existing limits of what technology permits. In the industry, we even have a term for this: the so-called ‘moonshots’.

Overall I highly recommend the book, it’s a wonderful read.


The phrase ‘moonshot’ within the realm of technology and innovation originates from the significant accomplishment of the Apollo 11 mission, leading to the first human landing on the moon in 1969. This momentous occasion has become a symbol for ambitious, pioneering, and groundbreaking initiatives aimed at accomplishing what was once deemed unattainable. The core of a moonshot is to concentrate on large, audacious objectives; it involves tackling enormous challenges with uncertain results, employing cutting-edge technology and inventive thought to expand the limits of what can be achieved.

The legacy of the moon landing goes far beyond the historic event itself, affecting numerous aspects of our daily lives. The technologies developed for space exploration have led to many spin-off benefits for society, from advances in telecommunications and computing to materials science and medical technology. For example, miniaturised electronics (hence the initial reference to ‘Chip Wars’), initially developed for space missions, paved the way for the tiny, powerful computing devices we use every day. By pursuing seemingly unattainable goals, moonshot projects not only expand our technological capabilities but also inspire future generations to keep exploring and pushing the boundaries of what is possible.

This is probably why I’m one of the last people still rooting for Meta in their work on the Metaverse. The Metaverse (as crazy as it sounds) has the potential to revolutionise the way we live and work. Imagine virtual meetings with clients, regardless of their location (without the fatigue of sitting in one room all day and hopping from room to room), or visiting museums and art galleries without leaving home. These are cool concepts if we don’t focus solely on the risks (although we can’t forget about them either). And the amount of technology that will need to emerge to make this actually work is downright amazing, and these little revolutions are already happening: just look at how affordable the Meta Quest 3 goggles are relative to what they can do. The lesson to be learned from the aforementioned Chip Wars is that mass adoption is what keeps technology development moving forward. And I continue to believe in VR, even though the whole world is currently making fun of the Apple Vision Pro.

The above image stolen from Ken Kousen and his newsletter Tales of the Jar Side 😃

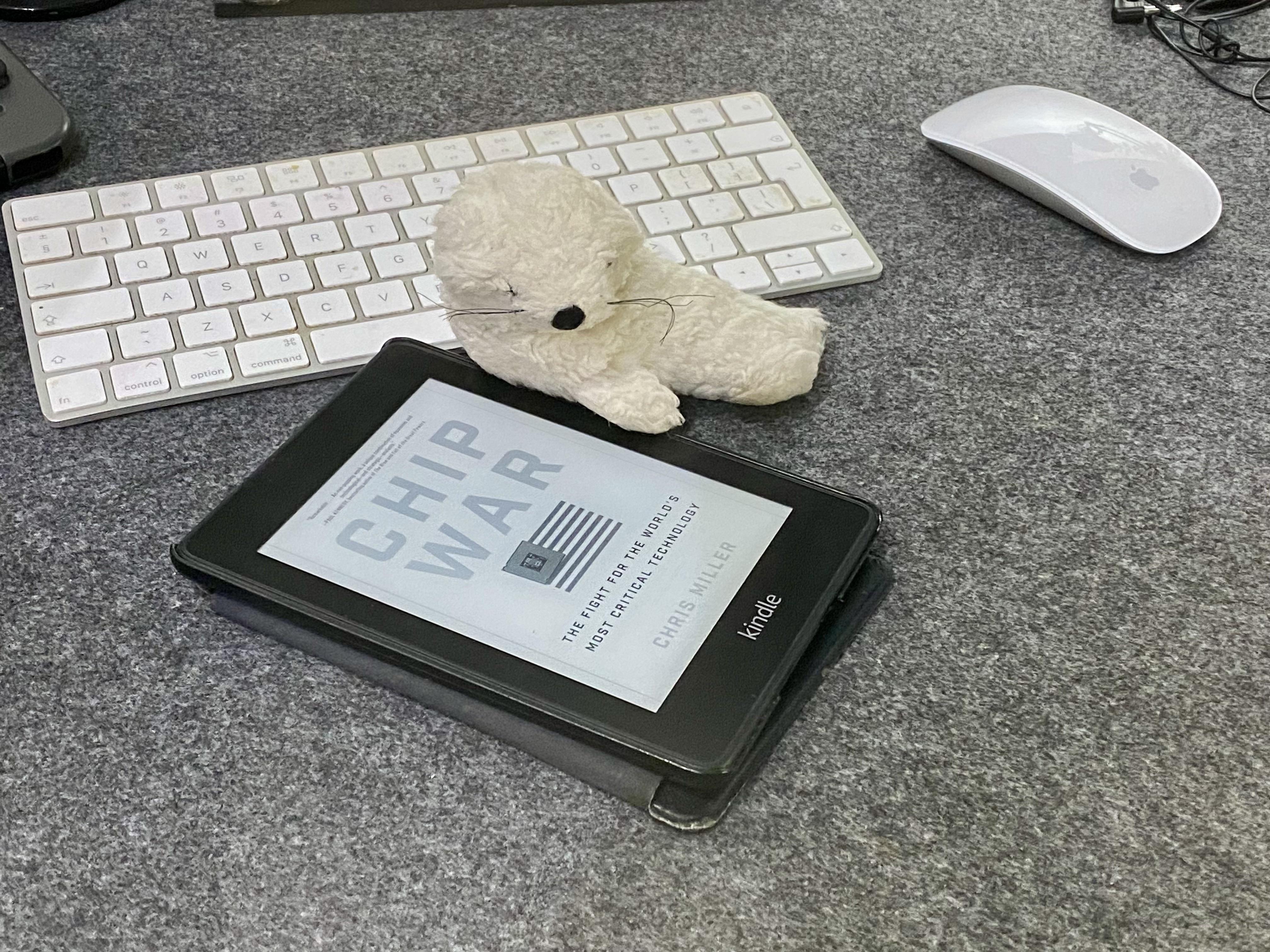

But let’s get to the point, because this is JVM Weekly, not my memoir. It all comes down to the fact that last Thursday saw the announcement of the results of the One Billion Row Challenge, which for me meets many of the criteria for the moonshots described above. We had an ambitious goal, great project leadership from Gunnar Morling and hundreds of top Java developers trying to push the boundaries of what we thought was possible when it comes to the performance of applications written in Java. In the end, the best entries finished well under two seconds, which is truly cosmic territory. I am sure that the results of the competition will be used when planning further development of the JDK.
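For readers who haven’t followed the challenge: the input is a text file with one billion lines of the form `station;temperature` (one fractional digit), and the task is to print the min, mean and max temperature per station, sorted by station name, in a map-like format such as `{Abha=.../.../..., ...}`. The sketch below is a naive baseline, not any contestant’s code; the class and method names are made up, and it assumes the lines are already in memory rather than streamed from the multi-gigabyte file used in the real challenge.

```java
import java.util.List;
import java.util.Locale;
import java.util.Map;
import java.util.TreeMap;

public class NaiveBrc {

    /** Running min/max/sum/count for a single weather station. */
    static final class Stats {
        private double min = Double.POSITIVE_INFINITY;
        private double max = Double.NEGATIVE_INFINITY;
        private double sum;
        private long count;

        void add(double value) {
            min = Math.min(min, value);
            max = Math.max(max, value);
            sum += value;
            count++;
        }

        @Override
        public String toString() {
            // min/mean/max, each printed with one decimal place
            return String.format(Locale.ROOT, "%.1f/%.1f/%.1f", min, sum / count, max);
        }
    }

    /** Aggregates "station;temperature" lines; the TreeMap keeps stations sorted by name. */
    static Map<String, Stats> aggregate(List<String> lines) {
        Map<String, Stats> result = new TreeMap<>();
        for (String line : lines) {
            int sep = line.indexOf(';');
            String station = line.substring(0, sep);
            double temperature = Double.parseDouble(line.substring(sep + 1));
            result.computeIfAbsent(station, k -> new Stats()).add(temperature);
        }
        return result;
    }

    public static void main(String[] args) {
        List<String> sample = List.of("Hamburg;12.0", "Abha;18.5", "Hamburg;-3.4", "Abha;20.1");
        System.out.println(aggregate(sample)); // {Abha=18.5/19.3/20.1, Hamburg=-3.4/4.3/12.0}
    }
}
```

This ignores details such as the exact rounding rules of the challenge. The top entries replace almost every step here (String allocation, `Double.parseDouble`, `TreeMap` lookups) with custom parsing, hand-rolled hash tables and memory-mapped input.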

What’s more, it’s fascinating to see that most of the top solutions use GraalVM native images and sun.misc.Unsafe. The former is an excellent case study for anyone seeking proof of GraalVM’s performance; I’ve personally found it useful in client discussions. As for sun.misc.Unsafe, its value has been demonstrated in our small ‘moonshot’, leaving the next move to the OpenJDK developers. The authors of JEP Draft: Deprecate Memory-Access Methods in sun.misc.Unsafe for Removal likely have a challenging issue to resolve now.

If you’re curious about what black magic has been used, Nicolai Parlog recorded a webinar with the developers of the most efficient solutions. A real dose of knowledge for anyone who wants to learn the most outlandish details of JVM performance.
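To give a flavour of that black magic: several top entries avoided scanning byte by byte for the `;` separator and instead tested eight bytes at once with SWAR (SIMD within a register) bit tricks. The sketch below is a generic version of that idea, not code from any particular entry; the class and method names are made up, and it loads bytes through a `ByteBuffer`, whereas the competition entries read memory directly via `sun.misc.Unsafe`.

```java
import java.nio.ByteBuffer;
import java.nio.ByteOrder;
import java.nio.charset.StandardCharsets;

public class SwarDelimiter {

    private static final long SEMICOLONS = 0x3B3B3B3B3B3B3B3BL; // ';' (0x3B) in every byte
    private static final long ONES = 0x0101010101010101L;
    private static final long HIGH_BITS = 0x8080808080808080L;

    /**
     * Returns the index (0-7) of the first ';' in an 8-byte word that was
     * loaded in little-endian order, or -1 if the word contains no ';'.
     */
    static int firstSemicolon(long word) {
        long x = word ^ SEMICOLONS;                   // bytes equal to ';' become 0x00
        long zeroBytes = (x - ONES) & ~x & HIGH_BITS; // classic "has a zero byte" trick
        return zeroBytes == 0 ? -1 : Long.numberOfTrailingZeros(zeroBytes) >>> 3;
    }

    /** Loads 8 bytes starting at offset as a little-endian long. */
    static long load(byte[] data, int offset) {
        return ByteBuffer.wrap(data, offset, 8).order(ByteOrder.LITTLE_ENDIAN).getLong();
    }

    public static void main(String[] args) {
        byte[] line = "Abha;18.5\n".getBytes(StandardCharsets.UTF_8);
        System.out.println(firstSemicolon(load(line, 0))); // 4
    }
}
```

Little-endian order matters here: the first byte of the line lands in the lowest bits, and since borrows in the subtraction only propagate upwards, bytes after the first match may be flagged spuriously but bytes before it never are, so the lowest flag is always the real separator.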

Personally, I enjoyed most the part where Thomas discusses why the Graal compiler in AOT mode, or the JIT combined with the FFM API, can’t automatically match the performance of manually written sun.misc.Unsafe code. That said, the entire stream is absolutely worth watching; it’s delightful to see how much fun everyone is having.

2. Project Babylon in Practice: Automatic differentiation using Code Reflection

2024 is now well and truly under way. Just two weeks ago, I discussed the new design documents by Brian Goetz on deconstructors and the future of pattern matching. Now we’re ready to delve into the first official text outlining the potential uses of Project Babylon.

The article Automatic differentiation of Java code using Code Reflection by Paul Sandoz discusses the idea and implementation of automatic differentiation, via Code Reflection, of Java methods that represent mathematical functions. The process involves obtaining a symbolic representation of a method, which allows a program to apply differentiation rules and create a new method that computes the derivative. To simplify, the entire concept can be summarised in the following points (note that some steps from the article have been left out for clarity):

The Java method that we aim to differentiate is annotated with @CodeReflection.

```java
@CodeReflection
static double f(double x, double y) {
    return x * (-Math.sin(x * y) + y) * 4.0d;
}
```

At compile time, a symbolic representation known as a code model is created for the annotated method. Then, through Java reflection, we gain access to the method’s code model, which takes the form of a tree.

```java
// T is the class that declares the annotated method f
Method fm = T.class.getDeclaredMethod("f", double.class, double.class);
// getCodeModel() returns an Optional, which is empty if no code model was generated
Optional<CoreOps.FuncOp> o = fm.getCodeModel();
CoreOps.FuncOp fcm = o.orElseThrow();
```

Then the differentiation process itself takes place (I won’t go into it here, it’s heavy maths, so have a look at the original article), and a new code model is created from the differentiated operations, representing the derivative of the method. This differentiated code model is compiled into Java bytecode, which can then be executed to compute the derivative’s values.

The result is the bytecode equivalent of the following methods:

```java
static double df_dx(double x, double y) {
    return (-Math.sin(x * y) + y - x * Math.cos(x * y) * y) * 4.0d;
}

static double df_dy(double x, double y) {
    return x * (1 - Math.cos(x * y) * x) * 4.0d;
}
```
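A quick way to convince yourself that these derivatives are right, without Babylon at hand, is to compare them against a numerical approximation. The sketch below (class and helper names are made up) evaluates the methods above next to a central finite difference, (f(x + h, y) - f(x - h, y)) / 2h, and its counterpart in y; the two should agree to many decimal places.

```java
public class DerivativeCheck {

    // The original function from the article.
    static double f(double x, double y) {
        return x * (-Math.sin(x * y) + y) * 4.0d;
    }

    // Hand-written equivalents of the generated derivatives.
    static double df_dx(double x, double y) {
        return (-Math.sin(x * y) + y - x * Math.cos(x * y) * y) * 4.0d;
    }

    static double df_dy(double x, double y) {
        return x * (1 - Math.cos(x * y) * x) * 4.0d;
    }

    /** Central finite difference of f in x: (f(x + h, y) - f(x - h, y)) / 2h. */
    static double centralDiffX(double x, double y, double h) {
        return (f(x + h, y) - f(x - h, y)) / (2 * h);
    }

    /** Central finite difference of f in y: (f(x, y + h) - f(x, y - h)) / 2h. */
    static double centralDiffY(double x, double y, double h) {
        return (f(x, y + h) - f(x, y - h)) / (2 * h);
    }

    public static void main(String[] args) {
        double x = 0.7, y = -1.3, h = 1e-5;
        System.out.printf("df/dx: analytic=%.8f numeric=%.8f%n", df_dx(x, y), centralDiffX(x, y, h));
        System.out.printf("df/dy: analytic=%.8f numeric=%.8f%n", df_dy(x, y), centralDiffY(x, y, h));
    }
}
```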

This is a relatively simple, but not entirely artificial, example. The article underscores the importance of automatic differentiation in machine learning, where models are trained using simple gradient-based algorithms (such as gradient descent) that require computing gradients. Differentiating by hand is error-prone and laborious, particularly for intricate functions. This makes automatic differentiation an invaluable tool for programmers and showcases the capabilities we’ll soon gain. As I mentioned in the previous issue, Project Babylon has rapidly climbed to the top of my list of the most intriguing projects in the JDK. I promise to keep an eye out for any new announcements related to it.
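To make the machine-learning angle more concrete, here is a minimal sketch of plain gradient descent fitting a line y = w·x + b to a handful of points by minimising the mean squared error. The partial derivatives with respect to w and b are derived by hand, which is exactly the error-prone step that automatic differentiation would take over. The names, data and learning rate are illustrative assumptions, not taken from the article.

```java
public class GradientDescent {

    /** Mean squared error of the line w*x + b over the data set. */
    static double loss(double w, double b, double[] xs, double[] ys) {
        double sum = 0;
        for (int i = 0; i < xs.length; i++) {
            double err = w * xs[i] + b - ys[i];
            sum += err * err;
        }
        return sum / xs.length;
    }

    /**
     * Fits y = w*x + b with plain gradient descent. dL/dw and dL/db are
     * written out by hand; this is what automatic differentiation would generate.
     */
    static double[] fit(double[] xs, double[] ys, double learningRate, int steps) {
        double w = 0, b = 0;
        int n = xs.length;
        for (int step = 0; step < steps; step++) {
            double dw = 0, db = 0;
            for (int i = 0; i < n; i++) {
                double err = w * xs[i] + b - ys[i];
                dw += 2 * err * xs[i] / n; // dL/dw
                db += 2 * err / n;         // dL/db
            }
            // Step against the gradient
            w -= learningRate * dw;
            b -= learningRate * db;
        }
        return new double[] {w, b};
    }

    public static void main(String[] args) {
        double[] xs = {0, 1, 2, 3, 4};
        double[] ys = {1, 3, 5, 7, 9}; // generated from y = 2x + 1
        double[] wb = fit(xs, ys, 0.05, 2000);
        System.out.printf("w=%.4f b=%.4f loss=%.6f%n", wb[0], wb[1], loss(wb[0], wb[1], xs, ys));
    }
}
```

With a real model the loss has thousands or millions of parameters, and keeping a hand-written gradient like the one in `fit` in sync with the model code is precisely what tools built on code models aim to automate.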

3. Release Radar

Pkl

Today’s Release Radar kicks off with something out of the ordinary – a fresh programming language for configuration, courtesy of Apple. This isn’t something you’d typically see on JVM Weekly, right? But rest assured, everything will be clarified shortly.

Pkl (Pickle) is a programming language that is specifically designed for the creation of configurations. It addresses the limitations of static configuration languages like JSON or YAML, which often struggle with complexity and expressivity. Pkl aims to combine the simplicity and declarativeness of static formats with the flexibility of general-purpose programming languages. This enables Pkl to provide classes, functions, conditions, and loops, facilitating the creation of more sophisticated and reusable configuration scripts.

Here is an example that creates three database instances (sidecars) differing only in their port:

```pkl
module Application

class Database {
  username: String
  password: String
  port: UInt16
}

hidden db: Application.Database = new {
  username = "admin"
  password = read("env:DATABASE_PASSWORD")
}

sidecars {
  for (offset in List(0, 1, 2)) {
    (db) {
      port = 6000 + offset
    }
  }
}
```

It generates the following JSON (assuming the DATABASE_PASSWORD environment variable is set to hunter2):

```json
{
  "sidecars": [
    {
      "username": "admin",
      "password": "hunter2",
      "port": 6000
    },
    {
      "username": "admin",
      "password": "hunter2",
      "port": 6001
    },
    {
      "username": "admin",
      "password": "hunter2",
      "port": 6002
    }
  ]
}
```
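For Java developers, the `for` generator combined with the `(db) { port = ... }` amend may be easiest to read as “copy an immutable template, overriding one field”. The sketch below is only a rough Java analogue of what the Pkl snippet expresses, with hypothetical names (`SidecarConfig`, `withPort`); it is not how Pkl is implemented or evaluated.

```java
import java.util.ArrayList;
import java.util.List;

public class SidecarConfig {

    // Mirrors the Pkl class Database (Pkl's UInt16 port is approximated by int).
    record Database(String username, String password, int port) {
        // Analogue of Pkl's "(db) { port = ... }": an immutable copy with one field changed.
        Database withPort(int newPort) {
            return new Database(username, password, newPort);
        }
    }

    // Analogue of "for (offset in List(0, 1, 2))" producing one element per offset.
    static List<Database> sidecars(Database template) {
        List<Database> result = new ArrayList<>();
        for (int offset : List.of(0, 1, 2)) {
            result.add(template.withPort(6000 + offset));
        }
        return result;
    }

    public static void main(String[] args) {
        // Like read("env:DATABASE_PASSWORD"); null here if the variable is unset.
        // The template port 0 is a placeholder, since every sidecar overrides it.
        Database db = new Database("admin", System.getenv("DATABASE_PASSWORD"), 0);
        sidecars(db).forEach(System.out::println);
    }
}
```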

What’s more, Pkl is designed to be scalable, secure and fun to write, with built-in validation mechanisms and proper tooling support.

In other words, it’s yet another tool whose existence lets us hate YAML even more.

So, back to the initial question: why does the tool appear in today’s Weekly? The answer lies in the technology used to build the CLI that accompanies the language. Nowadays it’s increasingly common to use Rust for this kind of application, but here everything was built on the JVM and distributed as a GraalVM native image binary. This is still not a typical setup, and Pkl demonstrates that the technology is applicable not just on the server, but also in CLI applications.

CheerpJ 3.0

CheerpJ is a WebAssembly-based JVM implementation enabling Java applications to operate directly in the browser without requiring plug-ins or Java itself to be installed. It supports the complete range of OpenJDK functionality, thus allowing large, unmodified Java applications and libraries to function in most contemporary browsers. CheerpJ also accommodates old Java applets and Java Web Start applications. I recently had the chance to discuss this in the context of the revival of the old browser-based game Dragon Court.

CheerpJ 3.0, the latest major version, has been essentially rewritten from scratch, leveraging seven years of experience from working on other WebAssembly-based projects like CheerpX (the Flash equivalent of CheerpJ). The third version improves performance, particularly start-up time, by eliminating the need to compile applications ahead of time before the first run. It provides a WebAssembly JVM and a JIT compiler for Java bytecode, along with a virtualised system layer for the file system and networking.

CheerpJ 3.0 currently supports only Java 8; however, its creators have already announced that Java 11 support will arrive later this year. To showcase what it can do, the developers used Minecraft as an example, running it directly in a browser window.

I’m now waiting for someone to achieve metacircularity by writing a JVM in Minecraft. The hardware is slowly taking shape.

Liberica JDK 21 – RISC-V Edition

BellSoft, the company behind Liberica, is fond of unusual JDK distributions. Last year we got Liberica JDK Performance Edition, a variant of JDK 11 with performance improvements backported from JDK 17, and now comes a JDK build that runs natively on processors based on the RISC-V architecture.

RISC-V is gaining popularity, primarily because of its open nature. Contrary to what one might think, RISC-V is not a processor. Instead, it’s an instruction set architecture (ISA): a specification of the instructions a processor must support, which anyone can then implement in hardware. It follows the RISC (reduced instruction set computer) philosophy of offering a small, simple set of instructions. As stated on Wikipedia:

Unlike most ISAs, RISC-V can be freely used for any purpose, allowing anyone to design, manufacture and sell RISC-V chips and software. Although it is not the first open ISA architecture, it is significant because it was designed for modern computerised devices such as warehouse-scale cloud computers, high-end mobile phones and the smallest embedded systems.

Support for RISC-V arrived in OpenJDK with JDK 19 (JEP 422), but from what I gather Liberica is the first vendor to provide an easily downloadable distribution. If I’m wrong, please correct me in the comments – I’m keen to learn something new too 🙂

Quarkus 3.7

The latest Quarkus 3.7 not only raises the minimum Java version to JDK 17, but also begins tidying up the naming of the RESTEasy Reactive dependencies and extensions. This version also improves Hibernate support, updating Quarkus to the latest stable versions of the popular Java ORM and introducing a management endpoint for Hibernate Search. It also drops the requirement for the now-deprecated OkHttp/Okio version, and adds support for Micrometer’s @MeterTag annotation, which lets metric tags be derived from method parameters.

Quarkus 3.7 is the last release before Quarkus 3.8, which will be the LTS version. So this is also the last chance for the community to test the whole thing thoroughly, which I encourage you to do.

langchain4j 0.26

The latest version of langchain4j, 0.26, brings several new core features and integrations. Notable new core features include an advanced Retrieval-Augmented Generation (RAG) implementation, that is, the ability to generate answers grounded in source documents retrieved for a given query, and support for multimodal input, that is, combining text and images, something ChatGPT already allows and which people use, for example, to solve maths homework.
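Stripped of the framework, the retrieval and augmentation parts of RAG boil down to finding the stored text whose embedding is most similar to the query’s embedding, and pasting it into the prompt. The sketch below shows only that skeleton in plain Java. It is not langchain4j’s API: the class and method names are made up, the three-dimensional “embeddings” are hand-made toy vectors (a real setup would get them from an embedding model and keep them in an embedding store), and the final call to an LLM is left out.

```java
import java.util.Comparator;
import java.util.Map;

public class ToyRag {

    /** Cosine similarity between two vectors of equal length. */
    static double cosine(double[] a, double[] b) {
        double dot = 0, normA = 0, normB = 0;
        for (int i = 0; i < a.length; i++) {
            dot += a[i] * b[i];
            normA += a[i] * a[i];
            normB += b[i] * b[i];
        }
        return dot / (Math.sqrt(normA) * Math.sqrt(normB));
    }

    /** Retrieval step: returns the stored text whose embedding is closest to the query. */
    static String retrieve(double[] query, Map<String, double[]> store) {
        return store.entrySet().stream()
                .max(Comparator.comparingDouble(e -> cosine(query, e.getValue())))
                .orElseThrow()
                .getKey();
    }

    /** Augmentation step: prepends the retrieved context to the user's question. */
    static String augment(String question, String context) {
        return "Answer using only this context:\n" + context + "\n\nQuestion: " + question;
    }

    public static void main(String[] args) {
        // Hypothetical toy embeddings; a real system would compute them with a model.
        Map<String, double[]> store = Map.of(
                "Quarkus 3.8 will be an LTS release.", new double[] {0.9, 0.1, 0.0},
                "Javalin 6 disables virtual threads by default.", new double[] {0.1, 0.9, 0.1},
                "CheerpJ 3.0 runs Java 8 in the browser.", new double[] {0.0, 0.2, 0.9});
        double[] query = {0.2, 0.8, 0.2}; // pretend embedding of the question below
        String context = retrieve(query, store);
        System.out.println(augment("Does Javalin 6 use virtual threads?", context));
    }
}
```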

I don’t think anywhere GPT is as overused as in education


In terms of integrations, this release broadens the framework’s compatibility with various model providers like Mistral AI, Wenxin Qianfan and Cohere Rerank, as well as ‘embedding stores’ such as Azure AI Search, Qdrant and Vearch. Document loaders now offer support for Azure Blob Storage, GitHub and Tencent COS. Moreover, significant updates include support for image input and truncated embeddings in OpenAI, Ollama and Qwen, plus image generation in Vertex AI.

Javalin 6.0

Javalin 6 is the latest iteration of the popular web framework for Java and Kotlin, which emphasises ease of use and interoperability between the two languages. Essentially, it is a thin layer on top of the Jetty server, focused on the web layer. Javalin is notable for its compact size and readability: its source code consists of roughly 8,000 lines of Java/Kotlin, plus roughly 12,000 lines of tests.

I always respect tools whose inner workings can be understood over a long breakfast.

Javalin 6 introduces many new features, including a complete rewrite of the plugin system and a remodelling of the Jetty configuration. Virtual threads are no longer used by default: the new version requires setting config.useVirtualThreads = true and running on a JDK version that supports them. The AccessManager interface has also been removed and the approach to permission management has changed, which is the main topic of the migration guide accompanying the release.

WildFly 31

WildFly 31 includes the upgrade of MicroProfile to version 6.1 and Hibernate to version 6.4, and introduces support (in Preview) for Jakarta MVC 2.1 through the Eclipse Krazo project. In addition, Reactive Messaging now supports communication via AMQP. Under the hood, WildFly has also received changes to its modularisation, making extensions easier to develop and explicitly defining the stability level of individual features.

Alongside the server updates, WildFly has announced the beta availability of WildFly Glow, a toolkit designed to streamline WildFly installations in various environments, primarily cloud-based ones. WildFly Glow inspects application artifacts to identify the required feature packs and Galleon layers (Galleon being WildFly’s provisioning tool, which assembles a server containing only the subsystems an application needs), ensuring a lean and effective server configuration.