A week ago, we mainly focused on new releases. Today’s edition, however, is fully dedicated to the recent surge in low-level updates.

1. What’s new in Project Leyden?

Some JVM projects are far better known than others. Loom and Valhalla are names most people are familiar with, and Panama might also ring a bell for some in the community, even though I assume many would prefer not to engage with it too extensively 😀. On the other hand, there are lesser-known projects like Leyden, which are closely tied to the technical internals of the JVM. Despite their specialized focus, they are, in my opinion, extremely intriguing. I’m pleased to share that, armed with the latest information straight from the JVM Language Summit, I can once again provide an overview of the project’s objectives and highlight its most recent progress.

Leyden brings a significant change to Java optimization by applying what was originally described as ‘shifting and constraining’ computation. Instead of relying solely on runtime compilation, Leyden moves some of the optimization work to earlier phases (such as Ahead-of-Time compilation, Application Class Data Sharing time, or linking), depending on the needs of the specific program and runtime environment. After all, we live in an era of autoscaling, clouds, and various Kubernetes-like environments, which have very different needs.

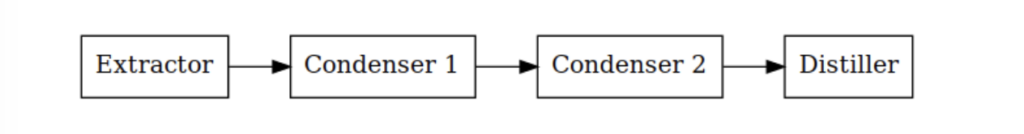

The primary new component introduced by Leyden is the ‘condenser’. Condensers can be viewed as specialized tools that execute in sequence, each one transforming the application. The process begins with a basic application configuration. As it passes through each condenser, transformations are applied until the final stage is reached: an optimized version of the application, ready for efficient execution.

To draw a comparison, Leyden’s condenser mechanism is similar to middleware in web frameworks like Express.js for Node.js. In web development, middleware intercepts requests, processes them, and may even modify them before they reach their final destination. In a similar vein, Leyden condensers take an ApplicationModel, an immutable representation of the application, and produce an updated version of it. This guarantees that the original model stays intact, while each optimization yields a new, enhanced version. As you can see, the bare-bones condenser interface is fairly straightforward:

```java
interface Condenser {
    ApplicationModel condense(ApplicationModel model);
}
```
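To make the middleware analogy concrete, here is a toy pipeline in plain Java. It does not use Leyden’s actual ApplicationModel API, which is still a design in progress; `ToyModel`, `removeUnused`, `preInitialize` and `runAll` are hypothetical names invented for this sketch. It only shows the shape of the idea: each condenser takes an immutable model and returns a new one, and condensers run one after another.

```java
import java.util.List;
import java.util.Set;
import java.util.TreeSet;

// Toy illustration only: ToyModel and the condensers below are hypothetical,
// not the real Leyden ApplicationModel API.
public class CondenserPipeline {

    // Immutable stand-in for ApplicationModel: class names plus the set of
    // classes whose initialization has been "shifted" to build time.
    record ToyModel(List<String> classes, Set<String> preInitialized) {
        ToyModel {
            classes = List.copyOf(classes);
            preInitialized = Set.copyOf(preInitialized);
        }
    }

    // Same shape as the Condenser interface: model in, new model out.
    @FunctionalInterface
    interface Condenser {
        ToyModel condense(ToyModel model);
    }

    // Drops classes that a (hypothetical) reachability analysis found unused.
    static Condenser removeUnused(Set<String> unused) {
        return model -> new ToyModel(
                model.classes().stream().filter(c -> !unused.contains(c)).toList(),
                model.preInitialized());
    }

    // Marks the given classes as initialized ahead of time.
    static Condenser preInitialize(Set<String> toInit) {
        return model -> {
            Set<String> done = new TreeSet<>(model.preInitialized());
            model.classes().stream().filter(toInit::contains).forEach(done::add);
            return new ToyModel(model.classes(), done);
        };
    }

    // Runs condensers in order, each consuming the previous one's output.
    static ToyModel runAll(ToyModel input, List<Condenser> pipeline) {
        ToyModel current = input;
        for (Condenser c : pipeline) {
            current = c.condense(current);
        }
        return current;
    }

    public static void main(String[] args) {
        ToyModel original = new ToyModel(List.of("App", "Config", "LegacyUtil"), Set.of());
        ToyModel condensed = runAll(original, List.of(
                removeUnused(Set.of("LegacyUtil")),
                preInitialize(Set.of("Config"))));
        System.out.println(original);  // still contains LegacyUtil
        System.out.println(condensed); // LegacyUtil removed, Config pre-initialized
    }
}
```

Because every step returns a fresh model, `original` is left untouched, mirroring the guarantee described for ApplicationModel.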

Of course, the real magic lies in the ApplicationModel class; those interested are encouraged to read the article Toward Condensers by Brian Goetz, Mark Reinhold, & Paul Sandoz, where you will find more details and code examples. I’ll provide one more sample here, which I believe demonstrates well how a condenser is used:

```java
@Override
public ApplicationModel condense(ApplicationModel model) {
    ModelUpdater updater = model.updater();
    // Visit every class in every module and on the class path
    Stream.concat(model.modules(), model.classPath())
          .flatMap(model::classes)
          .forEach(classKey -> {
              // The right-hand side of this assignment was lost in this excerpt;
              // readClassModel is a hypothetical placeholder for that lookup.
              ClassModel cm = readClassModel(model, classKey);
              if (cm != null) {
                  // transform the class here, then store the result
                  updater.addToContainer(classKey, ClassContents.of(cm));
              }
          });
    // Produce a new model; the input model is left unchanged
    return updater.apply();
}
```

However, Leyden doesn’t rely solely on static transformation. HotSpot currently uses a strategy called Tiered Compilation to reach peak performance. Execution starts at Level 0, where the JVM interprets bytecode, and frequently used methods gradually advance to Level 4, where they run as code fully optimized by the C2 compiler. These transitions happen while the application is running. If you want to learn more, Cesar Soares from Microsoft recently published a comprehensive article on the subject, How Tiered Compilation works in OpenJDK. As you have probably guessed from this section’s theme, Leyden improves this process too.
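The levels can be summarized as a small enum. This is a deliberately simplified model: the level numbers and their meaning follow HotSpot’s tiers, but `tierAfter` and its two thresholds are illustrative assumptions, since the real policy also weighs loop back-edges, compile-queue length and deoptimization.

```java
// Simplified model of HotSpot's tiered compilation levels.
// The thresholds are illustrative only, not HotSpot's actual policy.
public class TieredLevels {

    enum Tier {
        INTERPRETER(0, "bytecode interpreter"),
        C1_NO_PROFILING(1, "C1, fully optimized, no profiling"),
        C1_LIMITED_PROFILING(2, "C1 with invocation/back-edge counters"),
        C1_FULL_PROFILING(3, "C1 with full profiling"),
        C2(4, "C2, optimized using collected profiles");

        final int level;
        final String description;

        Tier(int level, String description) {
            this.level = level;
            this.description = description;
        }
    }

    static final int PROFILE_THRESHOLD = 200;    // illustrative
    static final int OPTIMIZE_THRESHOLD = 5_000; // illustrative

    // Models only the common path for a hot method: 0 -> 3 -> 4.
    static Tier tierAfter(int invocations) {
        if (invocations >= OPTIMIZE_THRESHOLD) return Tier.C2;
        if (invocations >= PROFILE_THRESHOLD) return Tier.C1_FULL_PROFILING;
        return Tier.INTERPRETER;
    }

    public static void main(String[] args) {
        for (int n : new int[] {10, 1_000, 50_000}) {
            Tier t = tierAfter(n);
            System.out.println(n + " calls -> level " + t.level + " (" + t.description + ")");
        }
    }
}
```

Every fresh JVM climbs this ladder again from level 0, which is the warm-up cost Leyden aims to reduce.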

The previously mentioned update from the JVM Language Summit introduces so-called ‘training runs’. These are runs of the application under a representative workload, used to gather data about its behaviour. By observing how the application behaves at runtime in various scenarios, condensers can gain crucial information about how it will behave in production. This way, the optimizations are not based solely on theoretical models but are grounded in actual application dynamics, as is the case, for instance, with Profile-Guided Optimization (PGO) in GraalVM. By the way, GraalVM with its Native Image could also be treated as yet another condenser in the future.

Leyden uses the data obtained from these training runs to apply crucial optimizations before the application even starts. As a result, when the JVM launches the application with its standard tiered compilation, it already has a head start. This anticipatory approach not only shortens the warm-up phase but also lets the application spend more of its lifetime at peak performance.
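Conceptually, a training run feeds a profile into the optimization step. The toy sketch below captures only that data flow: a hypothetical `Profiler` counts method invocations during a representative workload, and `hotMethods` picks the ones worth optimizing before production starts. All names and the threshold are invented for illustration; as far as I know, in Leyden the profiles are collected and consumed by the JVM itself, not by application code.

```java
import java.util.List;
import java.util.Map;
import java.util.TreeMap;
import java.util.function.Consumer;

// Toy model of "training run -> profile -> ahead-of-time decision".
// Profiler, train, hotMethods and the threshold are hypothetical.
public class TrainingRun {

    // Counts how often each (named) method is invoked during training.
    static final class Profiler {
        private final Map<String, Integer> counts = new TreeMap<>();

        void record(String method) {
            counts.merge(method, 1, Integer::sum);
        }

        Map<String, Integer> snapshot() {
            return Map.copyOf(counts);
        }
    }

    // Runs a representative workload and returns the collected profile.
    static Map<String, Integer> train(Consumer<Profiler> workload) {
        Profiler p = new Profiler();
        workload.accept(p);
        return p.snapshot();
    }

    // Methods invoked at least `threshold` times become candidates for
    // optimization before the production run starts.
    static List<String> hotMethods(Map<String, Integer> profile, int threshold) {
        return profile.entrySet().stream()
                .filter(e -> e.getValue() >= threshold)
                .map(Map.Entry::getKey)
                .sorted()
                .toList();
    }

    public static void main(String[] args) {
        Map<String, Integer> profile = train(p -> {
            for (int i = 0; i < 1_000; i++) p.record("parseRequest");
            for (int i = 0; i < 5; i++) p.record("loadConfig");
        });
        System.out.println(hotMethods(profile, 100)); // prints [parseRequest]
    }
}
```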

If you’re interested in seeing Leyden in action, Brian Goetz has shared an analysis, Condensing Indy Bootstraps. It walks through the entire process, specifically in the context of the extra code generated for elements like lambdas via invokedynamic instructions. Following it, however, requires a basic understanding of how the invokedynamic instruction and the CallSite and MethodHandle objects work. There is also a more advanced text demonstrating the use of the still-in-draft Computed Constants, a topic I discussed two editions ago. Consider both of these resources optional reading. 😄
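For a quick refresher before reading it: an invokedynamic instruction is linked by calling a bootstrap method, which returns a CallSite wrapping a MethodHandle. The sketch below performs that linkage by hand with the real `java.lang.invoke` API; `greet`, `bootstrap` and `callThroughSite` are made-up names, and a real indy call site is linked by the JVM itself rather than by calling the bootstrap from Java code. As I understand it, condensing indy bootstraps means doing this linkage work at build time instead of on first execution.

```java
import java.lang.invoke.CallSite;
import java.lang.invoke.ConstantCallSite;
import java.lang.invoke.MethodHandle;
import java.lang.invoke.MethodHandles;
import java.lang.invoke.MethodType;

public class IndyBasics {

    // The target the call site will eventually point to.
    static String greet(String name) {
        return "Hello, " + name;
    }

    // Same signature shape as an invokedynamic bootstrap method:
    // (Lookup, String name, MethodType type) -> CallSite.
    static CallSite bootstrap(MethodHandles.Lookup lookup, String name, MethodType type)
            throws ReflectiveOperationException {
        MethodHandle target = lookup.findStatic(IndyBasics.class, name, type);
        return new ConstantCallSite(target); // target fixed once linked
    }

    // Roughly mimics what the JVM does on first execution of an indy
    // instruction: run the bootstrap, then invoke the call site's target.
    static String callThroughSite(String arg) {
        try {
            MethodType type = MethodType.methodType(String.class, String.class);
            CallSite site = bootstrap(MethodHandles.lookup(), "greet", type);
            return (String) site.dynamicInvoker().invokeExact(arg);
        } catch (Throwable t) {
            throw new IllegalStateException(t);
        }
    }

    public static void main(String[] args) {
        System.out.println(callThroughSite("Leyden")); // prints Hello, Leyden
    }
}
```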

2. What’s new at Project Valhalla?

It’s been a while since Valhalla came up, right? That doesn’t mean there haven’t been any intriguing developments, though. The project is still evolving, and with each iteration its design becomes simpler. In June, I discussed how the handling of nullability was being simplified, and now the simplifications are happening at the VM level. To fully grasp what’s going on, let’s delve into some theory.

L-types, often referred to as classic reference types, are the JVM’s fundamental descriptors for Java classes (for example, Ljava/lang/String;). They have been the go-to option for programmers for years. When a standard Java object is instantiated, it resides in memory, and in code we manipulate a reference to it. As a result, every operation performed through any reference to the object changes the same, single copy of its state in memory.
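This aliasing behaviour is easy to see in code. In the small sketch below (class names are illustrative), two variables share one object, and `Class.descriptorString()` (available since Java 12) prints the JVM-level L-type descriptor of a reference type next to the primitive descriptor for `int`.

```java
public class ReferenceSemantics {

    // A plain mutable class: instances have identity and live behind references.
    static final class Counter {
        int value;
    }

    static int incrementThroughAlias() {
        Counter a = new Counter();
        Counter b = a; // copies the reference, not the object
        b.value++;     // mutates the single shared instance
        return a.value; // the change is visible through `a` as well
    }

    public static void main(String[] args) {
        System.out.println(incrementThroughAlias());         // prints 1
        System.out.println(String.class.descriptorString()); // prints Ljava/lang/String;
        System.out.println(int.class.descriptorString());    // prints I
    }
}
```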

Valhalla introduces a concept known as Value Classes. These are essentially data containers that do not possess their own unique identity. To illustrate, consider a value class that represents a point in space, defined by X and Y coordinates. If two instances have identical coordinates, they are indistinguishable. This is in contrast to traditional objects, where each object maintains a unique identity, even if the data they contain is identical.
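Records are the closest thing available on a standard JDK today: their `equals` compares state, but every instance still has its own identity, so `==` can tell two equal points apart. The sketch below shows that gap. Under the current Valhalla draft (JEP 401), declaring `value record Point(int x, int y)` would remove identity so that `==` compares field values; that syntax needs a Valhalla early-access build, so it appears only in a comment.

```java
public class IdentityVsValue {

    // On a Valhalla build this could be `value record Point(int x, int y) {}`.
    record Point(int x, int y) {}

    // State-based comparison: same coordinates means equal.
    static boolean sameState(Point a, Point b) {
        return a.equals(b);
    }

    // Identity comparison: true only for the very same instance (today).
    static boolean sameIdentity(Point a, Point b) {
        return a == b;
    }

    public static void main(String[] args) {
        Point p = new Point(1, 2);
        Point q = new Point(1, 2);
        System.out.println(sameState(p, q));    // prints true
        System.out.println(sameIdentity(p, q)); // prints false: two distinct identities
    }
}
```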

Why is this significant? Passing data by value can improve performance, particularly in data-intensive applications, because the JVM is free to lay such objects out flat in arrays and fields instead of reaching them through pointers. In addition, it provides more predictable and concise behavior, especially in multi-threaded environments, since value objects are immutable and there are no side effects from shared references.

However, implementing value classes was not a simple process. At first, it was thought that new JVM-level Q-types and so-called v-bytecodes (variants of most operations with a v prefix; for example, vload as the counterpart of the existing aload) would be needed to work with these new data structures. This would have forced both developers and the JVM to deal with two distinct sets of internals, potentially complicating the code and diminishing the advantages of using value classes.

As the Valhalla project evolved, it became clear that traditional L-types, with certain adjustments and enhancements, might be able to represent value classes. This means that separate Q-types and dedicated v-bytecodes may not be needed, so adopting value classes shouldn’t require significant system-wide changes or extensive recompilation. The article new/dup/init: The Dance Goes On by John Rose, published last week, explains in more detail how this proposed simplification affects object creation.
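For readers who haven’t looked at bytecode in a while, this is the sequence the article’s title refers to. The class below is just a vehicle (all names are made up for illustration); the interesting part is the comment listing roughly what javac emits for `new Point(1, 2)`. Between `new` and `invokespecial`, the stack holds a reference to an object that exists but is not yet initialized; as I understand it, John Rose’s article is about how value objects, which ideally should never be observable in such a half-built state, can still be created with this familiar sequence.

```java
public class NewDupInit {

    static final class Point {
        final int x;
        final int y;

        Point(int x, int y) {
            this.x = x;
            this.y = y;
        }
    }

    // `javap -c NewDupInit` shows roughly this body (constant-pool indices omitted):
    //   new           NewDupInit$Point                 // allocate an uninitialized instance
    //   dup                                            // one copy for <init>, one to return
    //   iconst_1
    //   iconst_2
    //   invokespecial NewDupInit$Point."<init>":(II)V  // run the constructor
    //   areturn
    static Point make() {
        return new Point(1, 2);
    }

    public static void main(String[] args) {
        Point p = make();
        System.out.println(p.x + "," + p.y); // prints 1,2
    }
}
```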

To summarize, the JVM’s design favors a clear and simple model. Complex additions like Q-types and v-bytecodes make the system harder to maintain. The latest iteration of Valhalla streamlines the design and reduces future technical debt by avoiding unnecessary duplication that would ripple through the entire system.

3. Project Hermes – new network data transfer protocol.

And finally, something that transcends the JDK itself, yet remains within the realm of low-level components.

The concept of Big Data has evolved significantly since it emerged two decades ago, built on the MapReduce programming model. Nowadays, many predict its demise, as seen in, e.g., the viral article Big Data is Dead. However, it seems to me that experts in this area have no reason to worry in the near future, even though the world keeps discovering new darlings.

Why am I discussing this topic? It’s because many big data solutions are developed in Java, as these systems are typically designed to operate on multiple servers in cloud computing, where scaling by adding units is more cost-effective than purchasing expensive supercomputers. Java performs exceptionally well in this context. However, horizontal scaling, which is common in these systems, has its challenges. Operations like reduce, aggregate, and join often require intensive data transfer over the network, which can create a bottleneck. The Fallacies of Distributed Computing, despite the years since their publication, still hold true. Cloud providers now offer advanced InfiniBand networks that can achieve throughputs of up to 200 Gbit/s with minimal latency. However, Java’s network model, which is based on TCP/UDP sockets, introduces additional overhead when using InfiniBand. Libraries such as JSOR or jVerbs solve this problem by giving Java direct access to InfiniBand, but they require application modifications and are based on the Java Native Interface (JNI).
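A back-of-the-envelope calculation shows why link speed matters so much for operations like join. The sketch assumes a hypothetical 1 TB (10^12 bytes) shuffle and ideal line rate; real transfers are slower because of exactly the protocol and software overhead described above. The class and method names are illustrative.

```java
public class ShuffleTime {

    // Seconds needed to move `bytes` over a link of `gbitPerSecond`,
    // at ideal line rate (no protocol or software overhead).
    static double transferSeconds(long bytes, double gbitPerSecond) {
        double bits = bytes * 8.0;
        return bits / (gbitPerSecond * 1e9);
    }

    public static void main(String[] args) {
        long oneTerabyte = 1_000_000_000_000L; // 10^12 bytes
        System.out.println(transferSeconds(oneTerabyte, 10));  // prints 800.0
        System.out.println(transferSeconds(oneTerabyte, 200)); // prints 40.0
    }
}
```

A 20x faster link only pays off if the software stack can actually saturate it, which is where the socket overhead becomes the limiting factor.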

This is where Project Hermes, by Michael Schoettner, Filip Krakowski and Fabian Ruhland from the University of Düsseldorf, comes into play. It aims to modernize Java’s networking support with an ultra-high-speed communication solution. The initiative builds on Project Panama and the Foreign Function & Memory API, which you might be familiar with and whose goal is to eventually replace the error-prone JNI. Hermes introduces two open-source libraries: Infinileap, which gives Java a direct interface to the native UCX library (supporting various network types), and hadroNIO, which offers Java NIO support based on this library. In tests on Oracle’s cloud infrastructure (Oracle is one of the project’s sponsors, even though this isn’t officially a JDK project), the initial results showed significant performance improvements for Netty-based applications. Future plans include more comprehensive scalability tests and a language-independent API for Infinileap based on the Sidecar pattern.
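Hermes’s own code isn’t shown here, but the FFM API it builds on is easy to illustrate. The sketch below uses the standard example from the FFM documentation, calling C’s `strlen` directly from Java without any hand-written JNI glue. It assumes Java 22 or later (where the API is final) and a platform whose default C library exports `strlen`; the class name `FfmStrlen` is made up.

```java
import java.lang.foreign.Arena;
import java.lang.foreign.FunctionDescriptor;
import java.lang.foreign.Linker;
import java.lang.foreign.MemorySegment;
import java.lang.foreign.ValueLayout;
import java.lang.invoke.MethodHandle;

// Calling a native C function through the FFM API instead of JNI.
// Standard FFM example, not Hermes/Infinileap code.
public class FfmStrlen {

    // Downcall handle for: size_t strlen(const char *s)
    private static final MethodHandle STRLEN = Linker.nativeLinker().downcallHandle(
            Linker.nativeLinker().defaultLookup().find("strlen").orElseThrow(),
            FunctionDescriptor.of(ValueLayout.JAVA_LONG, ValueLayout.ADDRESS));

    static long strlen(String s) {
        // The confined arena frees the native copy of the string on close.
        try (Arena arena = Arena.ofConfined()) {
            MemorySegment cString = arena.allocateFrom(s); // NUL-terminated UTF-8
            return (long) STRLEN.invokeExact(cString);
        } catch (Throwable t) {
            throw new IllegalStateException(t);
        }
    }

    public static void main(String[] args) {
        System.out.println(strlen("Hello")); // prints 5
    }
}
```

The JVM prints a warning about restricted native access unless the program is started with `--enable-native-access=ALL-UNNAMED`. Conceptually, Infinileap does the same kind of binding for UCX’s functions rather than `strlen`.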

This is another aspect that, while not immediately apparent, will promote the use of Java in the most challenging tasks. It’s a low-level piece, but crucial for the entire puzzle. As is usual with scholarly projects, a whitepaper titled Accelerating netty-based applications through transparent InfiniBand support has been released, where you can find more information.

I strongly support the idea of naming projects after mythological figures, particularly when it’s done as cleverly as this.

In conclusion, I’d like to share a personal recommendation. One of my very first articles was We tried Groovy EE – and what we have learned from it, in which I described my experiences with Groovy in Java EE applications. I remember learning the language from Ken Kousen’s book Making Java Groovy, so I’m delighted to recommend his newsletter, which you can find on Substack under the title Tales from the jar side.

It is a superb source of knowledge, not just about Java (discussed in a broader context than merely the technical aspects of the JVM and JEPs), but also of in-depth analysis and other content Ken recommends. Each issue of the newsletter also comes with its own video version and amusing finds from around the internet. If you appreciate the humor in this newsletter, you’re likely to enjoy Ken’s material as well.

I strongly recommend that you read Tales from the jar side 😀