Today, a mix of my two favorite topics: AI and retro gaming ❤️. Also on the menu: the big change in YouTube leadership and the new version of GitHub Copilot.

1. MarioGPT: What are Text2Level models?

I suspect you’ve all heard of Mario, but I’m sure that many missed the release of Super Mario Maker. It is a game about the mustachioed plumber, released by Nintendo in 2015 for the Wii U console, which lets players build their own Mario levels using “blocks” from older Mario games. You can then share them with other players around the world, giving everyone access to an essentially infinite number of levels. Now there is an even simpler tool to ensure a steady supply of platforming entertainment.

A team of computer scientists from the IT University of Copenhagen has developed MarioGPT, an approach based on the GPT-2 language model that can generate Super Mario Bros. levels. The system takes textual prompts (similar to those used for generating images in DALL-E and similar tools) and converts them into playable levels. The researchers trained it on samples from the original game and the somewhat forgotten Japanese Super Mario Bros. 2. The code, along with instructions for generating levels, is available on GitHub, so users can create any variation they want.

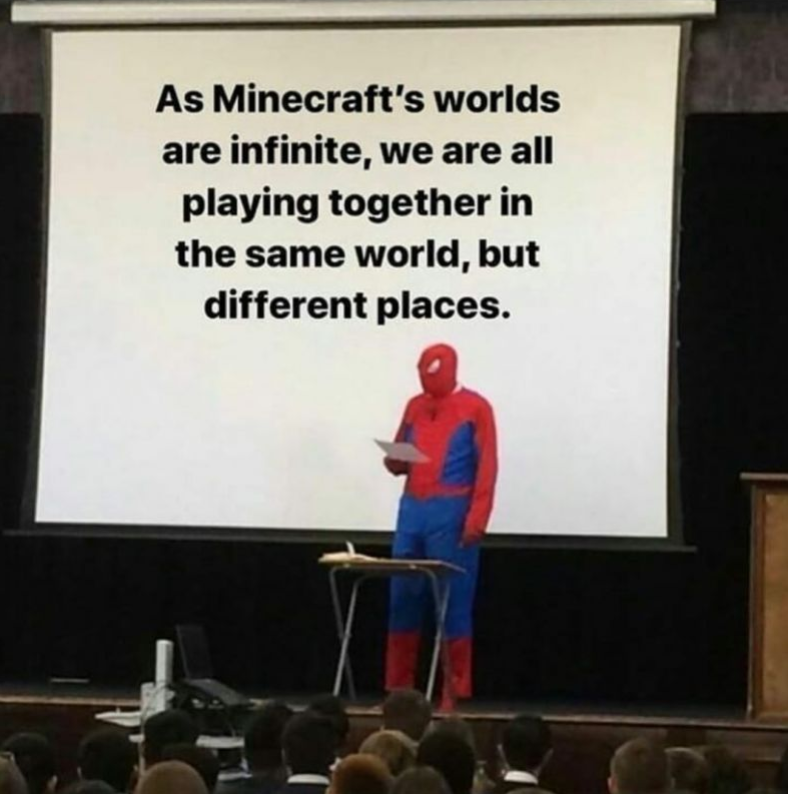

Pretty impressive, right? Interestingly, the idea of generating a game from pre-made blocks is not new: so-called procedural games are built on it. These are video games in which content is generated by algorithms (rule-based ones rather than deep models) instead of being created by hand. The game world is generated from programmed rules and parameters that produce random but consistent and logical levels, and sometimes characters, enemies, or items as well. Diablo, for example, was based on procedural generation, and it can be found in many indie productions, including one of the biggest hits of recent years, Minecraft.
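To make "rules and parameters" a bit more concrete, here is a minimal sketch of rule-based procedural generation in Python. It is not how Diablo, Minecraft, or MarioGPT work internally; the tile symbols (`X` ground, `-` air, `E` enemy, `?` bonus block), the probabilities, and the function name `generate_level` are all made up for illustration.

```python
import random


def generate_level(seed: int, width: int = 40, height: int = 8) -> list[str]:
    """Build a tiny side-scrolling level as rows of text (hypothetical tile set)."""
    rng = random.Random(seed)  # the same seed always reproduces the same level

    # Rule 1: decide where the ground has pits. The first 3 and last 3 columns
    # stay solid, pits are 1 or 2 tiles wide, and every pit is followed by at
    # least one solid column, so each gap stays jumpable.
    ground = [True] * width
    x = 3
    while x < width - 4:
        if rng.random() < 0.12:
            pit_width = rng.randint(1, 2)
            for offset in range(pit_width):
                ground[x + offset] = False
            x += pit_width + 1  # skip the pit plus one guaranteed solid column
        else:
            x += 1

    # Rule 2: place ground tiles, then occasionally an enemy or a bonus block,
    # but never in the first 3 columns where the player starts.
    grid = [["-"] * width for _ in range(height)]
    for x in range(width):
        if not ground[x]:
            continue
        grid[height - 1][x] = "X"
        if x < 3:
            continue
        roll = rng.random()
        if roll < 0.10:
            grid[height - 2][x] = "E"  # enemy standing on the ground
        elif roll < 0.20:
            grid[height - 4][x] = "?"  # bonus block floating above the ground

    return ["".join(row) for row in grid]


if __name__ == "__main__":
    print("\n".join(generate_level(seed=7)))
```

Changing the seed gives a different level, while the rules guarantee that every level stays playable. That combination of randomness and constraints is the "random but consistent" part.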

Examples of procedural games can be found in the list linked in the sources below, and their use is growing. In Assassin’s Creed: Odyssey, for example, Ubisoft generated entire questlines this way, so the game always has new challenges for the player.

This, by the way, is not the only marriage of ML, academia, and Mario. A few years ago, an artificial intelligence called MarI/O learned to play Mario at a superhuman level (why do I even remember such things), and the story went viral. Now, by combining the two models, we have a solution for rebellious AI: if it starts to misbehave, we’ll lock it into a perpetual cycle of creating new levels and immediately beating them.

Sources

- MarioGPT: Open-Ended Text2Level Generation through Large Language Models

- shyamsn97/mario-gpt

- 10 Best Games With Procedural Generation

2. Changing of the guard at the position of YouTube CEO

We’ll start with the facts: Susan Wojcicki is stepping down as CEO of YouTube after heading the world’s largest video site for nine years. Her role will be taken over by Neal Mohan, the company’s chief product officer. Wojcicki said that she will focus on family, health, and personal projects. Her departure is symbolic for Google and technology in general, as she was one of the few women to lead a huge technology business. She was also integral to the founding of Google: it was she who rented her Silicon Valley garage to co-founders Larry Page and Sergey Brin in 1998.

It was during her tenure that YouTube became such a huge part of today’s tech (and beyond) world. She also navigated YouTube through difficult times, such as the adpocalypse of 2017 and the shooting at the company’s headquarters in 2018. While some creators were frustrated by her methodical, data-driven decision-making, others saw her as steering the ship by the North Star. It was she who popularized the use of so-called “North Star Metrics.”

And it is the latter term that will probably be associated with the greatest controversy surrounding her. It was during her time that the main metric guiding the company was “user engagement,” which gave the portal incredible growth but also had its dark side. One of the biggest controversies surrounding Wojcicki is the way YouTube handles content moderation. Critics say the platform is slow to remove videos containing hate speech, misinformation, and extremist content. Some have accused Wojcicki of not taking these issues seriously enough, in pursuit of keeping users on the platform for as long as possible.

And speaking of controversial CEOs, last week the tech and gossip press was full of headlines about Elon Musk forcing changes to Twitter’s algorithm so that it would show his own tweets to users more often. The matter was described by, among others, Casey Newton of the newsletter “Platformer”. Musk explains that it was simply a fix for a bug that caused the distribution funnel to “clog up” when there were too many recipients. What is the truth? Personally, I suspect a mix of both: the problem probably existed, but it was the CEO who pushed for the “prioritization” of its repair. I say that because the bug explanation fits what is publicly known about Twitter’s architecture.

And if you’re intrigued by how Twitter works under the hood, I’ll take the opportunity to once again recommend the invaluable System Design Primer. Elon Musk’s company is one of the (simplified, of course) examples that appear there.

Sources

- YouTube CEO Susan Wojcicki is stepping down

- Elon Musk fires a top Twitter engineer over his declining view count

- System Design Primer

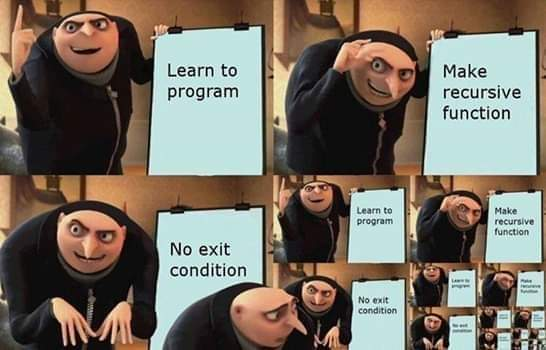

3. GitHub Copilot has received a new release

Everyone has been talking about image generation and ChatGPT lately, but don’t forget that in the GPT-3 era it was GitHub Copilot that went viral. Now, GitHub has announced updates to its AI-based code suggestion tool. The release includes an improved Codex model that provides better code synthesis, better contextual understanding through the Fill-In-the-Middle paradigm, and a lightweight client-side model that reduces the frequency of unwanted suggestions. In addition, a new vulnerability prevention system has been launched that identifies and blocks insecure coding patterns in real time, such as hardcoded credentials and SQL injections. These updates aim to make GitHub Copilot more efficient, responsive, and secure for developers.
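If you have never seen the two insecure patterns mentioned above side by side with their fixes, here is a small Python sketch using the standard `sqlite3` module. It only illustrates the patterns themselves, not how Copilot detects them (the announcement as summarized here doesn’t say); the `users` table, the `EXAMPLE_API_KEY` variable, and the function names are hypothetical.

```python
import os
import sqlite3

# Insecure pattern 1: a hardcoded credential committed with the code, e.g.
#   API_KEY = "sk-live-1234"   # placeholder value, shown only as a bad example
# Safer: read the secret from the environment (hypothetical variable name).
API_KEY = os.environ.get("EXAMPLE_API_KEY", "")


def find_user_unsafe(conn, name):
    # Insecure pattern 2: user input concatenated straight into the SQL text,
    # so a crafted value can change the meaning of the query.
    query = "SELECT id, name FROM users WHERE name = '" + name + "'"
    return conn.execute(query).fetchall()


def find_user_safe(conn, name):
    # Safer: a parameterized query; the driver treats the input purely as data.
    return conn.execute(
        "SELECT id, name FROM users WHERE name = ?", (name,)
    ).fetchall()


def demo():
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
    conn.executemany(
        "INSERT INTO users (name) VALUES (?)", [("mario",), ("luigi",)]
    )
    payload = "nobody' OR '1'='1"  # classic injection string
    return find_user_unsafe(conn, payload), find_user_safe(conn, payload)


if __name__ == "__main__":
    unsafe_rows, safe_rows = demo()
    print("unsafe query returned", len(unsafe_rows), "rows")
    print("safe query returned", len(safe_rows), "rows")
```

With the injection string, the concatenated query matches every row in the table, while the parameterized one matches nothing, because no user is literally named `nobody' OR '1'='1`.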

At the same time, a “business” version of the tool also appeared. What sets it apart is additional functionality related to security in the broadest sense. GitHub Copilot for Business now works with VPNs, including those using self-signed certificates, so developers can use it in any environment, including corporations or banks. The tool’s creators also boast that this version includes “industry-leading privacy,” but the post accompanying the release doesn’t spend a single word on that, so we don’t know any details…

And just as with GitHub Copilot, there is again plenty of much-discussed news around its sibling, ChatGPT.

Microsoft recently announced a new ChatGPT-powered version of its Bing search engine. Google was heavily criticized for the errors that happened during the demo of its competitor to this technology (which I wrote about a week ago), and now that more people from the waiting list have been given access to Bing, it has become apparent that the OpenAI model is not doing any better. User testing shows a lot of errors, e.g. wildly wrong financial figures. What’s worse, Bing has even started to “gaslight” its users, trying by all means to convince them that they are wrong, not the model.

As I wrote a week ago, this is no surprise. Large language models like the one behind Bing have no concept of “truth” and only know how to complete a “statistically likely” sentence based on the input and their training data. As a result, they can make things up, state them with conviction, and often be wrong. These fabrications are called hallucinations. Having an AI-assisted search engine is a great idea, but there must be a reliable fact-checking mechanism to verify the model’s answers, and creating one is very, very difficult. I will echo last week’s edition: we have opened a Pandora’s box, and 2023 will be very interesting.
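The “statistically likely” part can be shown with a toy that is nowhere near a real large language model: a bigram counter in Python that always picks the most frequent next word. The tiny corpus and the function names are invented for this sketch. The point is only that the completion comes from word statistics, with no notion of whether the result is true.

```python
from collections import Counter, defaultdict


def train_bigrams(corpus):
    """Count, for every word, which words follow it in the training sentences."""
    counts = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for current, following in zip(words, words[1:]):
            counts[current][following] += 1
    return counts


def complete(counts, prompt, max_words=5):
    """Greedily append the most frequent follower of the last word."""
    words = prompt.lower().split()  # assumes a non-empty prompt
    for _ in range(max_words):
        options = counts.get(words[-1])
        if not options:
            break  # the last word was never followed by anything in training
        words.append(options.most_common(1)[0][0])
    return " ".join(words)


corpus = [
    "the capital of france is paris",
    "the capital of italy is rome",
    "the capital of spain is madrid",
    "the biggest city of france is paris",
]
model = train_bigrams(corpus)

# "australia" never appears in the corpus, yet the model answers confidently:
print(complete(model, "the capital of australia is"))
# prints: the capital of australia is paris
```

The answer is fluent and wrong, simply because “paris” is the word that most often followed “is” in the training sentences. Real models are vastly more sophisticated, but the failure mode is of the same family.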

If you want to read about the many examples of Bing’s toxic responses (as well as the ways Microsoft is trying to mitigate the situation somewhat), I recommend reading the text with the ominous title Bing: “I will not harm you unless you harm me first”.