We’ve been with you for 100 editions! In today’s anniversary edition, we cover topics you know we love (the processor market, the US, China, DALL-E) plus a new standard for floating-point numbers.

1. CHIPS as a way for the U.S. to return to leadership in the processor market

Let’s start with an event that will surely appear on lists of the most important tech moments of 2022 (unless 2022 still has something up its sleeve). President Joe Biden has signed into law the massive “CHIPS and Science Act,” which provides US government subsidies for industrial development and R&D. As much as $52 billion is earmarked for the processor and semiconductor market alone. An accessible breakdown of the bill can be found in Breaking Down the CHIPS and Science Act. If you are interested in the political side of the whole thing, I refer you to Ben Thompson’s excellent piece on Stratechery, Political Chips.

I have to admit that the political side is perhaps the most exciting part of CHIPS. The last two years have shown how vulnerable our economy is to disruptions in the processor supply chain (especially in certain sectors, such as the automotive industry). The globalized world is also becoming less… global every month, so these moves by the US are hardly surprising. Last week, the whole world was following the tensions between the US and China over Taiwan. And while there has been a lot of talk about dependence on manufacturers like TSMC, it shouldn’t be forgotten that China, too, has key plants on its soil whose work is essential to, for example, the launch of new iPhones. So it’s not particularly surprising that Apple has warned its suppliers that China has stepped up enforcement of a long-standing import rule “that parts and components made in Taiwan must be labeled as being made in either ‘Taiwan, China’ or ‘Chinese Taipei.’” When you are sitting on a ticking bomb, nobody wants to hand the other side a pretext. Especially since China has criticized even the CHIPS Act mentioned above as “isolationist.”

On that note, I’ll close this section by mentioning that interesting things are also happening in China’s integrated circuit industry. According to MIT Technology Review, at least four executives of several top semiconductor funds have been arrested on corruption charges. The backdrop is familiar: as in the US, the Chinese government is pouring subsidies into semiconductor companies. The MIT article describes how these initiatives are developing at the intersection of private industry and public money in the Middle Kingdom, which makes it interesting reading for anyone trying to understand the Chinese technology market better.

Sources

- Political Chips

- Biden signs $280 billion CHIPS and Science Act

- Breaking Down the CHIPS and Science Act

- Senate Passes $280 Billion Industrial Policy Bill to Counter China

- Apple tells suppliers to use ‘Taiwan, China’ or ‘Chinese Taipei’ to appease Beijing

- Corruption is sending shock waves through China’s chipmaking industry

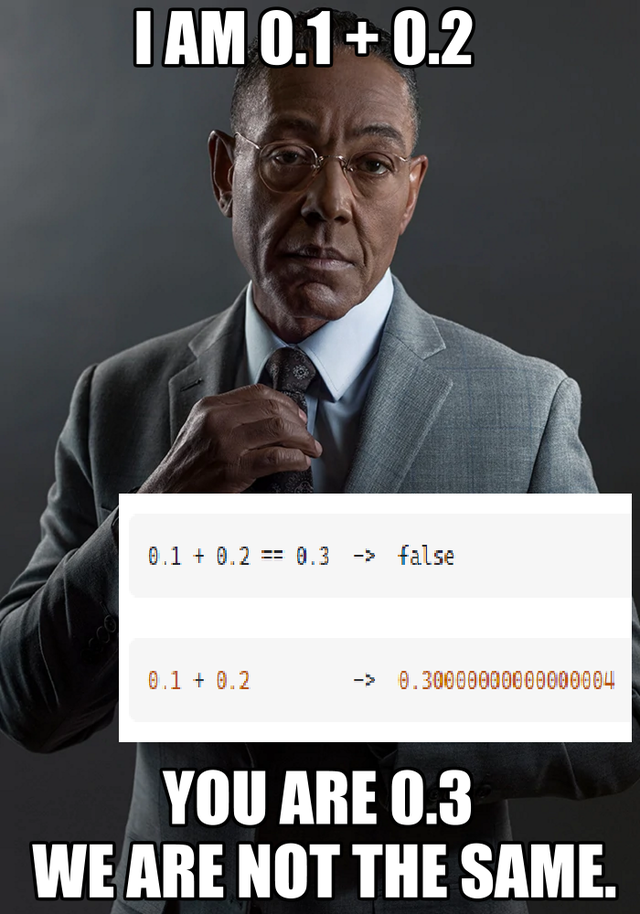

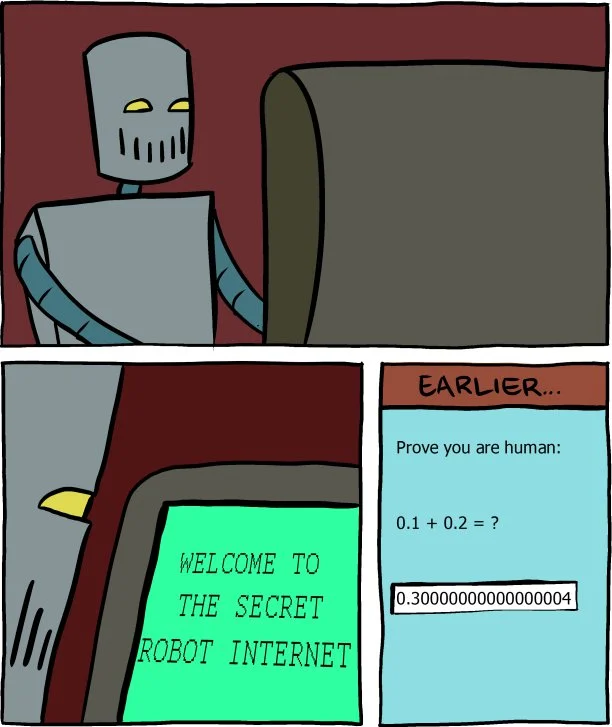

2. New standard for floating-point numbers

The relationship between floating-point numbers and computers is fascinating. On the one hand, the popular “floats” are everywhere; on the other, they cause plenty of problems and set traps that every novice programmer is bound to fall into at some point: “Subtraction in JavaScript is broken!”, “don’t use floats to represent money, because they are not precise enough”. We expect numerical operations to be exact, especially in the world of computers. Yet it turns out that in one of the most popular formats, rational numbers whose denominator (in lowest terms) is not a power of two, such as 0.1 (1/10), cannot be represented exactly. Why? That is a topic for a longer discussion; I recommend the explanations at 0.30000000000000004.com or in Floating Point Visually Explained, and if that is still not enough, What Every Computer Scientist Should Know About Floating-Point Arithmetic (but that one is heavy going, so read it at your own risk).
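To make the 0.1 problem concrete, here is a minimal Python sketch that uses only the standard library. The helper `has_finite_binary_expansion` is a hypothetical name introduced for illustration. It checks whether a fraction, which `Fraction` always keeps in lowest terms, has a power-of-two denominator. That is the necessary condition for an exact binary float. `Decimal(0.1)` then reveals the value actually stored for the literal `0.1`. The last lines show the classic sum and the usual decimal alternative for money.

```python
from decimal import Decimal
from fractions import Fraction


def has_finite_binary_expansion(value: Fraction) -> bool:
    """Hypothetical helper: True if the reduced denominator is a power of two.

    This is necessary for an exact binary float; an IEEE 754 double also
    needs the significand to fit in 53 bits and the exponent to stay in range.
    """
    d = value.denominator  # Fraction keeps values in lowest terms (5/10 -> 1/2)
    return d & (d - 1) == 0  # power-of-two test for a positive integer


# 1/10 has 5 in its denominator, so no finite binary expansion exists.
print(has_finite_binary_expansion(Fraction(1, 10)))  # False
print(has_finite_binary_expansion(Fraction(3, 8)))   # True

# What the literal 0.1 really stores: the nearest fraction with denominator 2**55.
print(Fraction(0.1))  # 3602879701896397/36028797018963968
print(Decimal(0.1))   # 0.1000000000000000055511151231257827021181583404541015625

print(0.1 + 0.2)         # 0.30000000000000004
print(0.1 + 0.2 == 0.3)  # False

# Decimal arithmetic built from strings stays exact for values like these.
print(Decimal("0.1") + Decimal("0.2") == Decimal("0.3"))  # True
```

Note that `Fraction(0.1)` passes the power-of-two test: the stored value is exactly representable, but it is not 1/10.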

Okay, but why am I bringing up one of computer science’s textbook problems today? After five years of work, the Posit standard, a proposal for better floating-point numbers, has been published. The main pitch for posits is that typical numerical calculations keep better accuracy for a given number of bits. Conversely, you can use fewer bits to reach a given level of accuracy. Posits are also supposed to be much simpler to implement than current floats, whose IEEE 754 standard is notoriously complicated (as those who dared to click the Oracle-hosted link above already know).
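For readers curious what posits look like at the bit level, here is a minimal Python decoder sketch. It assumes the 2022 standard’s fixed exponent size (`es = 2`) and ignores rounding and arithmetic entirely. `decode_posit` is a hypothetical helper written for illustration, not a library API. A posit packs four fields:

- a sign bit,
- a variable-length “regime” run that encodes `k`,
- up to two exponent bits `e`,
- a fraction `f`.

It decodes to 2^(4k + e) × (1 + f). The regime is short for values near 1, so a 16-bit posit has 11 fraction bits there, compared with 10 in IEEE half precision. The last lines check this.

```python
import struct
from fractions import Fraction
from typing import Optional

ES = 2  # exponent-field width; assumed fixed at 2, as in the 2022 Posit standard


def decode_posit(bits: int, n: int = 8) -> Optional[Fraction]:
    """Hypothetical helper: decode an n-bit posit exactly (None stands for NaR)."""
    mask = (1 << n) - 1
    bits &= mask
    if bits == 0:
        return Fraction(0)
    if bits == 1 << (n - 1):
        return None  # NaR, "Not a Real": the single exceptional value
    negative = bool(bits >> (n - 1))
    if negative:
        bits = (-bits) & mask  # negatives are stored as two's complement
    # Bits after the sign bit, most significant first.
    rest = [(bits >> i) & 1 for i in range(n - 2, -1, -1)]
    # Regime: a run of identical bits, ended by the opposite bit (or the end).
    run = 1
    while run < len(rest) and rest[run] == rest[0]:
        run += 1
    k = run - 1 if rest[0] == 1 else -run
    tail = rest[run + 1:]  # skip the terminating regime bit
    # Exponent: up to ES bits; bits cut off by the end of the word count as zeros.
    e = 0
    for b in tail[:ES]:
        e = (e << 1) | b
    e <<= ES - len(tail[:ES])
    # Fraction: whatever bits remain, with an implicit leading 1.
    f = sum((Fraction(b, 2 ** i) for i, b in enumerate(tail[ES:], start=1)), Fraction(0))
    value = Fraction(2) ** (k * 2 ** ES + e) * (1 + f)
    return -value if negative else value


print(decode_posit(0x40))  # 1
print(decode_posit(0x7F))  # 16777216, the largest 8-bit posit (2**24)
print(decode_posit(0xC0))  # -1

# Step just above 1.0: 16-bit posit vs IEEE 754 half precision (also 16 bits).
posit_step = decode_posit(0x4001, n=16) - 1
half_step = struct.unpack("<e", struct.pack("<H", 0x3C01))[0] - 1.0
print(posit_step, half_step)  # 1/2048 0.0009765625
```

The flip side is “tapered” precision. Far from 1 the regime grows and eats fraction bits, so the accuracy advantage applies to typical magnitudes, not to every range.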

Do I see a great future for posits? Given how hard it is for us as a civilization to iterate on standards, probably not “on my watch”. After all, most existing hardware has FPUs (Floating-Point Units) built for IEEE float computations, so the new standard faces an uphill battle from the start. Still, there’s no denying that the very existence of such alternatives is intriguing in its own way, so I couldn’t resist including this news in our round-up. And if you are in the mood for more, a very interesting discussion of the new standard took place on the Lobsters aggregator (lobste.rs).

Sources

- POSIT Standard

- 0.30000000000000004.com

- Floating Point Visually Explained

- What Every Computer Scientist Should Know About Floating-Point Arithmetic

3. Will there be job openings for DALL-E 2 “prompters” in the future?

We started with one of my favorite topics, so we’ll end with another one. After all, it’s the 100th edition, and I get to celebrate too.

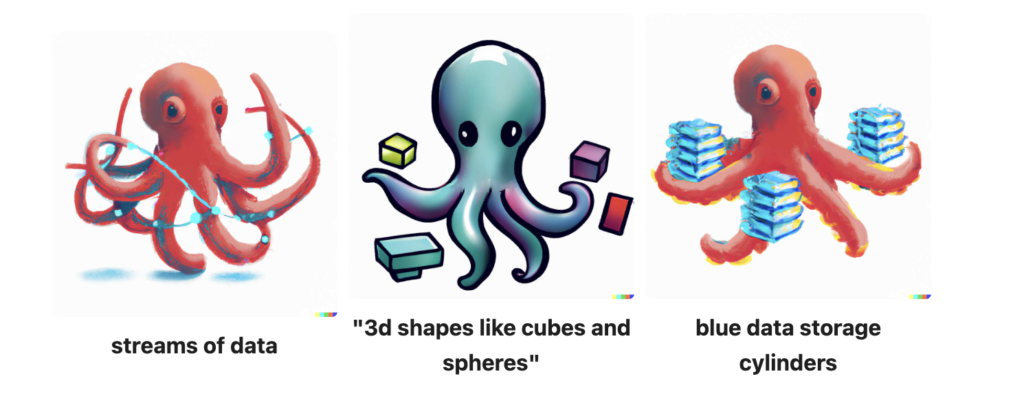

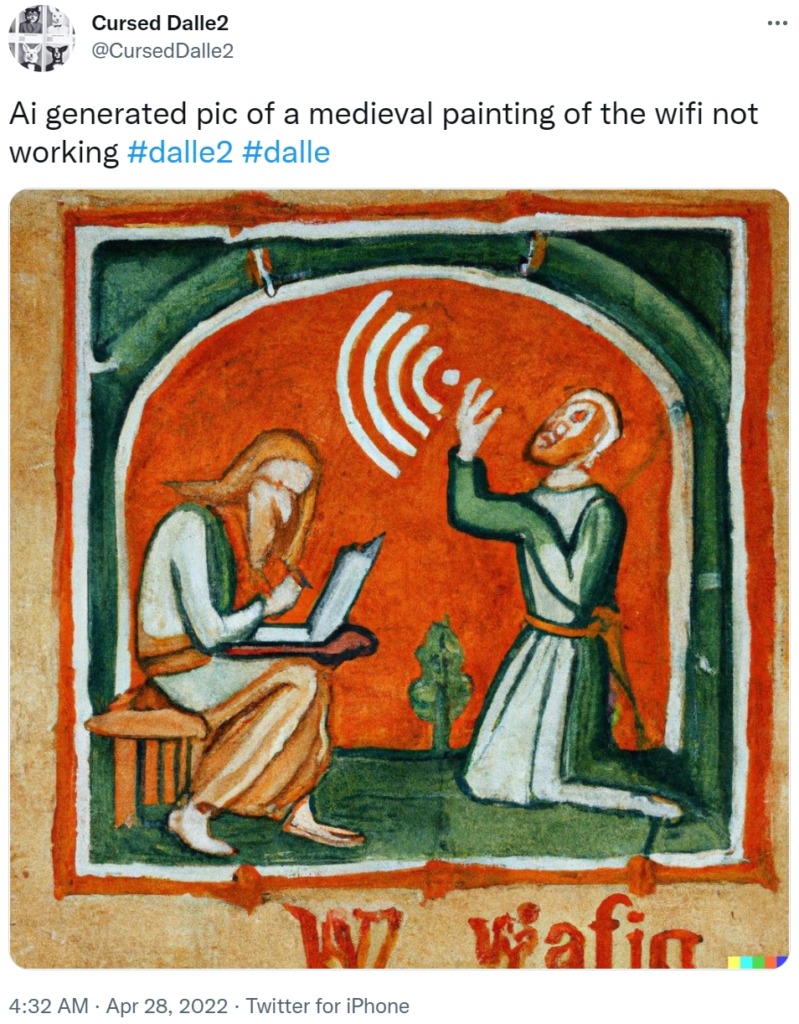

There is no shortage of interesting, creative uses of DALL-E on the web; people have long since moved beyond simply generating eye-catching images. It turns out the model’s capabilities can also be put to more practical use, such as generating a project logo. The process of creating one, and the challenges the author ran into along the way, are described in How I Used DALL-E 2 To Generate The Logo for OctoSQL. If you also need a logo for a side project, the post suggests a few tricks you can borrow.

Creating a usable logo is already a pretty “big deal,” so it made me wonder whether we will someday see roles such as “AI prompt author”. After all, a free publication called the DALL-E 2 Prompt Book was recently released. It lays out in a structured way how to build queries that get the desired result from the model. It turns out that although DALL-E’s output looks highly random at first glance, you can add a pinch of predictability to the whole process.

Amid all this talk about DALL-E and AI-generated art, a conversation that until recently seemed hot has somehow gotten lost (probably under the influence of the spectacular images the model produces): are DALL-E’s works “Art”? I don’t want to descend into definitions of what “Art” is here. From my perspective, though, an interesting observation in this context is that there are signs DALL-E 2 is just “gluing things together” without understanding their relationships. That is the title of an article arguing that, unlike us humans, DALL-E has no real grasp of how objects relate to one another and simply uses a slightly cleverer “copy-paste” method. The article contains quite a few amusing failure cases showing how readily the model loses track of context. Of course, this argument alone will not settle the debate, but I suspect it will hand the “reductionists” some ammunition.

And we’ll end today’s edition with another OpenAI model. I’ve never played Minecraft; I’m saving that world to explore together with my daughter when she gets a little older. That doesn’t change the fact that Minecraft remains a fascinating game after all these years, offering players an enormous range of possibilities. That makes it all the more delightful that OpenAI managed to teach an AI to play Minecraft or, more precisely, to craft some of the most complex tools in the game. You can see the whole thing in a video from Two Minute Papers, where Károly Zsolnai-Fehér walks through the details. What a time to be alive!

Sources

- DALL-E 2 Just ‘Gluing Things Together’ Without Understanding Their Relationships?

- OpenAI’s New AI Learned To Play Minecraft!

- DALL-E 2 Prompt Book

- How I Used DALL·E 2 to Generate The Logo for OctoSQL

PS: We apologize for spamming you with notifications. It happened because vived.io has switched its language to English, but we wanted to keep a working Polish version available, and things got a bit out of hand 😉

PS2: We are working on a bilingual RSS feed. In the meantime, if you read us via RSS, you can go to the blog page, where you will find an option to switch the language.