Last week, we didn’t have our summary, so I hope you missed it! As a reward for your patience, I’ve got a ton of topics for you – the future of Project Amber and the present of Panama, a new (controversial) license for Akka, and several releases, including the official launch of Níma.

PS: If you are looking for a Polish version, you will find one here. If you are reading this post from within the Vived app – Polish translations are coming back soon 😉

1. Can the Panama Project serve as a NIO 2.0?

Before we dive into the flood of news (and there’s been a bit of that over the past two weeks…), as a warm-up I wanted to share a fresh approach to Project Panama. For a long time, I associated Panama only with applications that require access to native memory, other system resources, or inter-process communication. This is largely because, in my mind, Panama was a collective abstraction over JNI and sun.misc.Unsafe, wrapped in a new, safer API.

I suspect I’m not the only one. That’s why the title Gavin Ray gave his article, Panama: Not-so-Foreign Memory, hits the mark: Project Panama brings more than just better native interoperability, which makes it worthwhile even where we would not expect it at first glance.

Have you ever played with NIO? The acronym stands for New I/O (often read as Non-blocking I/O), one of the most important additions to Java back in the 1.4 days, later extended as NIO.2 in Java 7. If you don’t have much experience with it, don’t feel FOMO: it is an API used mainly by asynchronous libraries like Netty or by database drivers. For the OrdinaryUser™️ it is hardly approachable, and you are unlikely to want to see it in typical “business code”.

This is why I liked Gavin Ray’s text so much: he shows how the MemorySegment API introduced in Panama can be used in places where NIO was previously necessary. Using the database drivers mentioned above as an example, he shows that one can change the approach and treat data not as a continuous stream of bytes but as discrete packets with a specific structure. This way, we get much more support from the language, plus additional safety in the form of typed memory structures. You can find more in Gavin’s text, which is surprisingly accessible given the topics it touches on.

At least provided you don’t associate Panama mostly with hats 🤷🏻
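To give a feel for the idea, here is a minimal sketch of my own (not code from Gavin’s article). It describes a hypothetical packet header, laid out as [length:int][type:short][flags:short] in big-endian order, as a named struct layout, then writes and reads it through a MemorySegment. It assumes JDK 22 or newer, where the Foreign Function & Memory API is final; the preview versions available when this post was written used some different names (for example, for creating arenas). With NIO, the same header would typically be a ByteBuffer read with hand-maintained offsets such as buf.getShort(4).

```java
import java.lang.foreign.Arena;
import java.lang.foreign.MemoryLayout;
import java.lang.foreign.MemoryLayout.PathElement;
import java.lang.foreign.MemorySegment;
import java.lang.foreign.ValueLayout;
import java.nio.ByteOrder;

// Hypothetical wire-format header: [length:int][type:short][flags:short], big-endian.
// Assumes JDK 22+ (final FFM API); not taken from any real database driver.
class PacketHeaderDemo {
    static final ValueLayout.OfInt BE_INT = ValueLayout.JAVA_INT.withOrder(ByteOrder.BIG_ENDIAN);
    static final ValueLayout.OfShort BE_SHORT = ValueLayout.JAVA_SHORT.withOrder(ByteOrder.BIG_ENDIAN);

    // The packet structure is described once, with named fields...
    static final MemoryLayout HEADER = MemoryLayout.structLayout(
            BE_INT.withName("length"),
            BE_SHORT.withName("type"),
            BE_SHORT.withName("flags"));

    // ...and field offsets are derived from the layout instead of being hard-coded.
    static final long LENGTH = HEADER.byteOffset(PathElement.groupElement("length"));
    static final long TYPE = HEADER.byteOffset(PathElement.groupElement("type"));
    static final long FLAGS = HEADER.byteOffset(PathElement.groupElement("flags"));

    static void write(MemorySegment seg, int length, short type, short flags) {
        seg.set(BE_INT, LENGTH, length);
        seg.set(BE_SHORT, TYPE, type);
        seg.set(BE_SHORT, FLAGS, flags);
    }

    static String read(MemorySegment seg) {
        return seg.get(BE_INT, LENGTH) + "/" + seg.get(BE_SHORT, TYPE) + "/" + seg.get(BE_SHORT, FLAGS);
    }

    static String roundTrip(int length, short type, short flags) {
        // A confined arena frees its off-heap memory deterministically when closed.
        try (Arena arena = Arena.ofConfined()) {
            MemorySegment seg = arena.allocate(HEADER);
            write(seg, length, type, flags);
            return read(seg);
        }
    }

    public static void main(String[] args) {
        System.out.println(HEADER.byteSize());                        // prints 8
        System.out.println(roundTrip(42, (short) 7, (short) 1));      // prints 42/7/1
    }
}
```

The design point is that offsets come from the layout rather than from magic numbers, so adding or reordering a field changes every derived offset in one place.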

Sources

2. What does the future hold for Project Amber?

After Panama, we come naturally to Project Amber, which aims to provide the best possible developer experience to the entire community. Brian Goetz, a true JVM titan, must be either bored or procrastinating on Valhalla (sorry Brian, I had to), because in recent weeks Amber’s mailing lists have been flooded with new ideas from him. So let’s take a look at what the proposals contain.

Let’s start with the so-called “unnamed variables.” If you have any experience with Scala then, first of all, there will be a little more in this issue for you, and secondly, you are probably familiar with the underscore operator _. It is used when the name of a parameter is not essential to the code itself; a somewhat simpler counterpart of Scala’s usage is the implicit it parameter in Kotlin and Groovy. Java has historically made several approaches to a similar construction, but ultimately nothing came of them. Now Brian would like to revisit the topic and is asking for feedback on various potential uses of it. If you’re curious why this is complicated enough that the initiative has already died several times, I encourage you to check the thread on the mailing list.
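For those who haven’t seen the idea in practice, here is a sketch of the form it eventually took: unnamed variables and patterns were finalized in JDK 22 (JEP 456), so the code below assumes JDK 22+ and may differ in details from the variants debated in the thread. UnnamedDemo, Point and the helper methods are hypothetical names for illustration.

```java
import java.util.List;
import java.util.Map;

// Assumes JDK 22+, where unnamed variables and patterns (JEP 456) are final.
record Point(int x, int y) {}

class UnnamedDemo {
    // Enhanced for loop: we only count elements; the element itself is irrelevant.
    static int count(List<String> items) {
        int n = 0;
        for (String _ : items) {
            n++;
        }
        return n;
    }

    // catch block: the exception object is never used.
    static int parseOrDefault(String s, int fallback) {
        try {
            return Integer.parseInt(s);
        } catch (NumberFormatException _) {
            return fallback;
        }
    }

    // Lambda: only the key matters; the value parameter is unnamed.
    static int sumKeys(Map<Integer, String> m) {
        int[] total = {0};
        m.forEach((k, _) -> total[0] += k);
        return total[0];
    }

    // Record pattern: the match-all _ skips the component we don't care about.
    static int xOf(Object o) {
        return switch (o) {
            case Point(int x, _) -> x;
            default -> -1;
        };
    }

    public static void main(String[] args) {
        System.out.println(count(List.of("a", "b", "c")));     // prints 3
        System.out.println(parseOrDefault("oops", 0));         // prints 0
        System.out.println(sumKeys(Map.of(1, "a", 2, "b")));   // prints 3
        System.out.println(xOf(new Point(3, 4)));              // prints 3
    }
}
```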

Another email concerns the further development of pattern matching in Java. After switch and records, Brian Goetz plans to ease the destructuring of another Java entity: arrays. As he rightly points out, arrays (like records) are basically just another kind of container. Hence the plan to allow different matching behavior based on an array’s size and contents. The email also shows synergies with the _ operator from the previous email.
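To make the starting point concrete, here is a sketch of how matching on an array’s type, length and contents has to be written by hand in Java today (JDK 16+, using pattern matching for instanceof). ArrayMatchToday, asPair and head are hypothetical names chosen for illustration; chains like these are what the proposed array patterns aim to collapse into a single pattern.

```java
// Current Java (JDK 16+): type test, shape test and element access are separate steps.
class ArrayMatchToday {
    // Returns "key=value" for a two-element String[], or null for anything else.
    static String asPair(Object x) {
        if (x instanceof String[] arr   // type test
                && arr.length == 2) {   // shape (length) test
            String key = arr[0];        // manual "destructuring"
            String value = arr[1];
            return key + "=" + value;
        }
        return null;
    }

    // Returns the first element of any non-empty String[], ignoring the rest.
    static String head(Object x) {
        if (x instanceof String[] arr && arr.length >= 1) {
            return arr[0];
        }
        return null;
    }

    public static void main(String[] args) {
        System.out.println(asPair(new String[] {"lang", "java"}));   // prints lang=java
        System.out.println(asPair(new String[] {"a", "b", "c"}));    // prints null
        System.out.println(head(new String[] {"first", "second"}));  // prints first
    }
}
```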

In general, the whole thing is supposed to replace fairly complex constructs (written here in the notation used on the mailing list, which is not valid Java today), for example:

```
x instanceof String[] arr
    && arr.length matches L
    && arr.length >= n
    && arr[0] matches P0
    && arr[1] matches P1
    ...
    && arr[n] matches Pn
```

with a simple

```
String[L] { P0, .., Pn }
```

From here, we move seamlessly to the last of the Amber-related emails, which aims to introduce pattern matching for primitive types. Using the instanceof syntax above is problematic because… primitive types don’t have instances, and the operator’s very name clashes with that fact. It turns out that finding a good alternative is not simple at all, given Java’s complicated autoboxing and casting rules. As with the previous proposals, the discussion here is super interesting (along the way, it drifted into heavily philosophical arguments about the real meaning of the instanceof operator), and if you want to learn some of Java’s obscure details, I highly recommend wading through this thread of nearly fifty emails.
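For contrast with the proposal, here is how “does this int fit in a byte?” has to be expressed in Java today, as a hedged sketch with hypothetical names: instanceof works only on reference types, so the check is a manual range test followed by a cast, and boxing only helps when matching on the wrapper class. (The idea was later previewed in JDK 23 as JEP 455, which allows forms along the lines of value instanceof byte b; the code below deliberately avoids preview features.)

```java
// Current Java, no preview features. Names are hypothetical.
class PrimitiveMatchToday {
    // instanceof cannot be applied to an int, so "fits in a byte/short?" is a range check.
    static String classify(int value) {
        if (value >= Byte.MIN_VALUE && value <= Byte.MAX_VALUE) {
            byte b = (byte) value;   // safe: the range check guarantees no information is lost
            return "byte " + b;
        }
        if (value >= Short.MIN_VALUE && value <= Short.MAX_VALUE) {
            short s = (short) value;
            return "short " + s;
        }
        // Without the checks, a plain cast would silently wrap, e.g. (byte) 200 == -56.
        return "int " + value;
    }

    // With a reference type, autoboxing lets instanceof work, but only on the wrapper class.
    static String boxed(Object o) {
        if (o instanceof Integer i) {
            return "Integer " + i;
        }
        return "other";
    }

    public static void main(String[] args) {
        System.out.println(classify(100));      // prints byte 100
        System.out.println(classify(1000));     // prints short 1000
        System.out.println(classify(100_000));  // prints int 100000
        System.out.println(boxed(42));          // prints Integer 42
    }
}
```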

And while we’re on mailing lists, here’s a LastOneThing™️: it has been announced that in JDK 20, javac will drop support for -source/-target/--release 7, so we will finally lose the ability to compile our builds for JDK 7. As JDK 7 itself is losing support completely, this decision de facto seals that fact on the JDK tooling side.

Sources

- Unnamed variables and match-all patterns

- FYI, planning to drop support for -source/-target/--release 7 from javac in JDK 20

- Array patterns (and varargs patterns)

- Primitives in instanceof and patterns

3. Akka ceases to be fully open-source, changes license

With the heavily technical stuff behind us, it’s time to move on to last week’s official drama.

Akka is one of the mainstays of Scala these days and one of the main reasons companies are interested in the language. It is one of the industry’s best implementations of the actor model, and combining it with a mature, well-known platform such as the JVM makes for a very tempting package. That’s why the news that, starting with version 2.7, Akka is changing its license from Apache 2.0 to BSL v1.1 (Business Source License) reverberated across the industry. The license change closes the phase of Akka’s development based on the so-called “Open Core” model.
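For readers who haven’t worked with actors, here is a deliberately tiny sketch of the idea in plain Java (JDK 21+). It is not Akka’s API and skips everything Akka actually adds (supervision, distribution, multiplexing many actors onto few threads); this toy uses one platform thread per actor. CounterActor and its message types are hypothetical names. The point is the model itself: private state, a mailbox, and messages processed one at a time.

```java
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.LinkedBlockingQueue;

// Minimal actor sketch in plain Java (JDK 21+), NOT Akka's API.
class CounterActor implements AutoCloseable {
    // The messages this actor understands.
    sealed interface Msg permits Increment, Get, Stop {}
    record Increment(int by) implements Msg {}
    record Get(CompletableFuture<Integer> reply) implements Msg {}
    record Stop() implements Msg {}

    private final BlockingQueue<Msg> mailbox = new LinkedBlockingQueue<>();
    private int count = 0; // only ever touched by the worker thread, so no locks are needed
    private final Thread worker;

    CounterActor() {
        worker = new Thread(this::loop);
        worker.start();
    }

    // Sending is asynchronous: the caller just drops a message into the mailbox.
    void tell(Msg msg) {
        mailbox.add(msg);
    }

    // Messages are taken from the mailbox and processed one at a time, in arrival order.
    private void loop() {
        try {
            while (true) {
                switch (mailbox.take()) {
                    case Increment inc -> count += inc.by();
                    case Get get -> get.reply().complete(count);
                    case Stop stop -> { return; }
                }
            }
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
    }

    @Override
    public void close() throws InterruptedException {
        tell(new Stop());
        worker.join();
    }

    // Several threads send messages concurrently; the actor still counts correctly.
    static int demo(int senders, int incrementsEach) {
        try (CounterActor actor = new CounterActor()) {
            Thread[] threads = new Thread[senders];
            for (int i = 0; i < senders; i++) {
                threads[i] = new Thread(() -> {
                    for (int j = 0; j < incrementsEach; j++) {
                        actor.tell(new Increment(1));
                    }
                });
                threads[i].start();
            }
            for (Thread t : threads) {
                t.join();
            }
            CompletableFuture<Integer> reply = new CompletableFuture<>();
            actor.tell(new Get(reply)); // queued after every Increment, so it sees the final count
            return reply.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
    }

    public static void main(String[] args) {
        System.out.println(demo(4, 1000)); // prints 4000
    }
}
```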

What does BSL mean for Akka in practice? New versions will still be released under Apache 2.0, but with a considerable delay of three years. Until then, each new version will be made available with its sources, but it will be free to use only in non-production environments. If we want to use Akka in a production system and our company’s annual revenue exceeds $25 million, we must pay license fees. Prices start at around $2,000 per processor core, where a core means a hardware core, vCore, or vCPU. If we want to modify Akka for our own needs, the license will cost us $72,000. The whole thing is a bit more nuanced, so for pricing details, I refer you here.

The makers of Akka have published a dedicated post explaining their decision. Inside, we find the classic problem of a small company whose output is used by large corporations that give essentially nothing back. Akka has become an artifact of its time: today, popular open-source projects like Deno or Vercel enter the venture capital market very quickly and immediately try to monetize themselves, for example by building a related infrastructure product. Lightbend also tried this path, investing in Akka Serverless, which was renamed Kalix in May this year and released as a separate product. Apparently, it’s hard to do that retroactively.

I realize how much panic Lightbend’s decision has caused in the community (the number of discussions on popular aggregators testifies to this). Still, the decision is understandable. Akka simply “gets the job done”, which is why it is one of Scala’s backbones. Lightbend is also not the first company to make a similar move. The beginning of last year was marked by the Amazon vs. Elastic conflict, in which the latter switched to the SSPL (Server Side Public License), aimed squarely at limiting cloud providers’ use of open-source projects. At the same time, I am somewhat concerned that the license may become a barrier to entry for many smaller (and probably larger) companies, ones that, after initial “capacity planning”, decide to look at other solutions or a different architecture altogether. There’s a reason why typically only banks and other large entities use OracleDB.

Interestingly, this move affects more than just direct Akka users. For example, Apache Flink, which uses Akka internally, informed its community that the project will stay on 2.6, the last Apache-licensed version, while it considers what to do next. We will probably see more problems like this across the community.

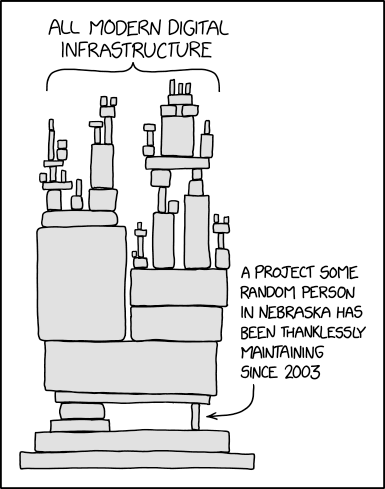

Finally, I have one more interesting, non-JVM link for you. There has been a long-running discussion in the community about ways to fund large and small open-source projects. It turns out that, as a fairly creative industry, we’ve developed quite a few models. A great aggregator of them is a repository with the charming name Lemonade Stand (harkening back to the best capitalist traditions). Of course, I am not suggesting that Akka should use these tactics to fund its maintenance. Still, I suspect that those of our readers who run open-source projects will find something there that lets the community compensate them for the time and effort they put in.

PS: VirtusLab, the company where I work, is a Lightbend Partner. Everything you have read above reflects my private opinions and should not be taken as the position of the organization.

Sources

- Lightbend Changes its Software Licensing Model for Akka Technology

- Regarding Akka’s licensing change

- A handy guide to financial support for open source

- Why We Are Changing the License for Akka

4. Release Radar

I was away for two weeks, which meant that not only did a lot happen, but there was also a whole batch of new releases of popular projects.

Scala 3.2

Since there was so much about Akka today, let’s start with Scala. For a community hit by the pricey licensing decision mentioned above, the release of Scala 3.2.0 offers some consolation, as it brings quite a few interesting new features.

First, everyone whose favorite metric is test coverage will be pleased. Scoverage, the most popular Scala plugin for measuring it back in the Scala 2 days, relies heavily on compiler output, which has changed in the new Scala. Fortunately, Scala 3.2 introduces not only generation of the necessary output but also an sbt plugin that orchestrates the entire process. Another tooling-related addition is the new -Vprofile flag, which generates statistics on source code complexity.

The new Scala also brings a lot of syntax improvements that give Scala developers even more power: better code hinting, syntactic sugar for extension functions and for-comprehensions, and much more. For the details, I suggest you check the full release notes.

Quarkus 2.12.0

The new minor version of Quarkus, 2.12.0, is not so “minor”, especially for GraalVM and Kotlin users, as both have been updated (to versions 22.2 and 1.7, respectively), so we can take full advantage of what these releases bring. The most interesting changes, in my opinion, are smaller image sizes in GraalVM and better incremental compilation support in Kotlin. Microsoft SQL Server users, meanwhile, get an updated JDBC driver.

If you don’t use any of the above, the only thing the new release has to offer is support for Secret Keys in configuration files – a feature made available by the SmallRye Config release.

Helidon Níma Alpha Version

And finally, the real star: the official announcement of Helidon Níma. Yes, the same Helidon Níma we wrote about in the context of the “scoop” from EclipseConf, which has finally lived to see an official announcement and… a name. It turns out that Nima is actually Níma.

For now, an alpha version has been released, and we will have to wait a while for the full one. The developers have announced that work on a stable release should be finished roughly by the end of next year, in parallel with Helidon 4.0. For such an early stage of the project, we have already received quite a few technical details: the companion publication by Tomas Langer focuses on how Níma currently compares both to Helidon MP (MicroProfile) and to its direct competitor, Netty. As you can read there, one of Níma’s goals is to drive Netty entirely out of the Helidon ecosystem.
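Níma is built around Project Loom’s virtual threads rather than Netty’s event loop. As a rough illustration of the programming style this enables, and explicitly not Helidon’s API, here is a sketch in plain JDK 21 code: each simulated request gets its own virtual thread and simply blocks. VirtualThreadsSketch, handle and serve are hypothetical names, and the 50 ms sleep is a stand-in for something like a database call.

```java
import java.time.Duration;
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

// Plain JDK 21+ code, NOT Helidon Níma's API: blocking, thread-per-request style
// where every request runs on its own cheap virtual thread.
class VirtualThreadsSketch {
    // Hypothetical request handler that blocks, standing in for e.g. a database call.
    static int handle(int requestId) throws InterruptedException {
        Thread.sleep(Duration.ofMillis(50)); // parks the virtual thread, freeing its carrier thread
        return requestId * 2;
    }

    // Handles `requests` simulated requests concurrently and sums their results.
    static long serve(int requests) {
        // One new virtual thread per task; close() at the end of try waits for all tasks.
        try (ExecutorService executor = Executors.newVirtualThreadPerTaskExecutor()) {
            List<Future<Integer>> results = new ArrayList<>();
            for (int i = 0; i < requests; i++) {
                int id = i;
                results.add(executor.submit(() -> handle(id)));
            }
            long sum = 0;
            for (Future<Integer> f : results) {
                sum += f.get();
            }
            return sum;
        } catch (InterruptedException | ExecutionException e) {
            throw new IllegalStateException(e);
        }
    }

    public static void main(String[] args) {
        long start = System.nanoTime();
        long sum = serve(10_000);
        long elapsedMs = (System.nanoTime() - start) / 1_000_000;
        // The requests block concurrently, so the total is far below 10,000 x 50 ms.
        System.out.println("sum=" + sum + ", elapsed ~" + elapsedMs + " ms");
    }
}
```

The handler code stays plain and blocking; the cheapness of virtual threads is what lets a server afford one per request without an asynchronous framework underneath.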

I’m never going on vacation again 😅 I’m away for a while, then spend two days digging myself out from under the topics, and I still haven’t covered everything… But we’ll save that for next week.