In today’s edition, we’ll talk through the problems of voice assistants, a new tool from the creator of brew, and why no one loves Stable Diffusion 2.0.

1 Voice assistants: a failed experiment? Amazon and Google are quietly withdrawing from the market

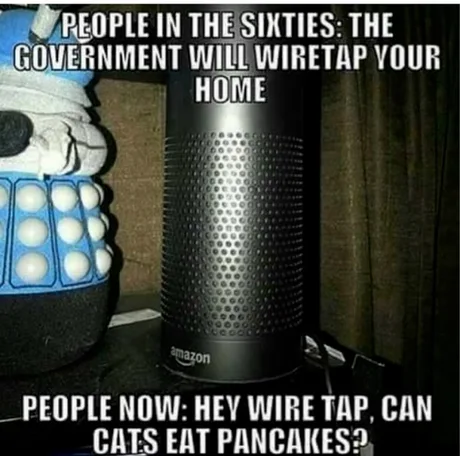

It’s no secret that the market is shrinking now, especially compared to the boom that 2021 brought. But what does it mean in practice when a company like Amazon lays off 10,000 people? On the one hand, there is a lot of talk that the companies were simply bloated and that the current situation lets them get rid of unprofitable departments, projects, and people easily and quickly. On the other hand, such deep cuts go hand in hand with reduced investment, especially in areas that are far from monetization. Taken together, the two hypotheses give us a much better understanding of what is happening to the voice assistant market.

Something that has been rumored for years has finally been made official – Amazon Alexa is a failure, a bottomless pit, and in the recent layoffs at Amazon it is this division that has been gutted the most. In the first quarter of this year alone, the Alexa division lost $3 billion, so as soon as the layoffs began, it was cut heavily. It turns out that despite nearly a decade on the market, Amazon never managed to monetize the voice assistant. The Alexa team sold its – genuinely fantastic – Echo hardware at a loss, hoping to make the money back later in other ways, a bit like with printers, game consoles, or Kindle readers. Unfortunately, that effective way of earning on loss-making technology never materialized.

So does this open up an opportunity for competitors to take a sizable chunk of the market from the existing leader? In better times, that would probably be the case, but the number two player – namely Google Assistant – doesn’t seem interested. After all, back in October there were already signals that Google was going to double down on its own Pixel-branded hardware while cutting support for Assistant specifically. Voice assistants were supposed to eventually become the center of our interaction with technology, yet they never really emerged from the narrow niche of operating home devices. Simply put, voice assistants never got their “Killer App.”

Sad. I’ll admit that I found the vision of futuristic assistants and Jetsons-like smart spaces eminently tempting. However, I have a feeling that their time simply hasn’t come yet, and given how much money has been poured into these ecosystems with meager returns, the next time anyone backs the topic with as much money as in the last decade may well be in my great-grandchildren’s time.

Sources

- Amazon Alexa is a “colossal failure,” on pace to lose $10 billion this year

- Report: Google “doubles down” on Pixel hardware, cuts Google Assistant support

2 Tea – (non)Package Manager to rule them all

As you have probably gathered from reading these reviews, I’m an (I’ll admit, quite satisfied) macOS user. A tool called brew certainly contributes to that contentment. For those who don’t know it – it’s a community package manager, which is probably my favorite tool of its kind on any system so far and is always the first thing I install after any fresh system installation. That’s why, when I heard about tea, a new tool from the brew developers, I couldn’t deny myself a taste of its poison (pun intended).

What is tea cli? As the developers write, instead of a package manager, we are dealing with a unified package infrastructure. What does this mean in practice? tea has been written in such a way that it can use the package managers of other ecosystems underneath, so it provides a common abstraction over them. In addition, it brings virtual environments and package isolation to everything, a concept probably familiar to at least all Python programmers.

What’s controversial about the whole project is that the creator is trying to fit it into the movement happening around Web3 and implement a blockchain-based protocol for rewarding Open-Source developers, recording on the chain the “value redistribution” that comes from the use of Open-Source software by for-profit companies. The idea is laudable, but whether they will ultimately succeed, time will tell. After the spectacular FTX collapse, it’s probably not a good time to promote yourself with decentralization and distributed ledgers. However, if you are curious about what the developers have in mind – you can read the whitepaper.

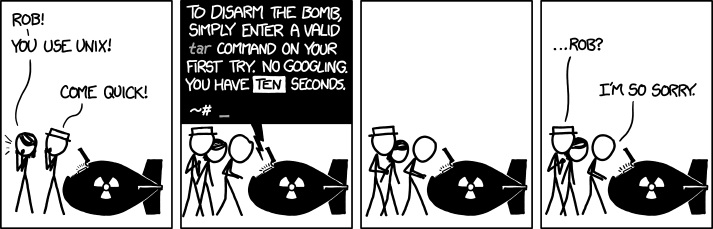

Continuing with the theme of shell tools, I wanted to toss you another one that fell into my hands last week. Cheat.sh is not a new project, but I’ll admit I hadn’t heard of it before, and it seems to solve a really common problem – no one can remember all the flags that are often necessary to use shell programs correctly. I myself have been using tldr.sh for years, but cheat.sh seems like an interesting alternative to it, with a ton of useful integrations.
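For the curious, here is a minimal Python sketch of how a cheat sheet could be pulled from the service, which is usually queried with something like `curl cheat.sh/tar`. The function names `cheat_url` and `fetch_cheat_sheet` are my own and purely illustrative, and the part about mimicking curl's User-Agent is an assumption about how the service picks its output format, so treat it as a starting point rather than an official client.

```python
from urllib.parse import quote
from urllib.request import Request, urlopen

CHEAT_SH = "https://cheat.sh"  # public cheat.sh endpoint


def cheat_url(topic: str) -> str:
    """Build the cheat sheet URL for a command such as 'tar' (hypothetical helper)."""
    # The '?T' option asks for plain text without terminal color codes.
    return f"{CHEAT_SH}/{quote(topic)}?T"


def fetch_cheat_sheet(topic: str, timeout: float = 10.0) -> str:
    """Download a cheat sheet as text; requires network access."""
    # Assumption: the service answers curl-like clients with plain text,
    # so we present a curl-style User-Agent instead of Python's default.
    request = Request(cheat_url(topic), headers={"User-Agent": "curl/8.0"})
    with urlopen(request, timeout=timeout) as response:
        return response.read().decode("utf-8")
```

Calling `print(fetch_cheat_sheet("tar"))` should then print the same kind of examples you would get in the terminal, assuming the service is reachable.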

However, not everyone likes to use a terminal, so perhaps there are some “windowed” alternatives? Last week I came across the article How I’m a Productive Programmer With a Memory of a Fruit Fly, which I wanted to share with you. It describes Dash – an aggregator and search engine for developer documentation. Dash was one of the first tools I ever installed on macOS, only to basically never get the hang of it and stop using it. You’ll find it on any “best software for developers” list, but personally, I always felt overwhelmed by it and it never became part of my daily workflow. Reading Hynek Schlawack’s text, I have a feeling that this is because I never put enough heart into it. The article itself presents so many useful tricks and integrations that I think I’ll give the tool a second chance.

But let’s be honest – in an era of ubiquitous AI, cheat.sh and Dash seem to be the technology of A.D. 2010. To wrap up the topic of new console tools, at this week’s GitHub Next conference, the company showed off GitHub Copilot CLI. The new edition, instead of writing code for us, is supposed to tell us what command we should use to perform specific actions in the terminal. It’s not a totally new feature – I myself use the Warp terminal, which has such a capability built in – but those who don’t want to give up a familiar tool just to have AI tell them what parameters to use to properly unpack an archive should be pleased.

Sources

- tea cli

- A Decentralized Protocol for Remunerating the Open-Source Ecosystem

- How I’m a Productive Programmer With a Memory of a Fruit Fly

- GitHub Copilot CLI

- Cheat.sh

3 Why does everyone hate Stable Diffusion 2.0?

We have just covered Copilot, so let’s move smoothly on to generative graphics models, because a lot is happening in that space.

My beloved MidJourney, without much hype, has released a new version of its model (--v 4) and this one is simply amazing. When I compare what I tested about six months ago with what is possible today, it makes me feel a little... dizzy. To illustrate what kind of leap we are talking about:

donald duck in style of hr giger

Well, what can I tell you? I only have one reaction to this: 🤯

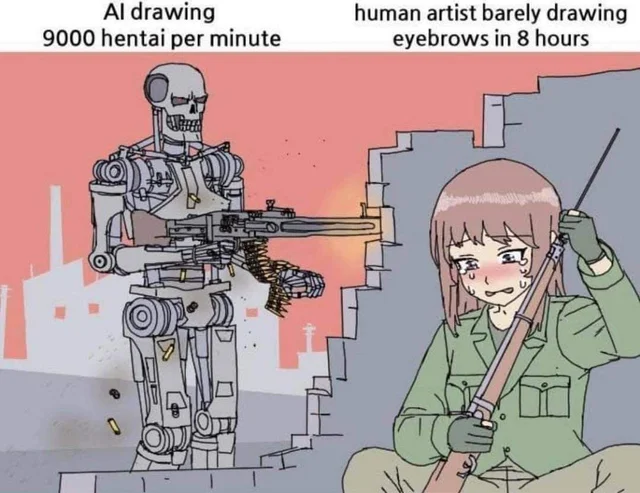

It also immediately makes me wonder about the fate of all concept artists. They, by the way, are noticing the problem too. The call to take legal action against the creators of algorithms that learn what graphics should look like from the artists’ own work is getting louder and louder.

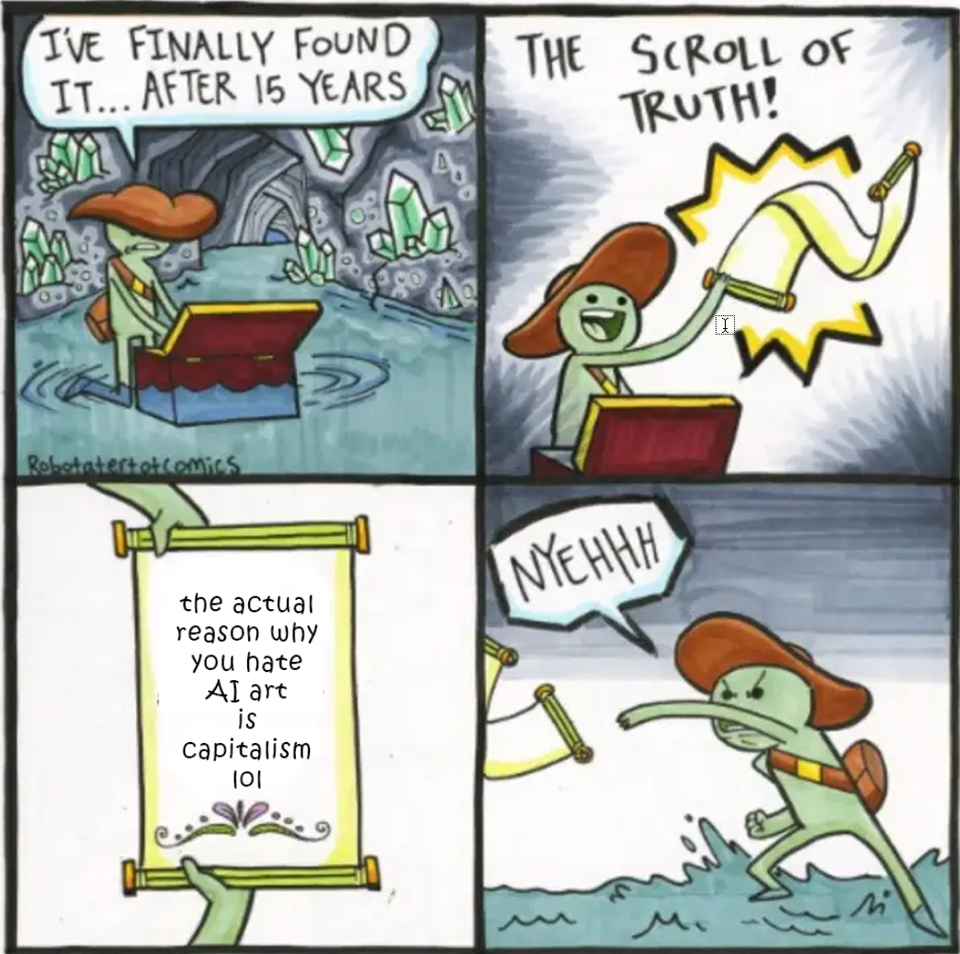

Algorithm developers, sensing trouble, are deciding to get ahead of it – and not all users of their products are happy.

Last week saw the second release of the Stable Diffusion model. Aside from additional features such as in-painting and super-resolution, the most significant changes came under the hood. Until now, basically all models used the CLIP encoder created by OpenAI underneath. However, CLIP had a transparency problem, as the datasets on which it was trained were not really known. This made it possible for the aforementioned artists to guess that their work was being used for training, which could be seen, for example, in the fact that specifying a particular artist in the prompt (as in my case above with HR Giger) produced work very close to that artist’s unique style. By switching to OpenCLIP, its new encoder, Stable Diffusion admittedly did not get rid of all the copyrighted work. But first, it threw out the labeling of specific images with the data of their creators (so “in style of” prompts stopped working), and second, it made it possible to report specific parts of the dataset for deletion if creators feel that their copyright has been violated. And third, it got rid of most of the NSFW graphics – Stable Diffusion has been significantly “gentrified” compared to the original. More details can be found in my new favorite newsletter, The Algorithmic Bridge.

The funniest thing about the whole situation is that no one (except Stable Diffusion’s lawyers) is really happy with the change. Stable Diffusion users are complaining about the censorship and about the fact that, from their perspective, the model has gotten worse instead of better. The move probably won’t entirely satisfy artists either. The fact that the models were stealing their individual style was certainly problematic, but a much bigger challenge is the very fact that generative models exist. These learn quickly and need fewer and fewer samples to improve further. Until now, the models could be accused of violating copyright law, but Stable Diffusion’s move and its transparency about the data used further weaken the artists’ arguments. And so what if the model today is slightly inferior to its predecessor? You’ll see what happens in six months…